I am proud to present the new, long-awaited Dataedo 23.2! This is probably our biggest release so far, with a lot of exciting new features and updates for you.

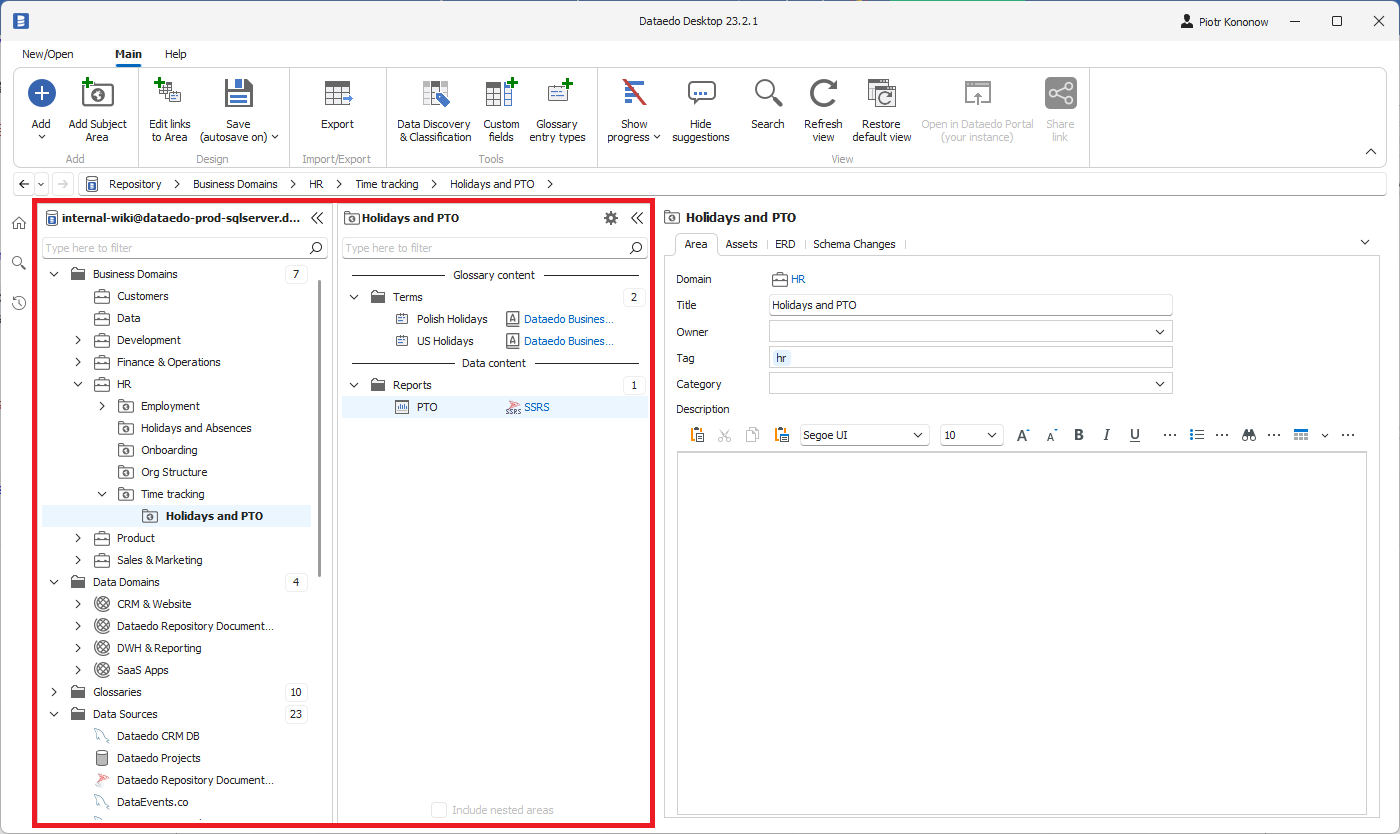

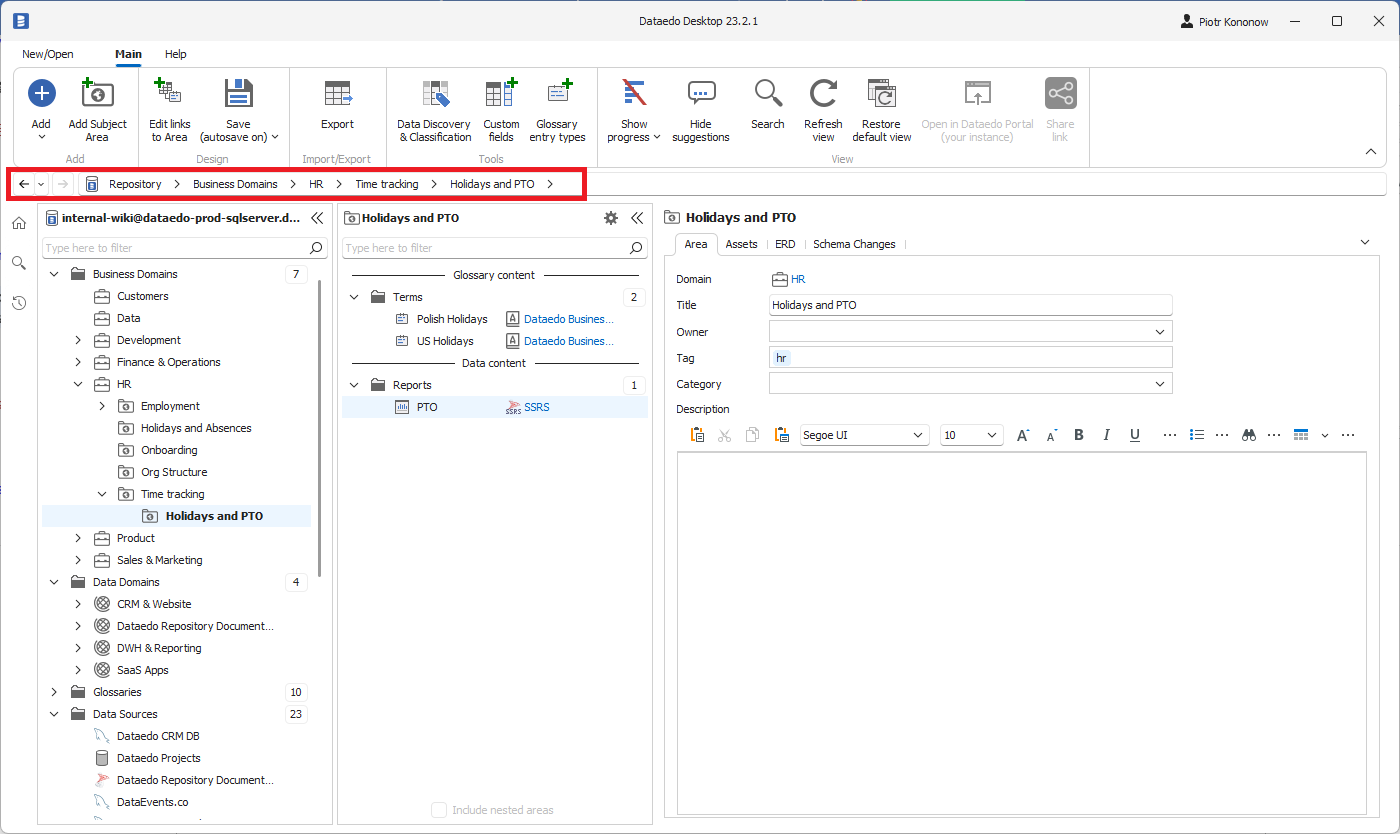

Domains and hierarchy of Subject Areas

The most groundbreaking new feature of Dataedo, and something many of our users have been waiting for, is domains. Domains replace the old subject areas and meet the following requirements:

- Added the ability to group assets into domains (domains are now separate from sources, whereas subject areas belonged to a source),

- Added a hierarchy of Subject Areas (each domain can have a hierarchy of subject areas up to 3 levels deep),

- Added the ability to define access to groups of data assets (each domain/subject area has its own permissions, and linked objects inherit those permissions),

- Data teams can build a wiki-like portal for business users, organized by a hierarchy of business domains.

We built not one but two types (hierarchies) of domains: Business Domains and Data Domains.

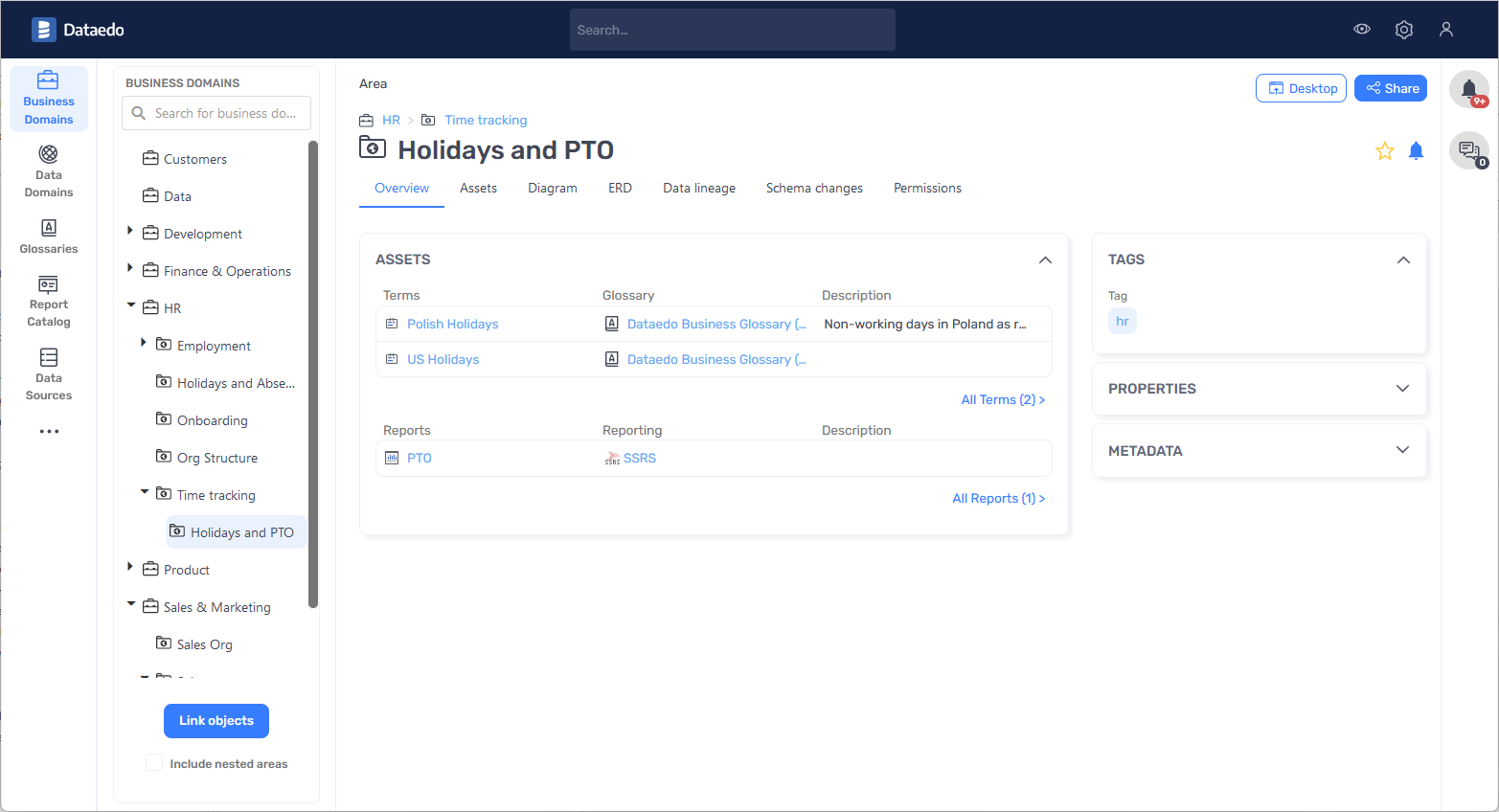

Business Domains

Business Domains, together with their hierarchy of Subject Areas, allow you to break down your organization's business processes and act as a wiki-like portal to the data and concepts in your organization. They can be an easy starting point with data for business users, or even the place where they learn about the various areas and processes of your business.

Data Domains

Data Domains, with their hierarchy of data areas, are meant to represent the Data Team's/IT view and organization of the data and applications. You can use them to represent Data Mesh domains or different departments, teams, applications, modules or even data products.

Permissions

From 23.2, objects inherit permissions from the domains and areas (business and data) they are linked to. Users who have access to a specific domain or area gain access to all the objects linked to it, so when you assign an object to a domain or area, every user with access to that domain or area can access the object. Therefore, domains can be a way of controlling access at the object level.
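To make the inheritance rule concrete, here is a minimal sketch in Python. The `Area` class and `can_access` function are hypothetical and are not Dataedo's data model. The sketch assumes that a user can see an object when it is linked to at least one domain or area the user has access to, and it treats the domain itself as level 0, with subject areas allowed at levels 1 to 3.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

MAX_SUBJECT_AREA_LEVELS = 3  # "up to 3 levels" of subject areas below a domain


@dataclass
class Area:
    """Hypothetical domain/subject area node (not Dataedo's actual model)."""
    name: str
    parent: Optional[Area] = None
    users: set[str] = field(default_factory=set)    # users granted access here
    objects: set[str] = field(default_factory=set)  # data assets linked here

    def level(self) -> int:
        # The domain itself is level 0; each subject area below it adds one.
        return 0 if self.parent is None else self.parent.level() + 1

    def add_child(self, name: str) -> Area:
        child = Area(name, parent=self)
        if child.level() > MAX_SUBJECT_AREA_LEVELS:
            raise ValueError(f"{name!r} would exceed {MAX_SUBJECT_AREA_LEVELS} levels")
        return child


def can_access(user: str, obj: str, areas: list[Area]) -> bool:
    """An object is visible if it is linked to any area the user has access to."""
    return any(obj in area.objects and user in area.users for area in areas)
```

For example, if `bob` is granted only the "Billing" subject area, he sees the objects linked to "Billing" but not those linked only to its parent "Finance" domain. Whether a grant on a parent domain should also cover its sub-areas is left out of the sketch on purpose.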

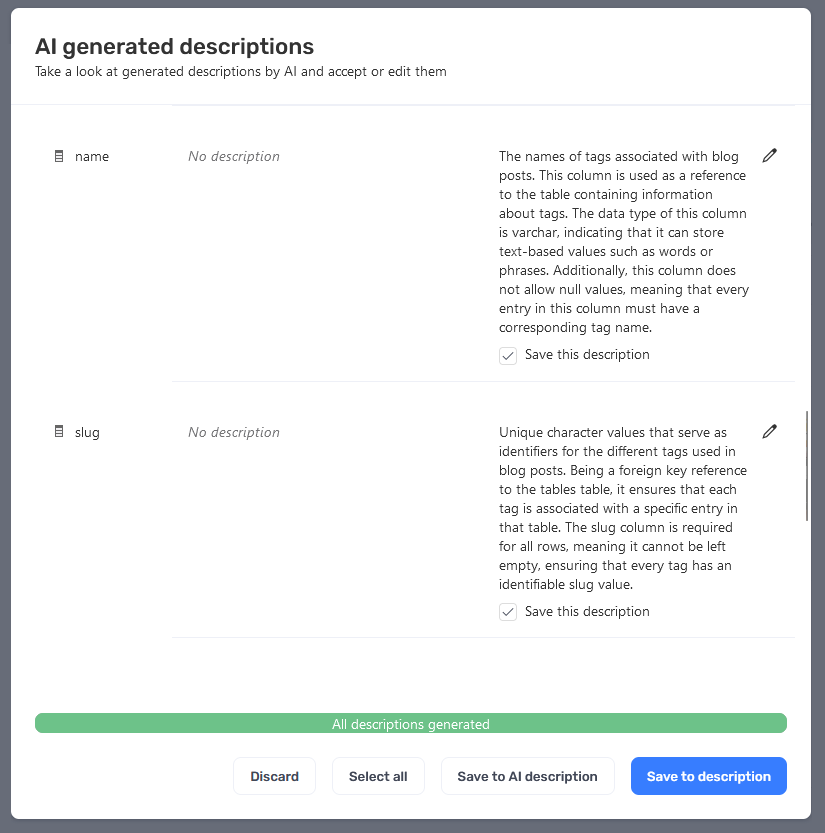

Auto documentation with AI

One of the most exciting features of 23.2 is AI Autodocumentation. Now you can plug your OpenAI GPT subscription into Dataedo and ask it to generate descriptions for you.

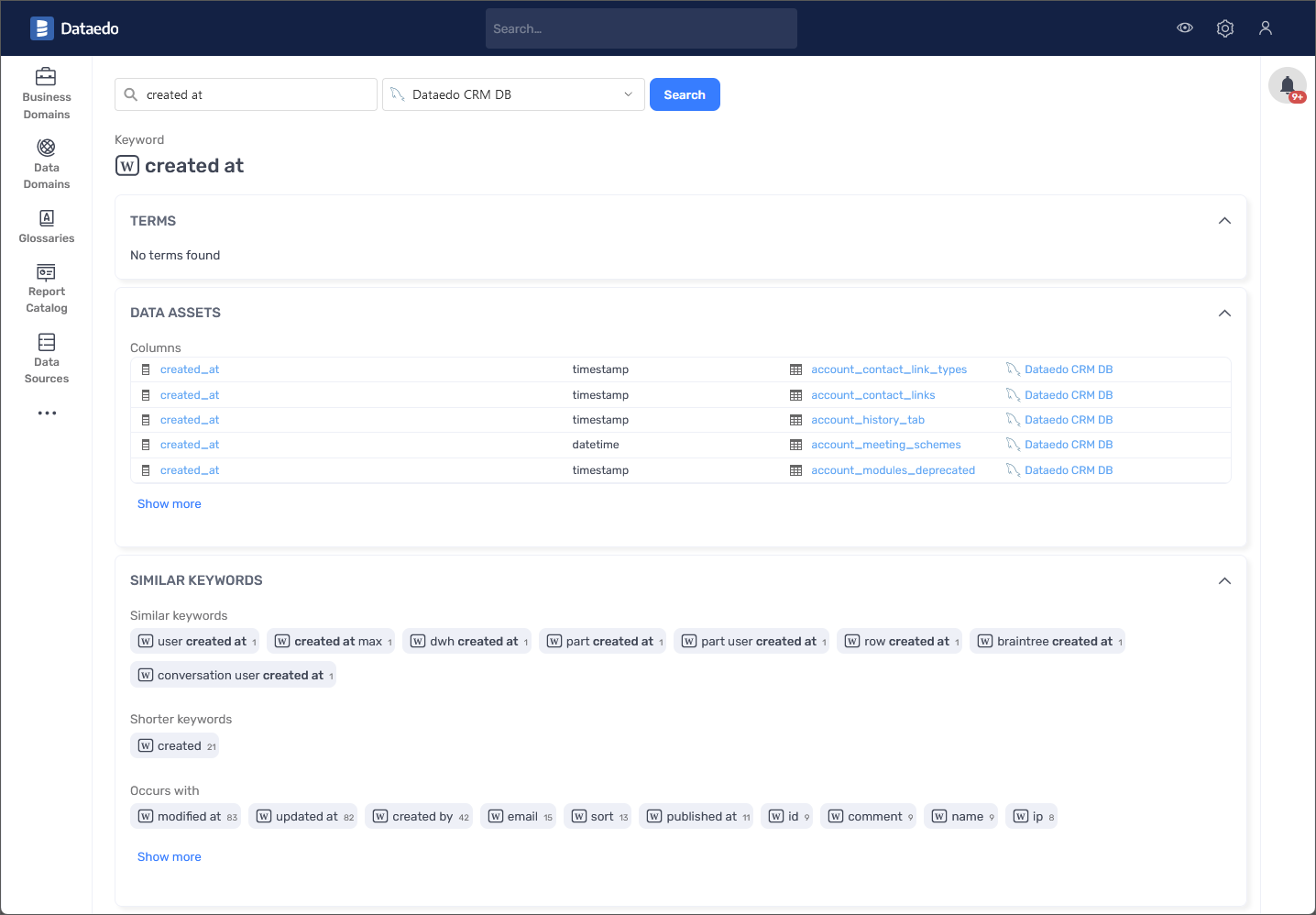

Keyword Explorer

Keyword Explorer is our own unique approach to browsing, searching and discovering metadata found in your databases and other sources. Dataedo analyzes the keywords that occur in table and column names. You can then explore each keyword: find its occurrences in dataset names, find glossary terms with that name, and find similar keywords.
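The release notes don't describe how Dataedo extracts keywords, so the following is only a minimal Python sketch of the general idea. It assumes that keywords come from splitting snake_case and camelCase identifiers. It splits names into keywords, indexes the names each keyword occurs in, and looks up similarly spelled keywords with the standard library's `difflib.get_close_matches`. All function names are hypothetical.

```python
import difflib
import re
from collections import defaultdict

# Break before a camelCase hump ("orderDate") or at the end of an acronym ("HTTPRequest").
_CAMEL_BOUNDARY = re.compile(r"(?<=[a-z0-9])(?=[A-Z])|(?<=[A-Z])(?=[A-Z][a-z])")


def split_keywords(name: str) -> list[str]:
    """'CustomerOrderID' -> ['customer', 'order', 'id']; 'order_date' -> ['order', 'date']."""
    spaced = _CAMEL_BOUNDARY.sub(" ", name)
    return [word.lower() for word in re.split(r"[^A-Za-z0-9]+", spaced) if word]


def build_keyword_index(names: list[str]) -> dict[str, list[str]]:
    """Map each keyword to the table/column names in which it occurs."""
    index = defaultdict(list)
    for name in names:
        for keyword in dict.fromkeys(split_keywords(name)):  # de-duplicate, keep order
            index[keyword].append(name)
    return dict(index)


def similar_keywords(keyword: str, index: dict[str, list[str]], cutoff: float = 0.8) -> list[str]:
    """Other keywords whose spelling is close to this one (e.g. plurals, typos)."""
    matches = difflib.get_close_matches(keyword, list(index), n=5, cutoff=cutoff)
    return [m for m in matches if m != keyword]
```

With the names `Customer`, `CustomerOrderID`, `order_date` and `Customers`, the keyword `order` points to `CustomerOrderID` and `order_date`, and `customers` is suggested as similar to `customer`.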

Import improvements

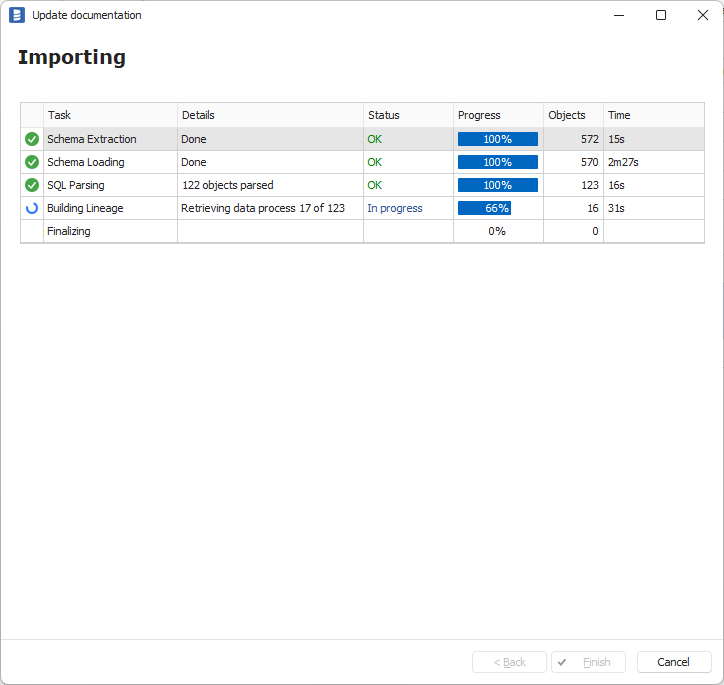

Performance

In 23.2 we redesigned our import engine and now imports should be much faster.

There are still some suboptimal elements in the current import mechanism that we will correct in subsequent versions. We plan to remove the first step (counting objects) and continue to improve loading time.

User Experience (UX)

We built better import status tracking and logging.

Interface tables import improvements

Interface tables are a set of tables in the Dataedo repository database to which you can safely write raw metadata that you would like to import into the repository. To load data from the interface tables, you need to run the Interface tables connector.

Changes:

- Import of reports

- Import of linked sources

- Additional fields for importing data lineage (including custom fields)

- Ability to build a lineage between different databases

New connectors

In 23.2 we added the following connectors:

- Custom (SQL) connectors

- MS Fabric

- Azure Synapse Pipelines

- Power BI Report Server

- SSAS Multidimensional (Cubes)

- Local disk, FTP/SFTP connector

- MySQL Core connector

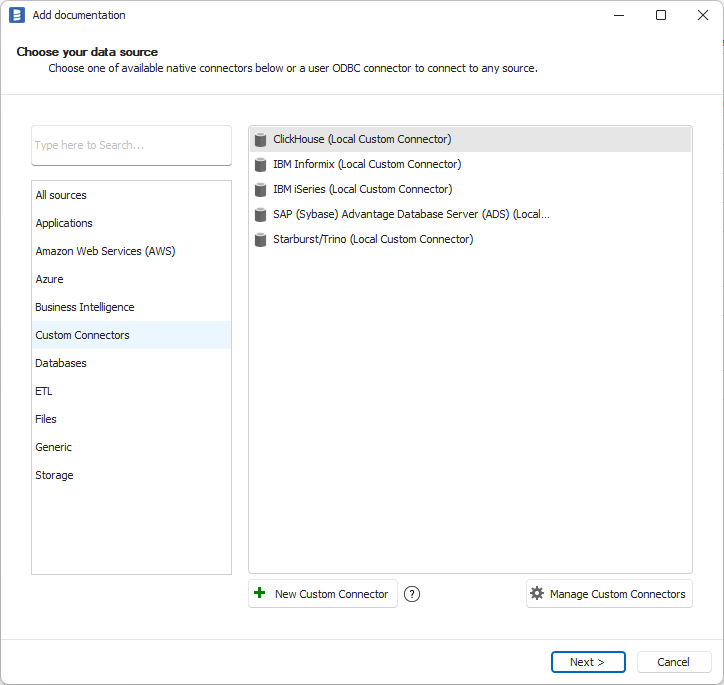

Custom (SQL) connectors

To ship connectors faster, without the need to release a new version of Desktop, we built "custom (SQL) connector" functionality. Custom connectors are saved and distributed as proprietary .dataedocon files (XML format). Connectors are built and delivered by the Dataedo team, or they can be built by users (user-built connectors currently require signing by the Dataedo team).

With 23.2 we built and shipped custom connectors for:

- ClickHouse

- IBM Informix

- IBM iSeries

- SAP Advantage Server (Sybase ADS)

- Starburst/Trino

Learn more about custom SQL connectors.

MS Fabric

We created connectors to MS Fabric, Microsoft's unified analytics platform. They allow you to connect to Power BI and Data Warehouse inside a Fabric workspace.

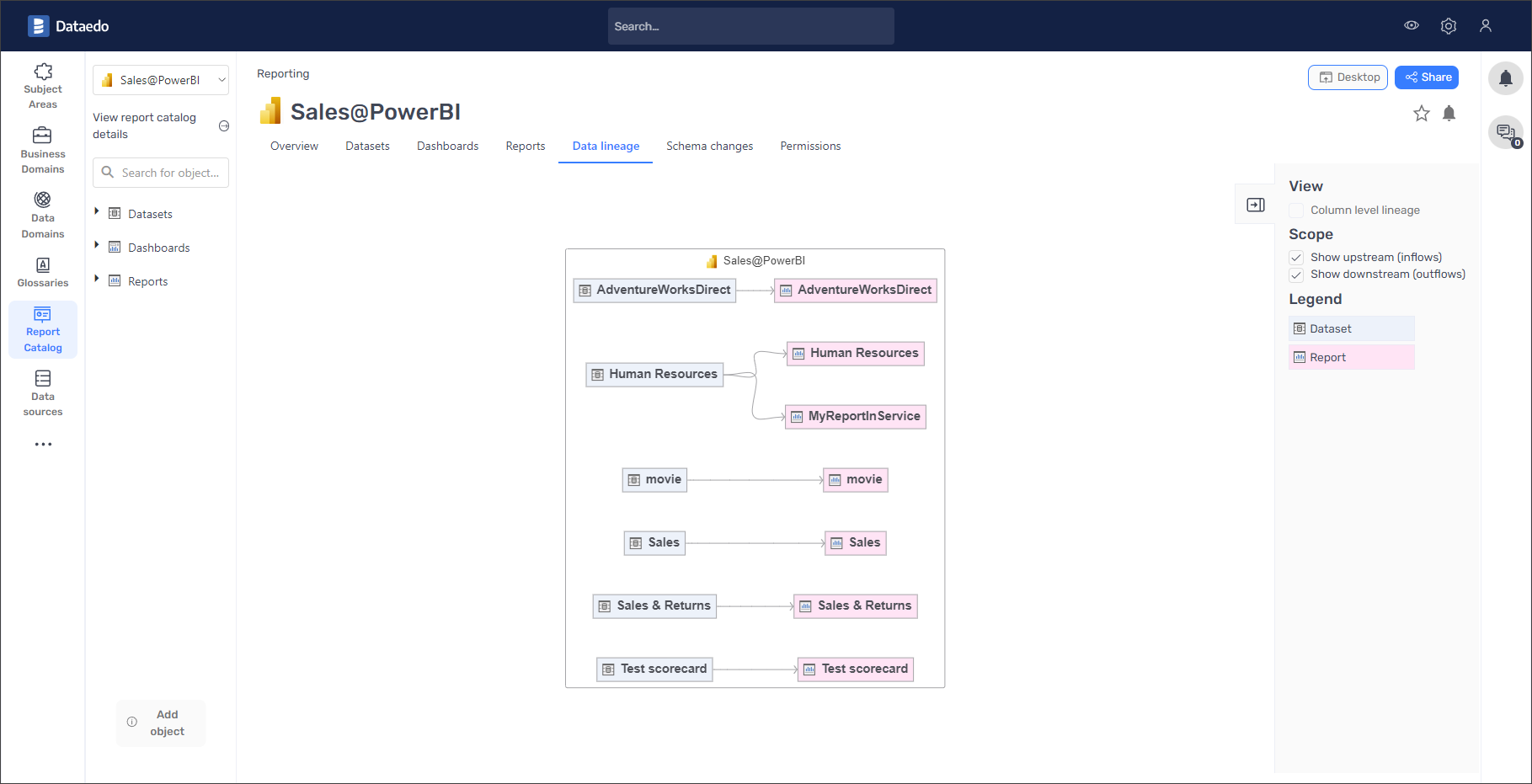

Power BI

The metadata imported with this connector is the same as with the conventional Power BI connector.

Learn more about Microsoft Fabric - Power BI.

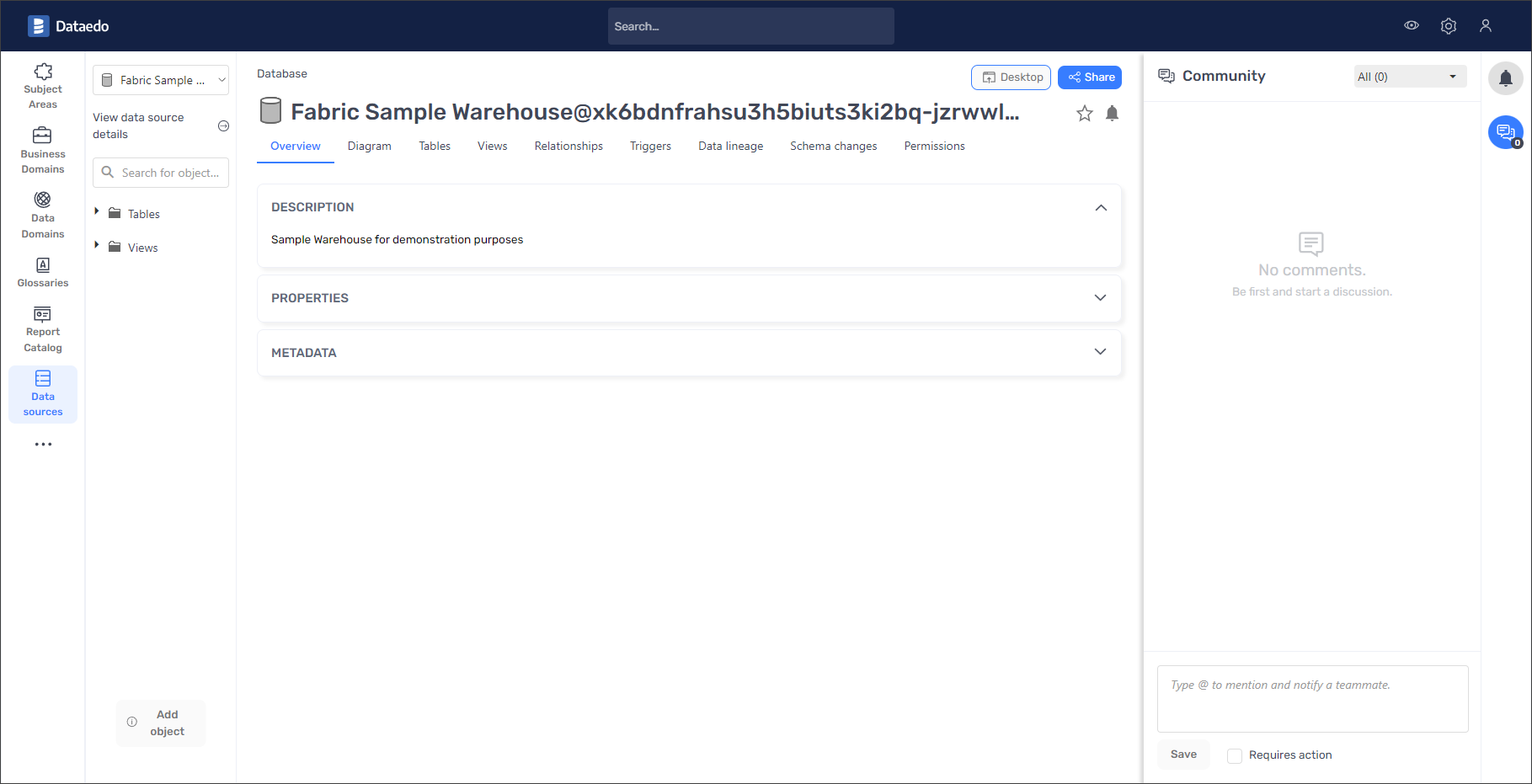

Data Warehouse

The MS Fabric Data Warehouse connector imports the same metadata as the Azure Synapse connector.

Learn more about Microsoft Fabric - Data Warehouse.

Learn more about Microsoft Fabric connector.

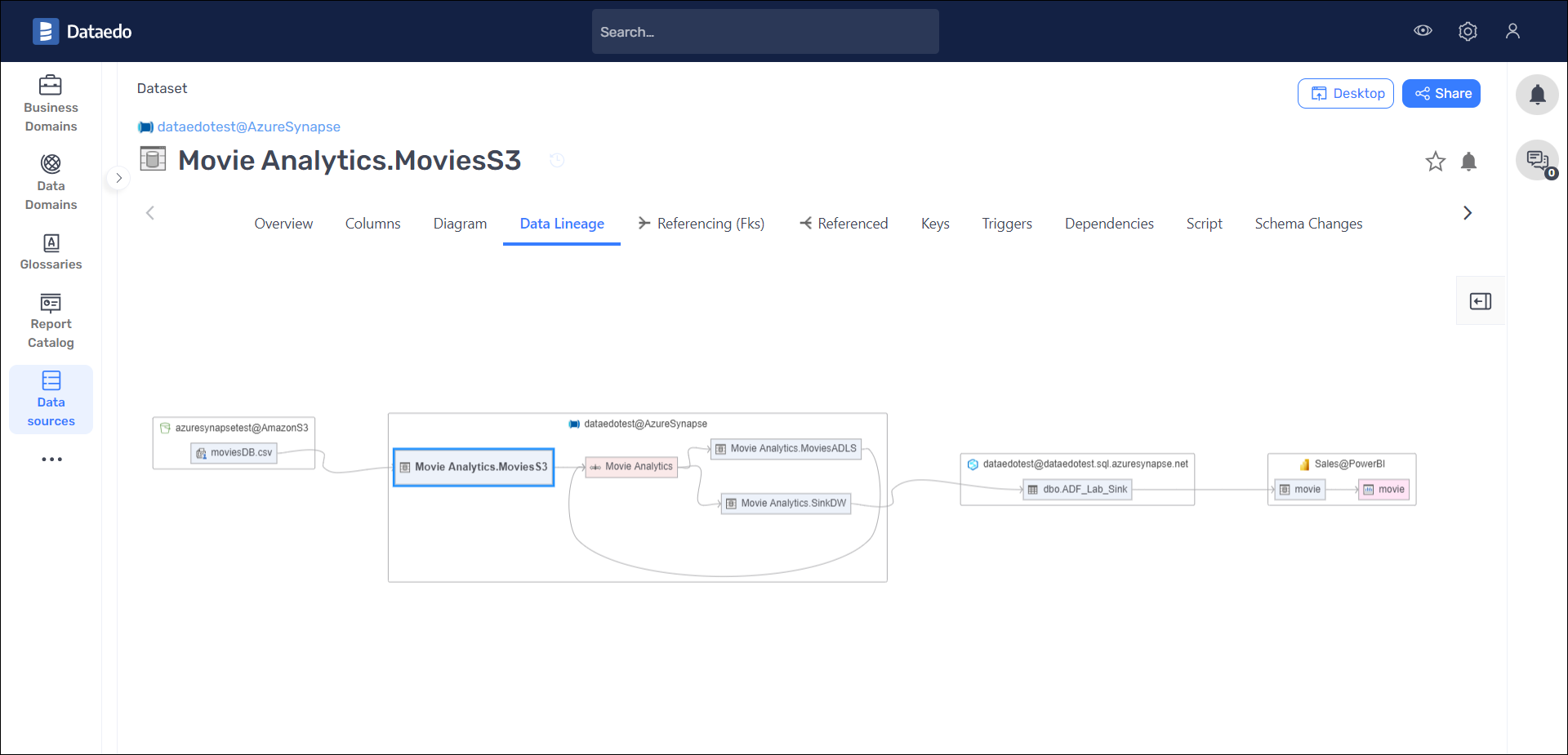

Azure Synapse Pipelines

We have created a separate connector for Pipelines in Azure Synapse Analytics (Azure Synapse Pipelines) that imports pipelines and builds lineage automatically.

What is imported:

- Pipelines

- Activities

- Source and sink datasets

- Linked services

- Lineage between pipelines, activities, datasets, and external data sources

Learn more about Azure Synapse Pipelines connector.

Power BI Report Server

We built a new Power BI connector: Power BI Report Server, the on-premises version of the Power BI service.

We import the following objects:

- Power BI Reports

- Paginated Reports

- Paginated Report Datasets

We haven't yet figured out how to import Power BI datasets and build lineage for Power BI reports, due to limitations in what the platform provides.

Learn more about Power BI Report Server connector.

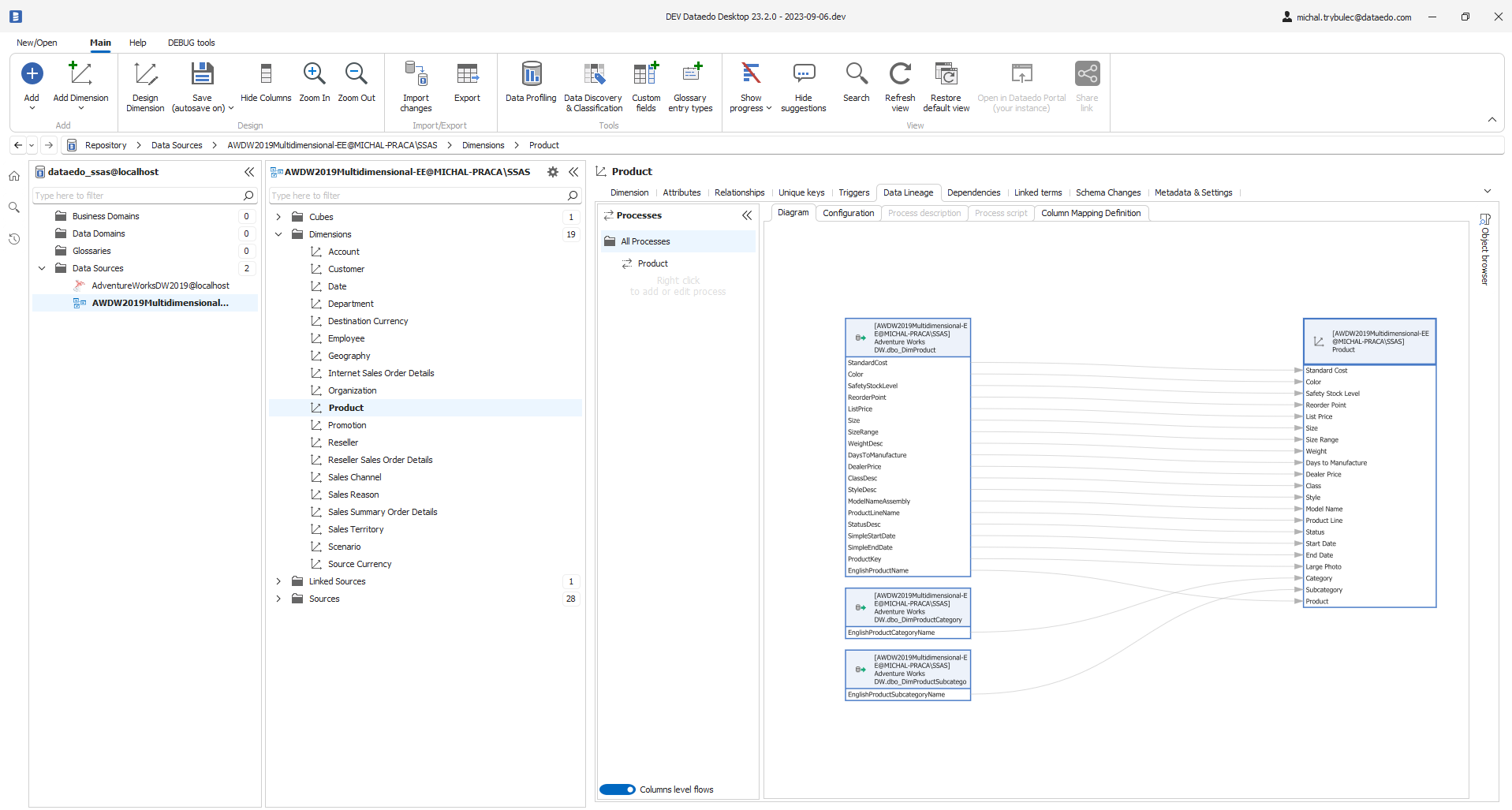

SSAS Multidimensional (Cubes)

Dataedo 23.2 comes with a brand new connector to SQL Server Analysis Services (SSAS) Multidimensional models (cubes, measures and dimensions). Previously you could connect to SSAS Tabular, which is a completely different technology.

We import the following objects:

- Cubes

- Measures

- Dimensions

- Data Source View

- Data Sources (as Linked Sources)

- Column level lineage!

Learn more about SSAS Multidimensional connector.

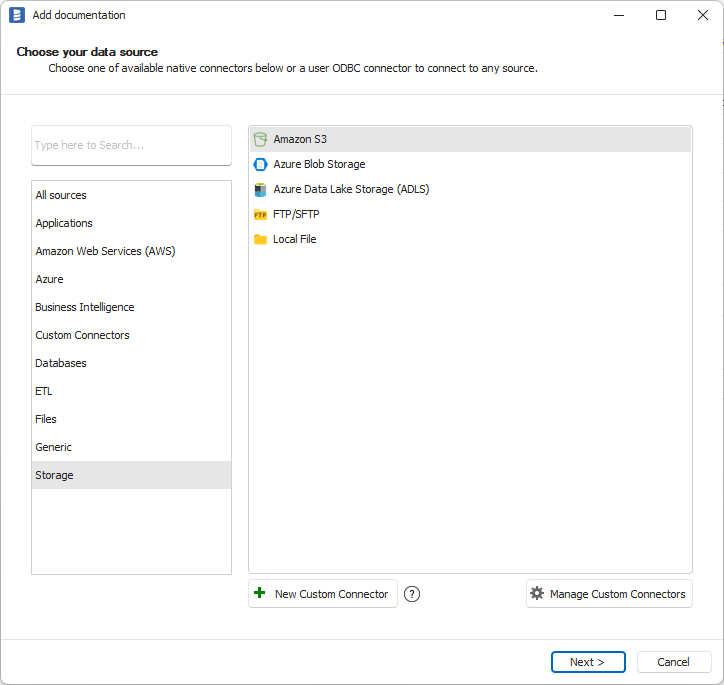

Local disk, FTP/SFTP connector

In previous versions you could import big data files from the Amazon S3, Azure Blob Storage and Azure Data Lake Storage cloud storage services. Now we have extended this list with local disk, FTP and SFTP connections.

Learn more about FTP/SFTP connector.

MySQL Core connector

There are many databases derived from MySQL, and many of them are not compatible with the most recent version of MySQL. We created a MySQL Core connector that scans only the most basic metadata, giving you the best chance of successfully connecting to all those other databases.

Learn more about MySQL Core connector.

Connector improvements

In 23.2 we made improvements to the following connectors:

- SQL Server Polybase

- Azure Synapse

- SSIS

- SSRS

- Tableau

- Power BI

- Azure Data Factory

- Snowflake

- Amazon Redshift

- Oracle

- CSV/Delimited text files

- Dbt

SQL Server Polybase

In 23.2 we added import of object-level lineage for external tables in SQL Server Polybase. Supported external table sources are: SQL Server, Oracle, MongoDB, Excel, S3, Azure Blob Storage and Azure Data Lake Gen2.

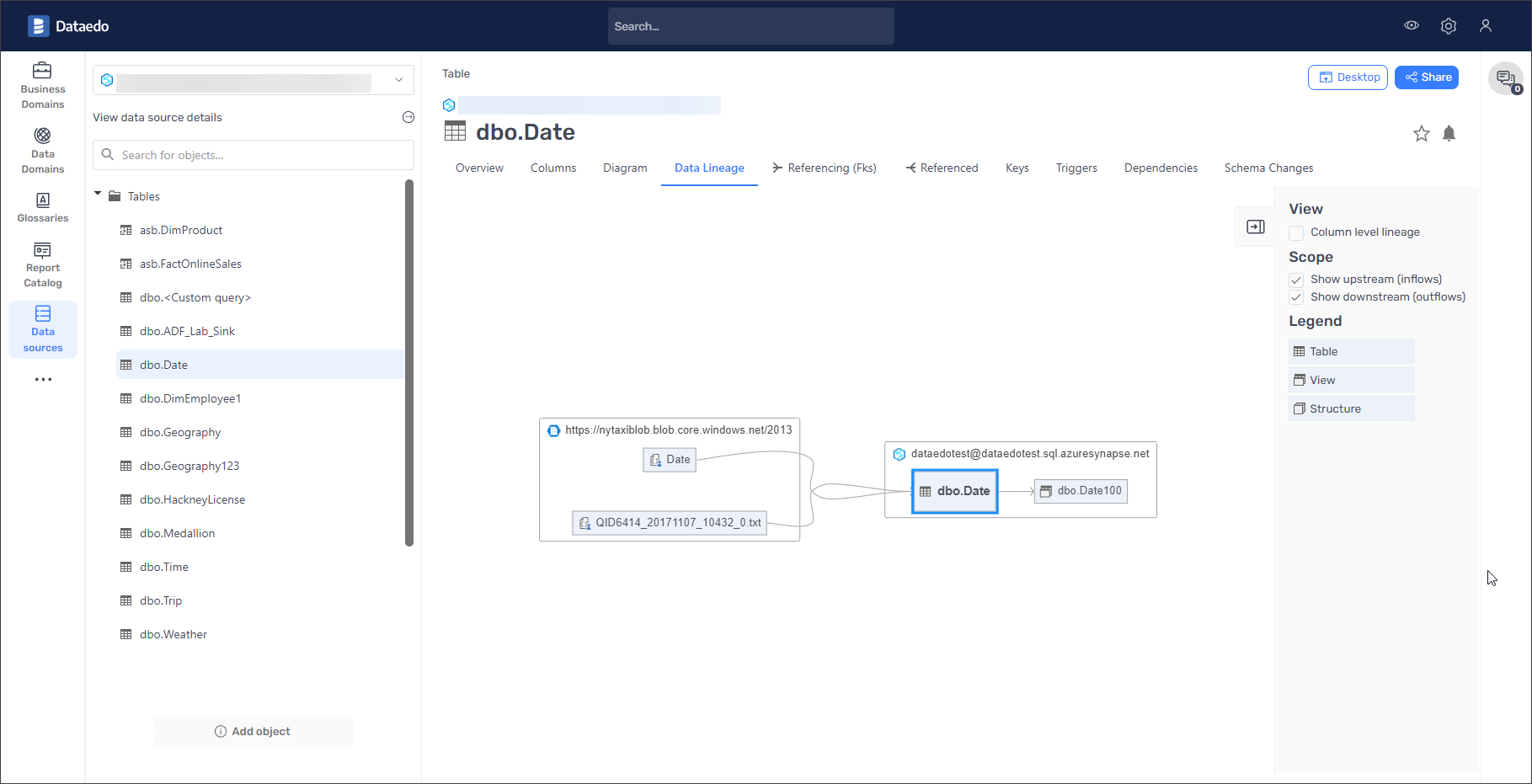

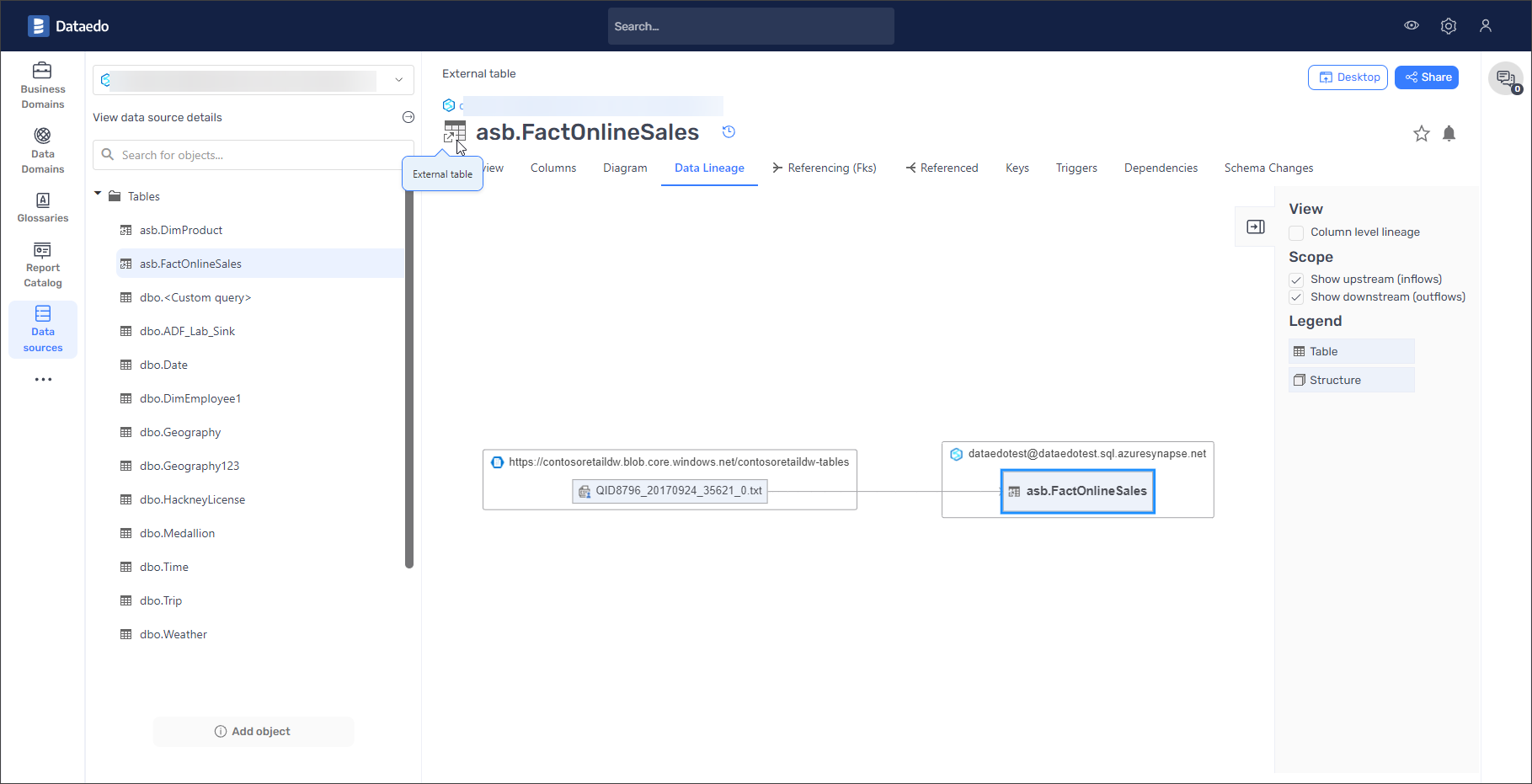

Azure Synapse

Lineage based on COPY INTO history - Synapse Dedicated instances

Dataedo creates lineage from the source file to the destination table of a COPY INTO command. This requires the source file to be documented before you import Synapse Dedicated metadata.
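Why the source file must be documented first is easiest to see in a minimal Python sketch, assuming that COPY INTO history can be reduced to (source file, target table) pairs. A lineage edge needs an existing repository object at each end, so an undocumented file cannot become a lineage source. The function and the sample paths are hypothetical and are not Dataedo's importer.

```python
def copy_into_lineage(history: list[tuple[str, str]],
                      documented_files: set[str]) -> tuple[list[tuple[str, str]], list[str]]:
    """Turn (source file, target table) pairs from COPY INTO history into lineage edges.

    A file that is not yet documented has no repository object to point from,
    so it is reported back instead of producing an edge.
    """
    edges, undocumented = [], []
    for source_file, target_table in history:
        if source_file in documented_files:
            edges.append((source_file, target_table))
        else:
            undocumented.append(source_file)
    return edges, undocumented
```

In this model, documenting the reported files and re-running the import is what fills in the missing edges.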

Lineage for external tables - Synapse Dedicated and Synapse Serverless instances

In Dataedo 23.2, external tables have full lineage from the source file to the external table.

Learn more about Azure Synapse Analytics connector.

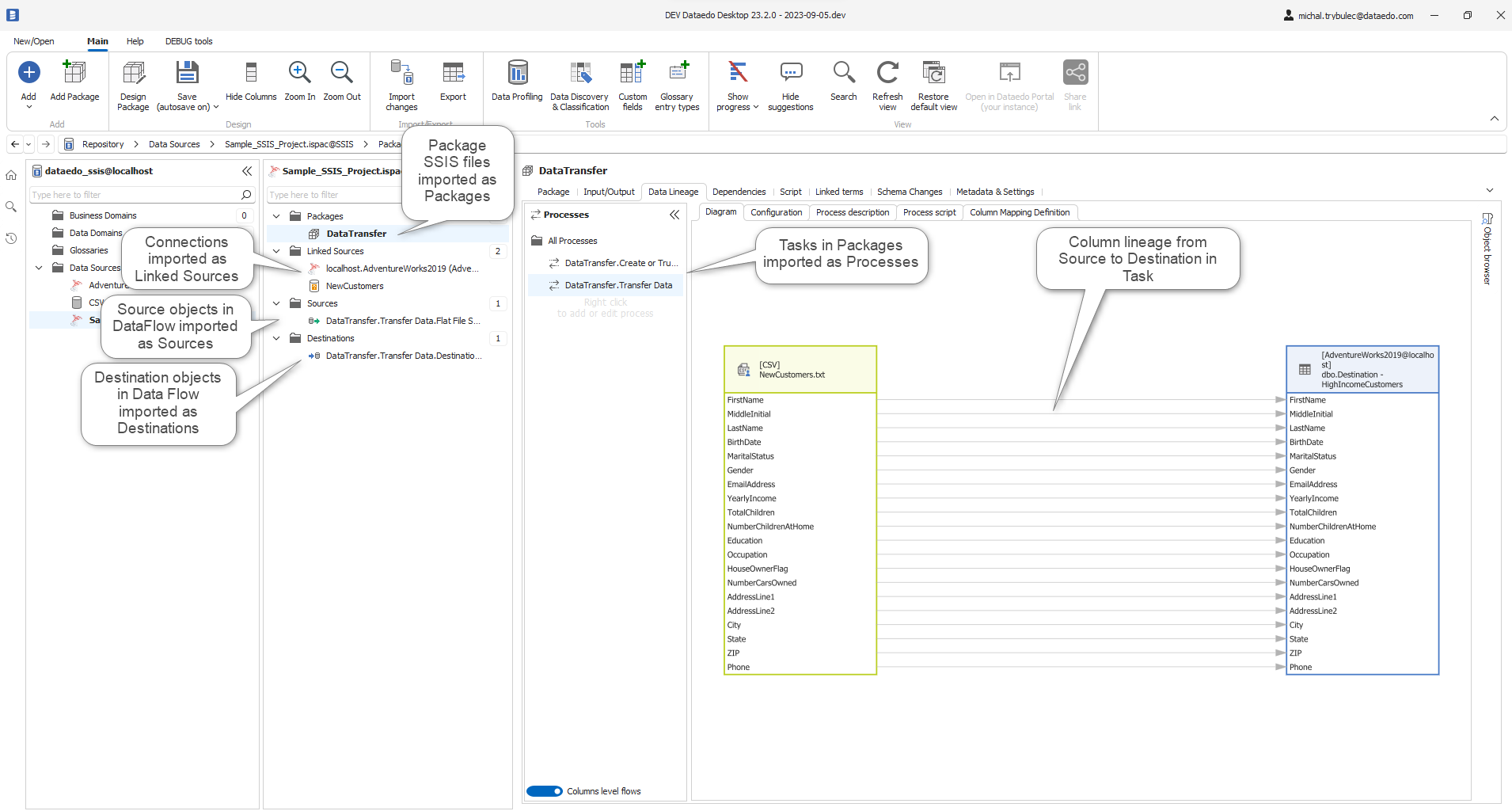

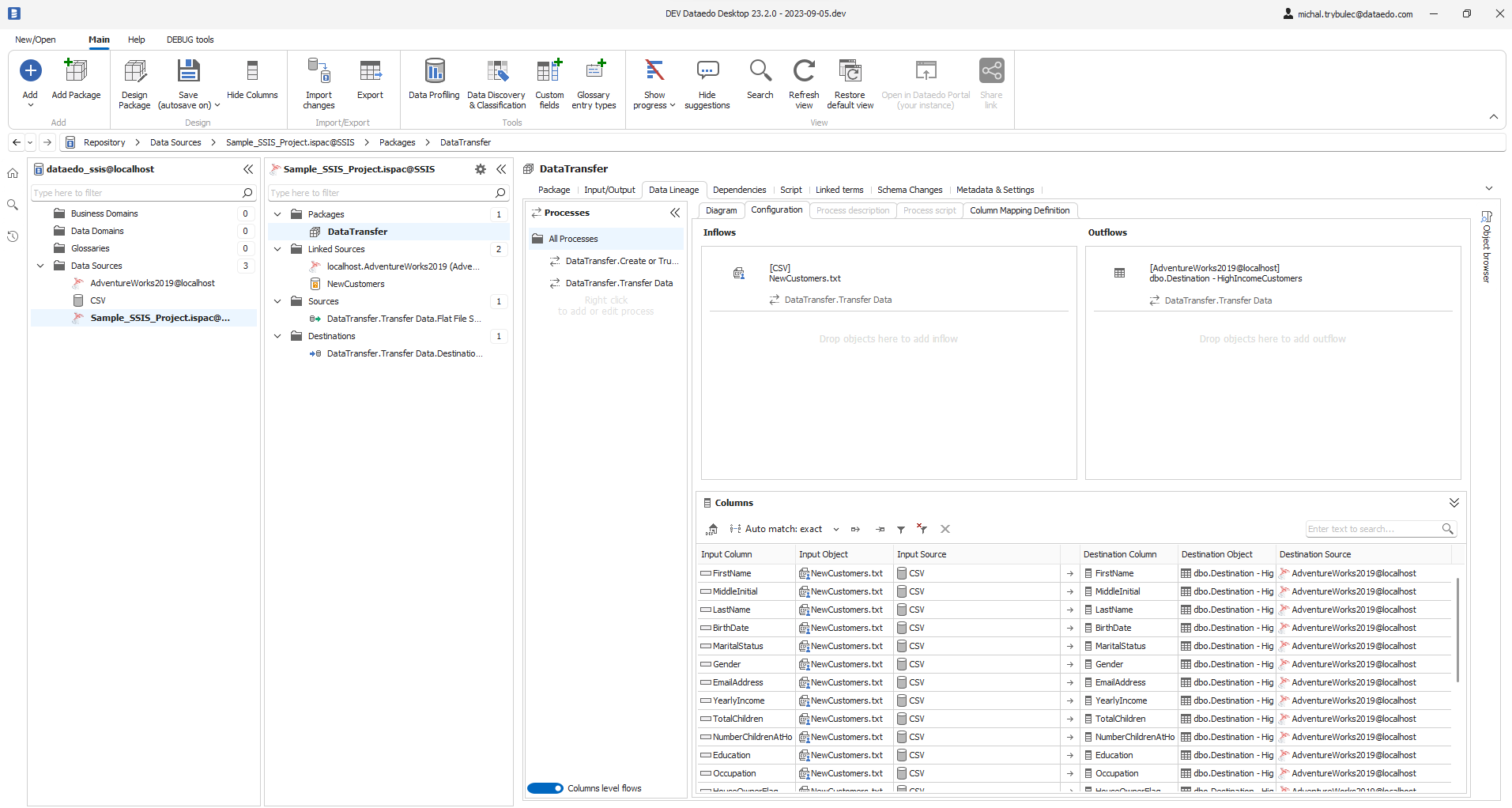

SSIS

From 23.2 Dataedo imports the following object types:

- Packages (now moved to Packages folder)

- Sources

- Destinations

- Connections (as Linked Sources)

More detailed lineage

For many types of SSIS processes, such as Balanced Data Distributor, Conditional Split, Lookup, Multicast Transform and more (see the SSIS documentation for details), Dataedo can extract column-level data lineage.

Learn more about SSIS connector.

SSRS

Learn more about SSRS connector.

Tableau (Prep Builder data flows)

In 23.2 we now import Tableau Prep Builder data flows into the repository.

Upgrades include:

- Importing of flows with lineage

- Filtering out system tables during import

- Building of column level lineage from Custom SQL (represented as datasets) with SQL parsing

- Importing of fields hierarchy in Data sources

Learn more about Tableau connector.

Power BI

We upgraded our Power BI connector:

- Added support for column-level data lineage for live connections

- Support for Datasets that have been created as a Live connection

- We added Linked Sources for better control over automatic lineage building (see Data Lineage improvements section)

- Importing dashboards as "Dashboard" rather than "Report"

Learn more about Power BI connector.

Azure Data Factory

- Importing of Linked Services (as Linked Sources)

- Importing of Data sources and destinations into Datasets

- Better way of fetching dataset columns

Learn more about Azure Data Factory connector.

Snowflake

The 23.2 Snowflake connector includes the following upgrades:

- Loading lineage from the data loading history of COPY INTO \<table>

- Loading of lineage for external tables

- Distinguishing external functions as a separate type

Learn more about Snowflake connector.

Amazon Redshift

We upgraded automated lineage creation in the Amazon Redshift connector. The upgrade includes:

- Import of external tables with lineage

- Import of COPY commands (not COPY jobs)

- Import of Data Ingestion (as Linked Sources)

Learn more about Amazon Redshift connector.

Oracle

In 23.2 we moved Oracle packages, along with their stored procedures and functions, into the Packages folder.

Learn more about Oracle connector.

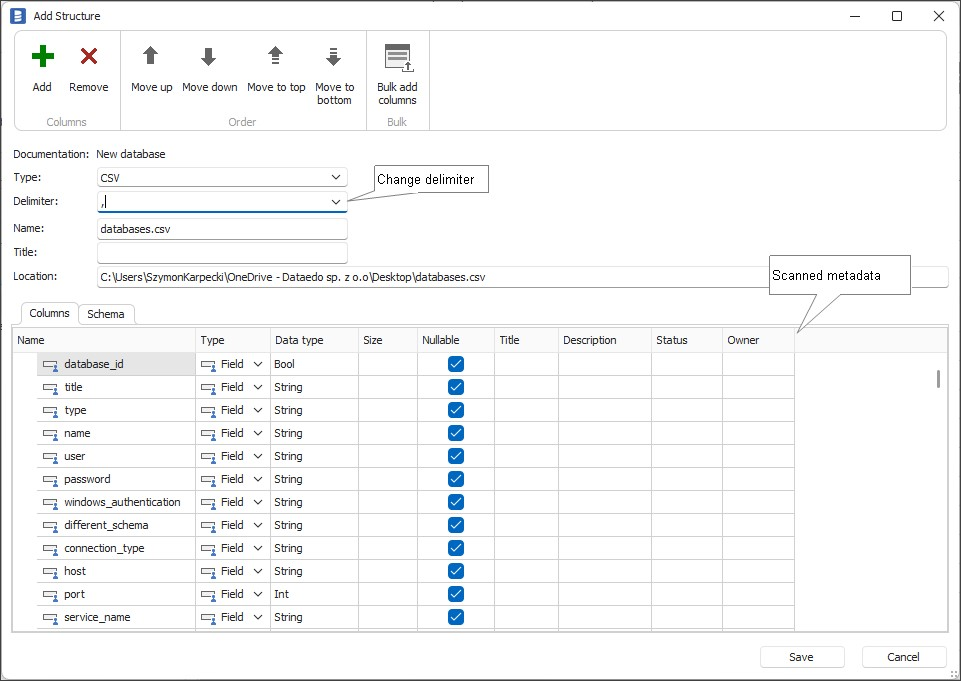

CSV/Delimited text files

Dataedo 23.1 and older supported importing CSV (comma-separated values) files, which store values separated by commas (","). Some files use the same format with other separators (";", "|", tab). Now you can provide the separator character during import.
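To show what choosing a separator changes when a delimited file is read, here is a minimal sketch using Python's standard `csv` module. It illustrates the format only. It is not Dataedo's importer, and `read_delimited` is a hypothetical helper.

```python
import csv
import io


def read_delimited(text: str, delimiter: str = ",") -> list[list[str]]:
    """Parse delimited text using the separator supplied by the user."""
    if len(delimiter) != 1:
        raise ValueError("the separator must be a single character, e.g. ';', '|' or '\\t'")
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))
```

Quoting still works with any separator: in `x|"y|z"`, read with `"|"`, the quoted `y|z` stays a single field.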

Dbt

Added an option to add manifest.json and catalog.json files manually.

Learn more about Dbt connector.

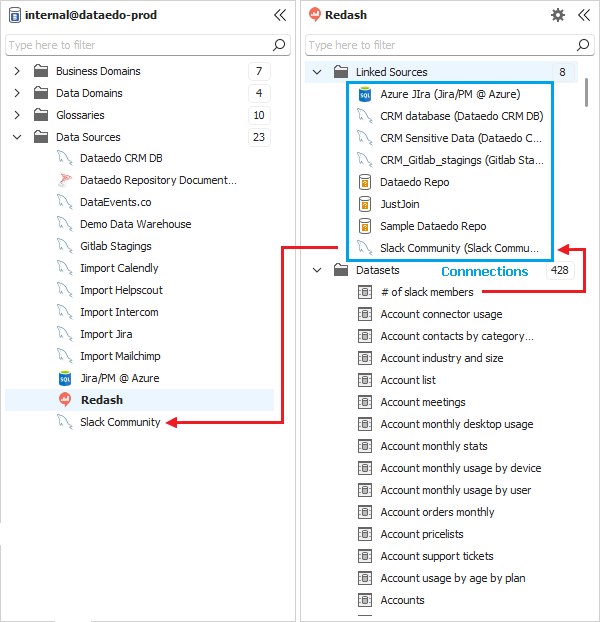

Data Lineage improvements

Lineage from SQL parsing

- Snowflake dialect support

- CTE support for PostgreSQL, MySQL & Oracle

Linked Sources

In 23.2 we introduced a new object type: Linked Source. A Linked Source represents a connection from one data source to another. In BI and ETL tools these are simply connections. Linked Sources allow you to manually identify links across various sources in the Dataedo repository when Dataedo is not able to detect them automatically (for instance, when one source identifies a server by URL and another by IP address). This gives you more control over automated lineage creation and increases the scope of what is created automatically.
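The URL-versus-IP example can be sketched in a few lines of Python. This is a simplified model, not Dataedo's matching logic. It assumes that automatic matching compares normalized host names and that manually defined links are checked first. `resolve_source`, `documented` and `linked_sources` are hypothetical names.

```python
from typing import Optional
from urllib.parse import urlparse


def normalize_host(address: str) -> str:
    """'https://SQL01.corp.local:1433/db' -> 'sql01.corp.local'; '10.0.0.5:1433' -> '10.0.0.5'."""
    parsed = urlparse(address if "://" in address else "//" + address)
    return (parsed.hostname or address).lower()


def resolve_source(address: str, documented: dict[str, str],
                   linked_sources: dict[str, str]) -> Optional[str]:
    """Return the documented source a connection points to, or None if unknown.

    documented:     lowercase host name -> documented data source (automatic matching)
    linked_sources: manually defined address -> data source (checked first)
    """
    host = normalize_host(address)
    manual = {normalize_host(addr): source for addr, source in linked_sources.items()}
    return manual.get(host) or documented.get(host)
```

A BI report connecting to `10.0.0.5:1433` cannot be matched automatically to a database documented as `sql01.corp.local`. After a manual link from `10.0.0.5` to that database is added, the connection resolves and lineage can be built.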

Selectable SQL dialect

Now you can tell the parser and lineage builder which SQL dialect to use. This gives you more control over parsing and lineage automation, and it lets you apply one of the existing parsers to sources for which we don't provide a dedicated SQL parser.

You can assign SQL dialect to:

- Data source

- Linked source - link to data source from another data source

- Data Lineage process

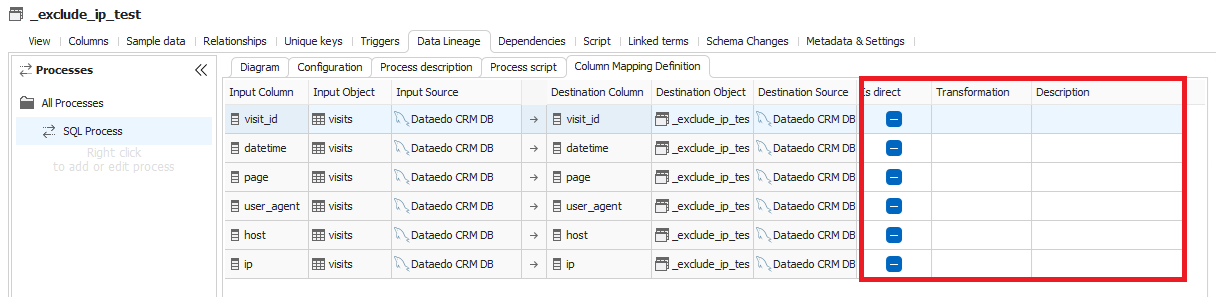

Column level lineage fields

From 23.2 you can add additional metadata to column mappings:

- Description - you can provide a description for each column mapping

- Transformation (as text)

- Custom fields

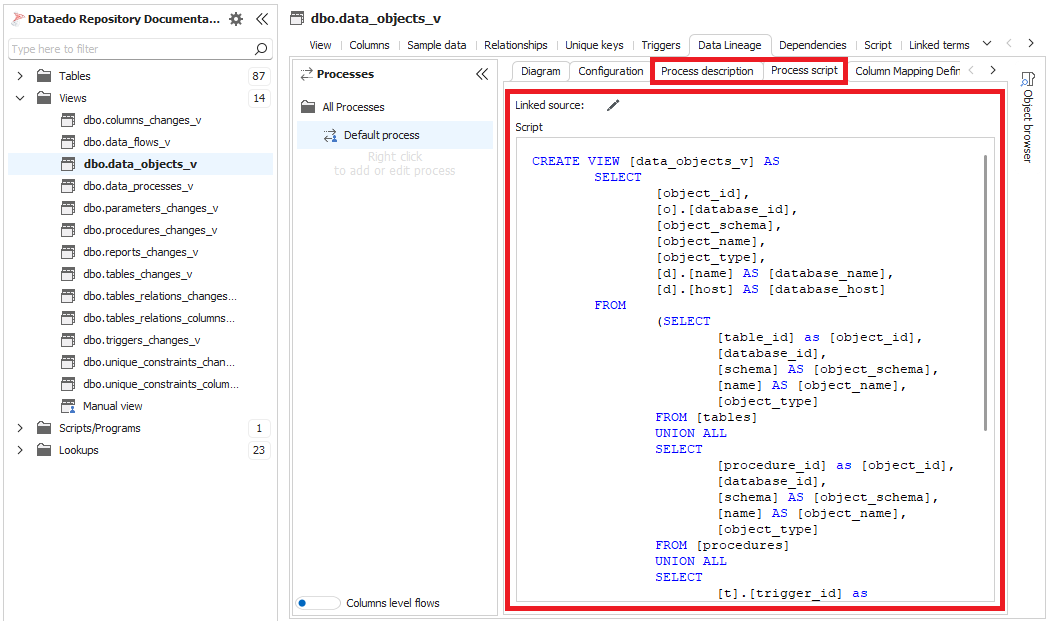

Process level fields

We added a number of fields to the process level of the lineage:

- Description - you can describe each process

- Script - script is imported automatically or added manually

- Linked Source
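Taken together, the column-level and process-level fields above can be pictured as a simple record structure. The following Python dataclasses are a hypothetical model chosen for illustration, and the field names are assumptions rather than Dataedo's repository schema.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ColumnMapping:
    """One source -> target column mapping with the column-level fields."""
    source: str
    target: str
    description: str = ""
    transformation: str = ""  # stored as plain text, never executed
    custom_fields: dict[str, str] = field(default_factory=dict)


@dataclass
class LineageProcess:
    """A lineage process with the process-level fields."""
    name: str
    description: str = ""
    script: str = ""                     # imported automatically or pasted in manually
    linked_source: Optional[str] = None  # connection the process reads through
    column_mappings: list[ColumnMapping] = field(default_factory=list)
```

For example, a `load_fact_sales` process can carry its script, plus one mapping from `staging.orders.amount` to `dw.fact_sales.amount_eur` with the transformation text `amount * fx_rate` and an `Owner` custom field.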

Marking as deleted

The automated data lineage process now marks processes and object and column mappings as deleted rather than removing them. This is because processes and column mappings can now hold extra manual metadata that would otherwise be lost.
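This "mark instead of remove" approach is a soft delete. Here is a minimal Python sketch, assuming that each automated run produces the set of mapping keys it detected. `Mapping` and `sync_mappings` are hypothetical names, not Dataedo internals.

```python
from dataclasses import dataclass


@dataclass
class Mapping:
    key: str                # e.g. "staging.orders.amount -> dw.fact_sales.amount"
    description: str = ""   # manual metadata that a hard delete would destroy
    deleted: bool = False


def sync_mappings(stored: dict[str, Mapping], detected: set[str]) -> None:
    """Reconcile stored mappings with the ones found by the latest automated run."""
    for key, mapping in stored.items():
        # Flag rather than remove; a mapping that reappears is simply un-flagged.
        mapping.deleted = key not in detected
    for key in detected.difference(stored):
        stored[key] = Mapping(key)
```

If a mapping disappears from one run and comes back in the next, its manually written description is still there.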

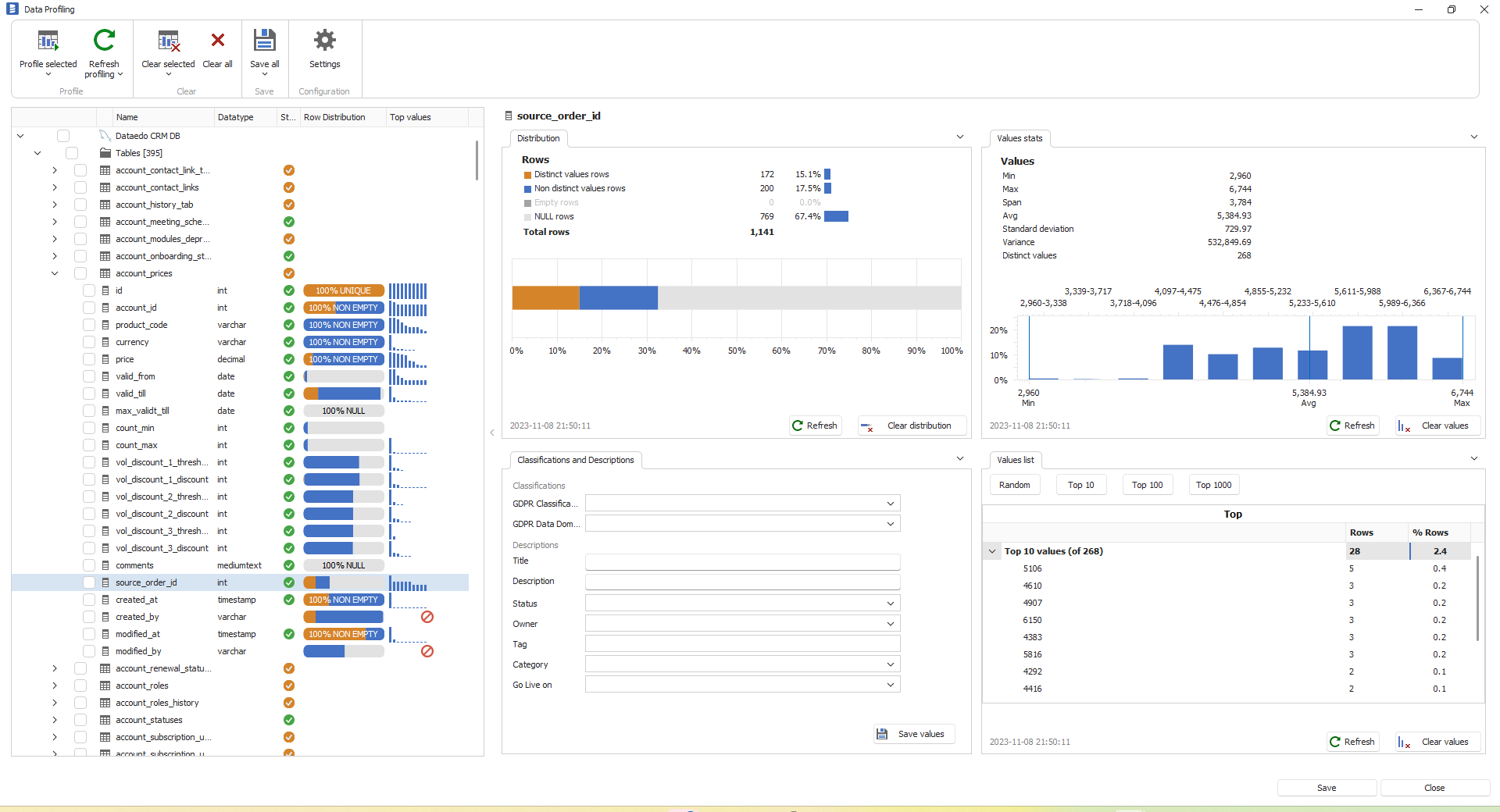

Data Profiling improvements

Refreshed UI

In 23.2 we refreshed the UI of data profiling window:

- Ribbon options have been grouped

- Sparklines and progress icons have been updated

- Context menus have been updated

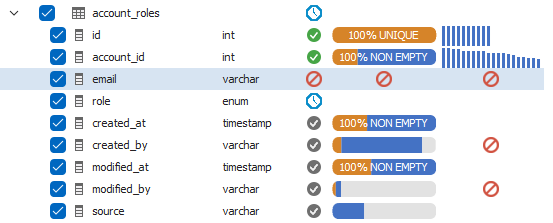

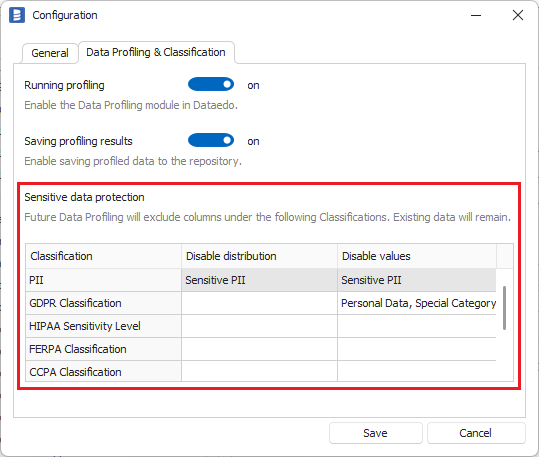

Blocking classified fields

Now Dataedo will not profile or save data for columns that have a classification.

You can define which classification labels disable which profiling steps.
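A label-to-steps rule set like this can be modeled as a simple lookup. In the minimal Python sketch below, the label names, step names and `allowed_steps` function are hypothetical and do not come from Dataedo's configuration. A column's allowed steps are all steps minus the union of what its labels block.

```python
# Hypothetical configuration: which profiling steps each classification label disables.
PROFILING_STEPS = {"row_count", "null_count", "distinct_count", "min_max", "top_values", "sample_values"}
BLOCKED_BY_LABEL: dict[str, set[str]] = {
    "PII": {"min_max", "top_values", "sample_values"},
    "Confidential": {"sample_values"},
}


def allowed_steps(labels: set[str]) -> set[str]:
    """Profiling steps that may still run on a column carrying these classification labels."""
    blocked: set[str] = set()
    for label in labels:
        blocked |= BLOCKED_BY_LABEL.get(label, set())
    return PROFILING_STEPS - blocked
```

Taking the union means a column with several labels gets the strictest combination of restrictions.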

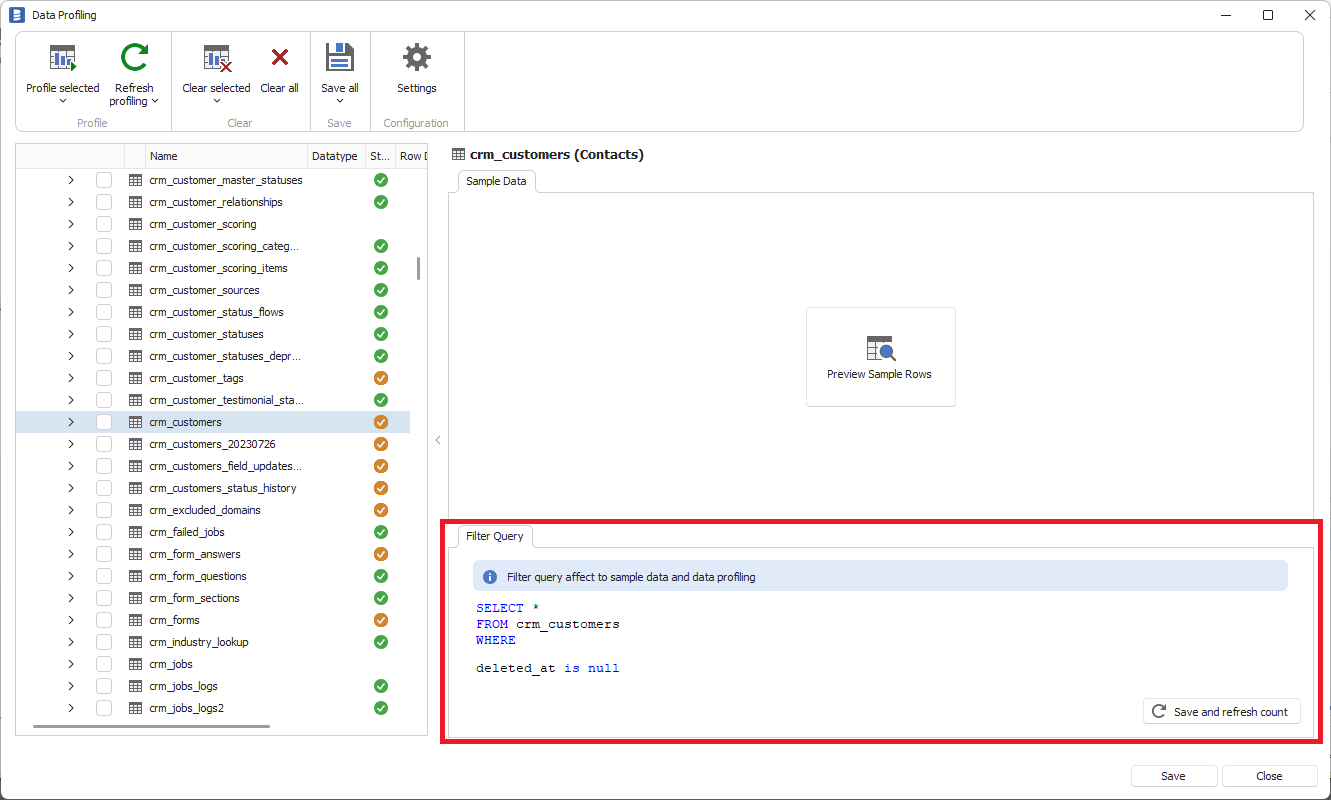

Filtering tables

Now Dataedo allows you to add a WHERE clause to tables (and views) to limit the rows that will be profiled.
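A filter like this simply restricts the rows that the profiling queries read. The following minimal sketch uses Python's built-in `sqlite3` as a stand-in database. The `profile_column` helper and its statistics are hypothetical, not the queries Dataedo runs.

```python
import sqlite3
from typing import Optional


def profile_column(conn: sqlite3.Connection, table: str, column: str,
                   where: Optional[str] = None) -> dict:
    """Basic column statistics, optionally limited to rows matching a WHERE filter."""
    col, tbl = f'"{column}"', f'"{table}"'
    sql = (f"SELECT COUNT(*), COUNT({col}), COUNT(DISTINCT {col}), MIN({col}), MAX({col}) "
           f"FROM {tbl}")
    if where:
        # The filter is trusted configuration text, appended verbatim.
        sql += f" WHERE {where}"
    rows, non_null, distinct, low, high = conn.execute(sql).fetchone()
    return {"rows": rows, "nulls": rows - non_null, "distinct": distinct, "min": low, "max": high}
```

With a filter such as `order_date >= '2023-06-01'`, only recent rows are counted, which keeps profiling of large tables fast.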

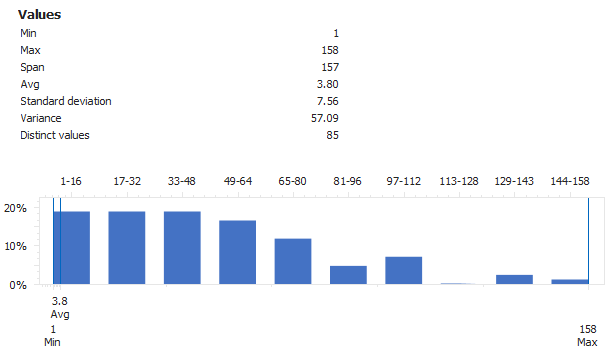

Value distribution chart

Dataedo now builds a value distribution chart for numeric columns and a length distribution chart for text columns.
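Both charts are histograms. The following is a minimal Python sketch of the underlying counts, assuming equal-width bins for numbers and exact lengths for text. The bin count and function names are illustrative choices, not Dataedo's settings.

```python
from collections import Counter


def value_distribution(values, bins: int = 4) -> list[tuple[float, int]]:
    """Equal-width histogram of numeric values as (bin lower edge, count) pairs."""
    nums = [v for v in values if v is not None]
    if not nums:
        return []
    low, high = min(nums), max(nums)
    width = (high - low) / bins or 1.0  # all-equal values: avoid a zero-width bin
    counts = Counter(min(int((v - low) / width), bins - 1) for v in nums)
    return [(low + i * width, counts[i]) for i in range(bins)]


def length_distribution(values) -> dict[int, int]:
    """Number of text values of each length, ordered by length."""
    return dict(sorted(Counter(len(s) for s in values if s is not None).items()))
```

For the values 0 to 8 in four bins of width 2, the counts are 2, 2, 2 and 3, because the maximum value is placed in the last bin.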

Sample data as a tab

We added a Sample data tab directly to the table form, so it is easier to preview a table's sample rows. This information is stored only in Desktop memory and is not saved in the repository or shared with other users.

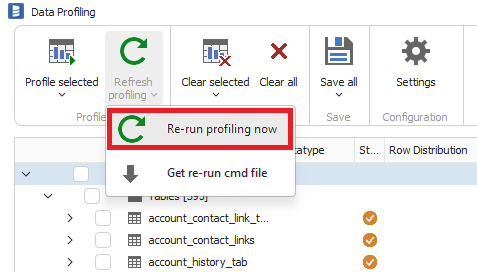

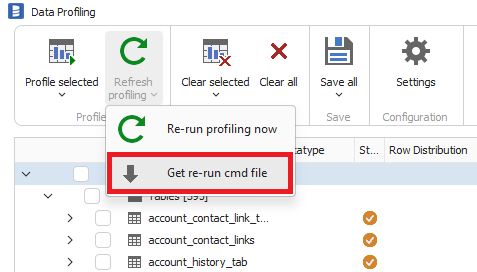

Re-run profiling

Now you can re-run profiling for the same set of tables, columns and settings of profiling scope with one button.

Running profiling from command line

You can also re-run profiling from the command line, which means you can schedule profiling operations.

Other improvements

- You can now also profile saved SQL Queries

- Profiling skips empty tables

Dataedo Portal

This section lists changes in Dataedo Portal (formerly known as Dataedo Web Catalog).

Preview pane

The Dataedo 23.2 Portal has a convenient preview pane that presents basic information about the selected object (in the example below, a column in a table) without opening a new page. Click the arrow next to the name to go to the object's page.

History of changes in Portal

Since versions 10.2 and 10.3 we have been saving changes to descriptions, titles and custom fields made in both Desktop and Portal, and you could view this history for each field in Desktop. Now we have added this capability to Portal.

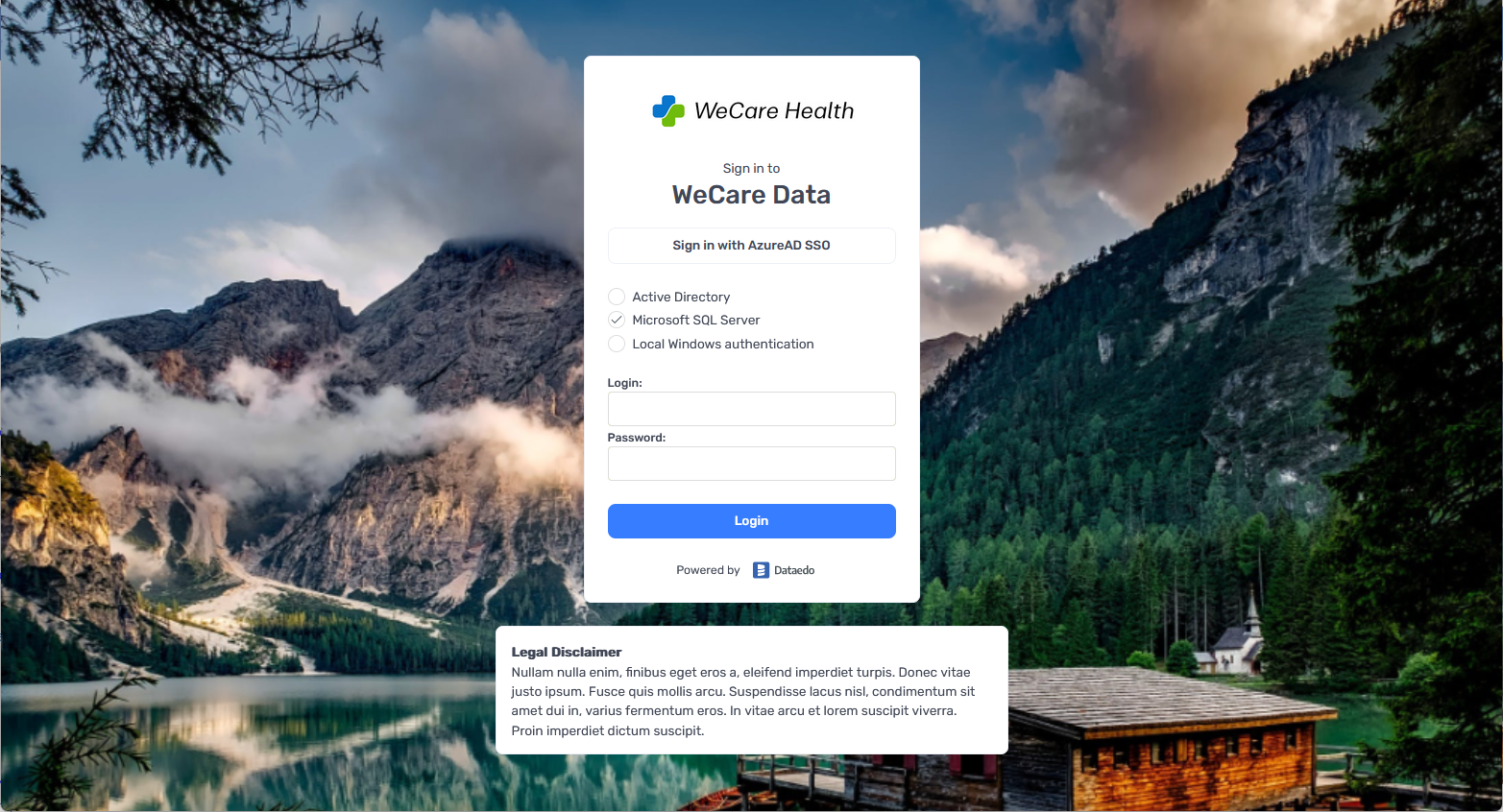

Customization

From now on, you can customize the following elements of Dataedo Portal:

- Logo

- Login page background

- Login page additional information box

- Portal name

- Home page additional information box

To customize the Portal, log in as an admin and go to System Settings > Customization.

Search redesigned

New search is faster and groups different types of objects in separate tabs.

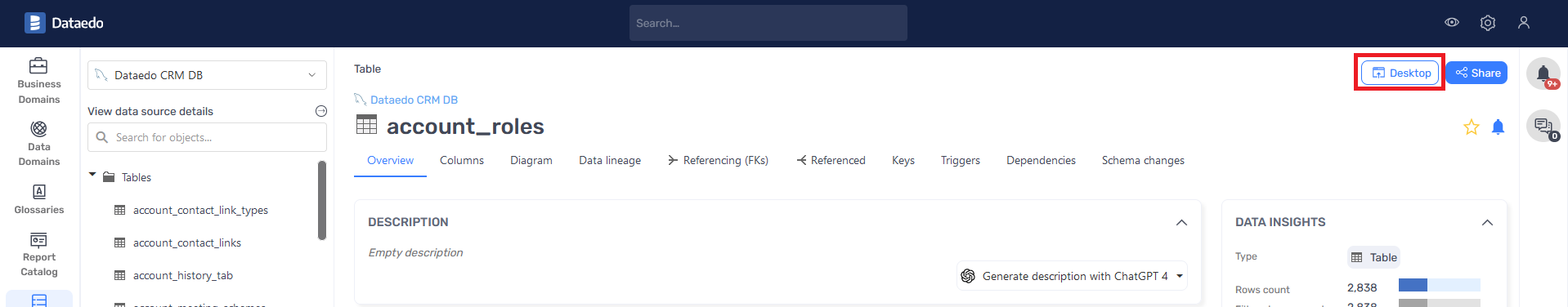

Open in Desktop

In 23.1 we added an "Open in Web" button to Dataedo Desktop that allows you to quickly open the same page in Dataedo Portal (formerly Dataedo Web). Now we have added a similar "Open in Desktop" button to Portal that opens the same object in Dataedo Desktop.

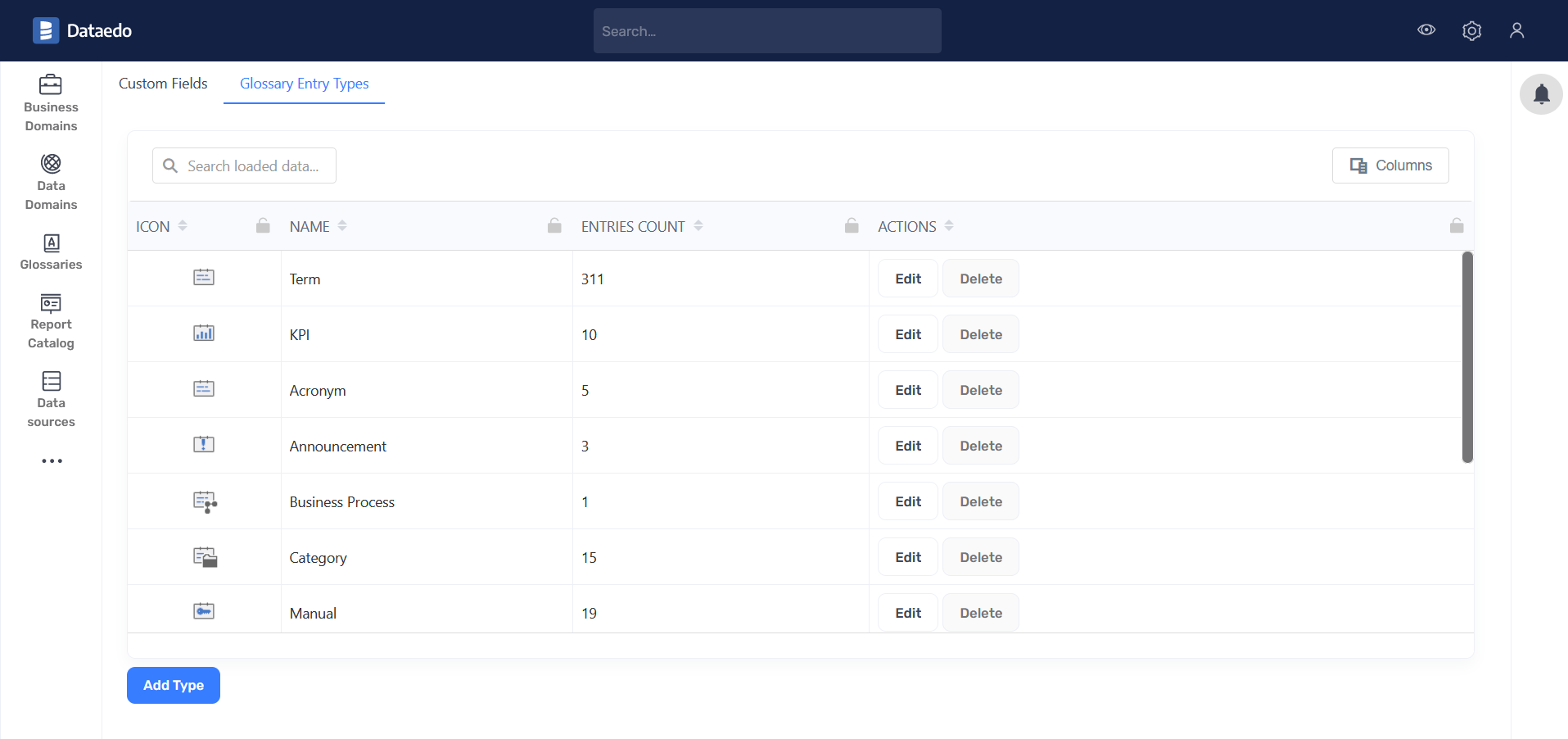

Business Glossary types configuration

Now, you can add/edit glossary entry types directly in the Portal.

Improved code diff

In the schema change tracking report we improved the code diff:

- Before and after code are aligned to the same lines

- Marking removed and new fragments in red/green
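Aligning "before" and "after" code line by line is a classic diff task. This minimal sketch uses Python's standard `difflib.SequenceMatcher`, which is not necessarily the algorithm the Portal uses. Each row pairs an old line with a new line and carries a tag that a UI could color: `-` red, `+` green, `~` changed. `aligned_diff` is a hypothetical helper.

```python
import difflib


def aligned_diff(before: str, after: str) -> list[tuple[str, str, str]]:
    """Align old and new code line by line; tag is ' ', '-', '+', or '~' (changed)."""
    a, b = before.splitlines(), after.splitlines()
    rows = []
    for op, i1, i2, j1, j2 in difflib.SequenceMatcher(None, a, b).get_opcodes():
        if op == "equal":
            rows += [(" ", x, y) for x, y in zip(a[i1:i2], b[j1:j2])]
        elif op == "delete":
            rows += [("-", x, "") for x in a[i1:i2]]
        elif op == "insert":
            rows += [("+", "", y) for y in b[j1:j2]]
        else:  # "replace": pair lines up, padding the shorter side with blanks
            old, new = a[i1:i2], b[j1:j2]
            for k in range(max(len(old), len(new))):
                rows.append(("~", old[k] if k < len(old) else "", new[k] if k < len(new) else ""))
    return rows
```

Padding the shorter side with blank lines keeps unchanged code that follows an edit on the same row in both columns. Python's `difflib.HtmlDiff` produces a similar side-by-side view as an HTML table.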

Dataedo Desktop

This section lists changes in Dataedo Desktop.

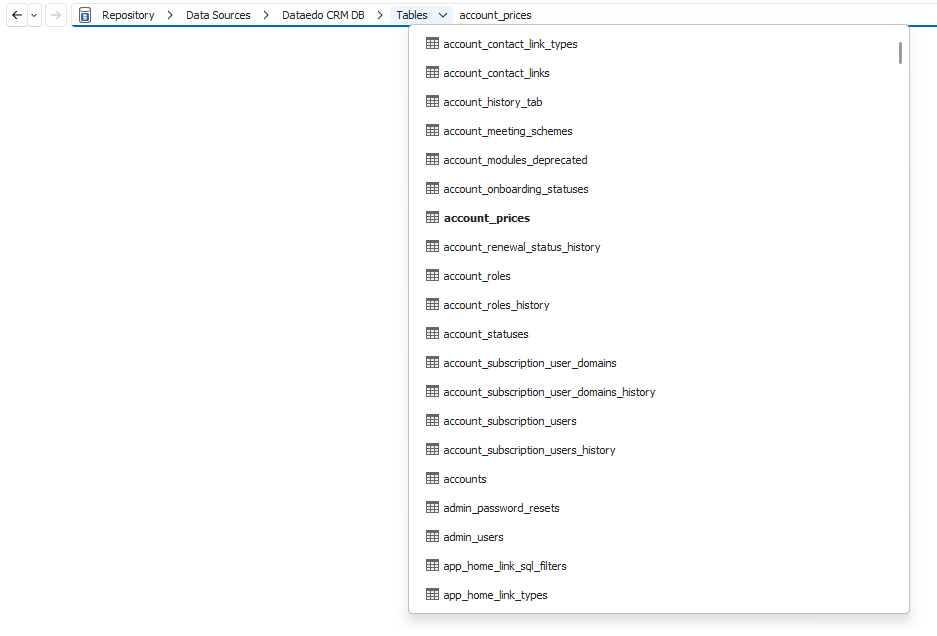

New navigation and double click

In 23.2, Desktop's navigation got a complete overhaul. The repository explorer now has two layers, and items are grouped into categories, which makes navigation easier.

Now you have to double-click an object to open it.

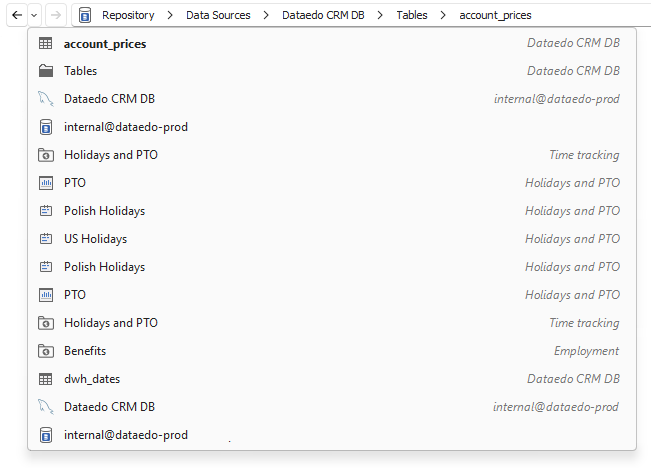

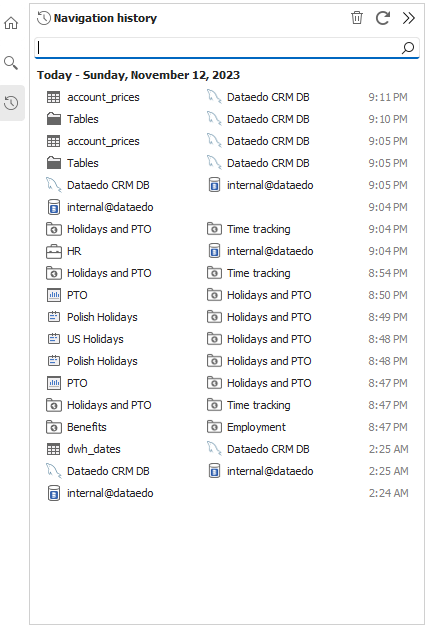

Breadcrumbs, history and Back button

A long-awaited new feature: the option to go back to the last visited object. We have made a full package: Windows-like breadcrumbs (which in the next version will allow copy and paste), a Back button and a history of viewed objects.

You can navigate the repository in the breadcrumbs:

Browser-like stacked history:

Full history (for the current session):

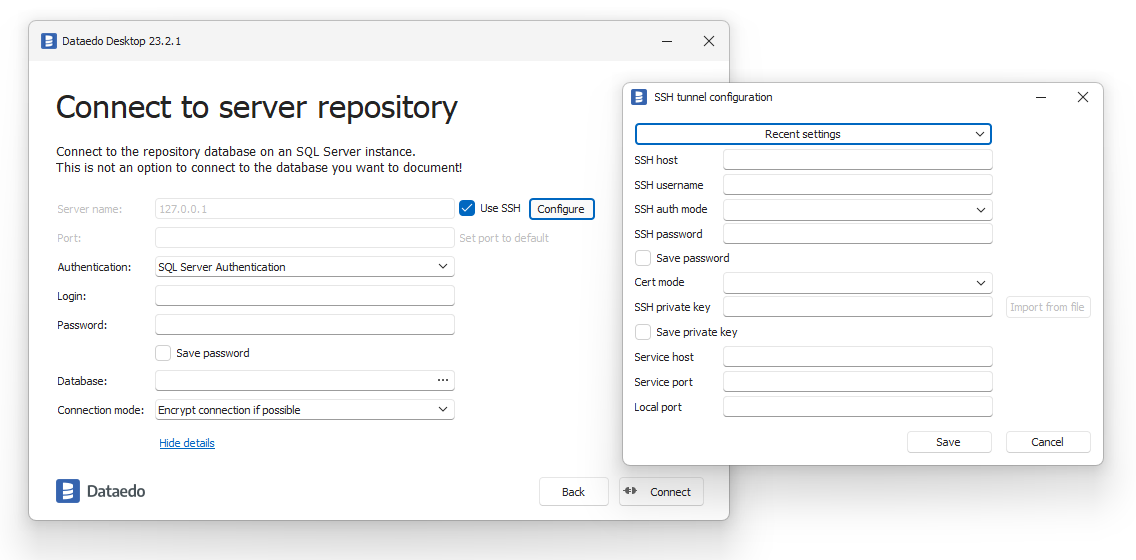

Secure repository connection

To make hosting Dataedo Portal and the repository in the cloud more secure, Desktop now allows you to connect to the repository over an SSH tunnel.

Before you upgrade

Before you upgrade consider the following:

- Upgrade requirements - this release requires upgrading the following:

  - Repository

  - Dataedo Portal (formerly Web Catalog)

  - Dataedo Desktop

- Check your plan - not all features added in 23.2 are available in all plans. See the overview of plans and check your plan in Dataedo Account.

Piotr Kononow
