Azure Data Factory (ADF) is a cloud ETL tool developed by Microsoft. It lets you build complex ETL pipelines using a drag-and-drop web UI.

This article describes the capabilities and limitations of the Dataedo connector for Azure Data Factory and walks you through the import process.

Supported elements and metadata

Objects imported

- Pipelines
  - Name
  - Folder (as schema)
  - Activities
    - Name
    - Data lineage (object and column level, details below)
- Datasets
  - Name
  - Folder (as schema)
  - Columns (if linked service is imported to Dataedo)

Dataedo imports all the activities as data processes within pipelines. Nested activities like ForEach, Until, IfCondition, and Switch are also imported with all the activities inside them.
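To illustrate what importing nested activities involves, here is a minimal sketch that walks a pipeline definition and lists every activity, including those inside ForEach, Until, IfCondition, and Switch. It assumes the pipeline is available as exported ADF JSON; the sample pipeline and the helper names `child_activities` and `walk_activities` are hypothetical, and this is not a description of how Dataedo itself is implemented.

```python
from typing import Any, Dict, Iterator, List, Tuple

Activity = Dict[str, Any]


def child_activities(activity: Activity) -> List[Activity]:
    """Return the activities nested directly inside a control-flow activity."""
    props = activity.get("typeProperties", {})
    kind = activity.get("type")
    if kind in ("ForEach", "Until"):
        return list(props.get("activities", []))
    if kind == "IfCondition":
        return list(props.get("ifTrueActivities", [])) + list(
            props.get("ifFalseActivities", [])
        )
    if kind == "Switch":
        nested: List[Activity] = []
        for case in props.get("cases", []):
            nested.extend(case.get("activities", []))
        nested.extend(props.get("defaultActivities", []))
        return nested
    return []  # not a container activity


def walk_activities(
    activities: List[Activity], depth: int = 0
) -> Iterator[Tuple[int, str, str]]:
    """Yield (nesting depth, name, type) for each activity, depth-first."""
    for activity in activities:
        yield depth, activity["name"], activity["type"]
        yield from walk_activities(child_activities(activity), depth + 1)


# Hypothetical pipeline definition used only for illustration.
pipeline = {
    "name": "LoadSales",
    "properties": {
        "activities": [
            {
                "name": "ForEachFile",
                "type": "ForEach",
                "typeProperties": {
                    "activities": [
                        {"name": "CopyFile", "type": "Copy", "typeProperties": {}},
                        {
                            "name": "CheckRows",
                            "type": "IfCondition",
                            "typeProperties": {
                                "ifTrueActivities": [
                                    {"name": "LogSuccess", "type": "SetVariable", "typeProperties": {}}
                                ],
                                "ifFalseActivities": [
                                    {"name": "LogFailure", "type": "SetVariable", "typeProperties": {}}
                                ],
                            },
                        },
                    ]
                },
            }
        ]
    },
}

flattened = list(walk_activities(pipeline["properties"]["activities"]))
for depth, name, kind in flattened:
    print("  " * depth + f"{name} ({kind})")
```

The recursion means that containers nested inside other containers are covered without special handling.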

Activities we build automatic data lineage for

- Copy activity - Object-level and column-level lineage

- Dataflow - Object-level lineage

Automatic data lineage

The Dataedo ADF connector always creates automatic data lineage in the form dataset -> task -> dataset. It also supports the extended chain source -> dataset -> task -> dataset -> sink for the following linked service types:

- Microsoft SQL Server

- Azure SQL Server

- Azure Synapse Analytics

- Azure Blob Storage

- Azure Data Lake Storage

- Amazon S3

- Redshift

- MariaDB

- MySQL

- MongoDB

- Snowflake

- DB2

- Postgres
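The two lineage shapes described above can be pictured as lists of directed edges. The sketch below is only an illustration of the difference between them: the function `lineage_edges` and all object names are hypothetical, and it does not reflect Dataedo's internal data model.

```python
from typing import List, Optional, Tuple

Edge = Tuple[str, str]  # (from_object, to_object)


def lineage_edges(
    input_dataset: str,
    task: str,
    output_dataset: str,
    source: Optional[str] = None,
    sink: Optional[str] = None,
) -> List[Edge]:
    """Build the lineage chain for one task.

    The dataset -> task -> dataset part is always present. The source and
    sink ends are added only when they are known, which in this sketch
    stands for a supported linked service type.
    """
    edges: List[Edge] = []
    if source is not None:
        edges.append((source, input_dataset))
    edges.append((input_dataset, task))
    edges.append((task, output_dataset))
    if sink is not None:
        edges.append((output_dataset, sink))
    return edges


# Basic chain: dataset -> task -> dataset
basic = lineage_edges("SalesCsv", "CopySales", "SalesTableDs")

# Extended chain: source -> dataset -> task -> dataset -> sink
extended = lineage_edges(
    "SalesCsv",
    "CopySales",
    "SalesTableDs",
    source="blob://landing/sales.csv",  # hypothetical file in Azure Blob Storage
    sink="dbo.Sales",                   # hypothetical table in Azure SQL
)

for start, end in extended:
    print(f"{start} -> {end}")
```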

Connecting to Azure Data Factory

Permissions

To list resource groups in the connection window, you must have the Data Factory Contributor role at the resource group level or above.

To run an import, you only need read access to the factory you want to document (Microsoft.DataFactory/factories/read). In this case, however, you must enter the resource group and factory name by hand instead of picking them from the list.
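When the resource group and factory name are entered by hand, it can help to see how these values, together with the subscription, identify a single factory. The sketch below assembles them into the standard Azure Resource Manager resource ID, which is also the scope at which read access would typically be granted. The function name and the sample values are hypothetical.

```python
def factory_resource_id(
    subscription_id: str, resource_group: str, factory_name: str
) -> str:
    """Build the Azure Resource Manager ID of a data factory."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory_name}"
    )


# Hypothetical values; replace them with the ones shown in the Azure portal.
resource_id = factory_resource_id(
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="rg-analytics",
    factory_name="adf-sales",
)
print(resource_id)
```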

Add new connection

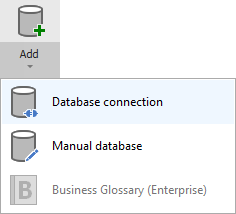

To connect to Azure Data Factory and create new documentation, click Add documentation and choose Database connection.

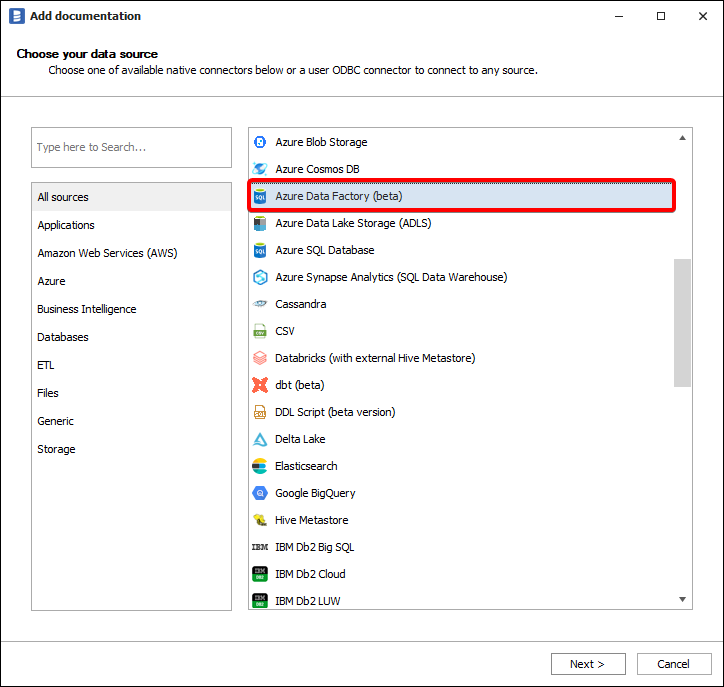

On the connection screen, choose Azure Data Factory as the DBMS.

Connection details

- Advanced settings - Use these when you want to log in using a custom Azure Application Registration.

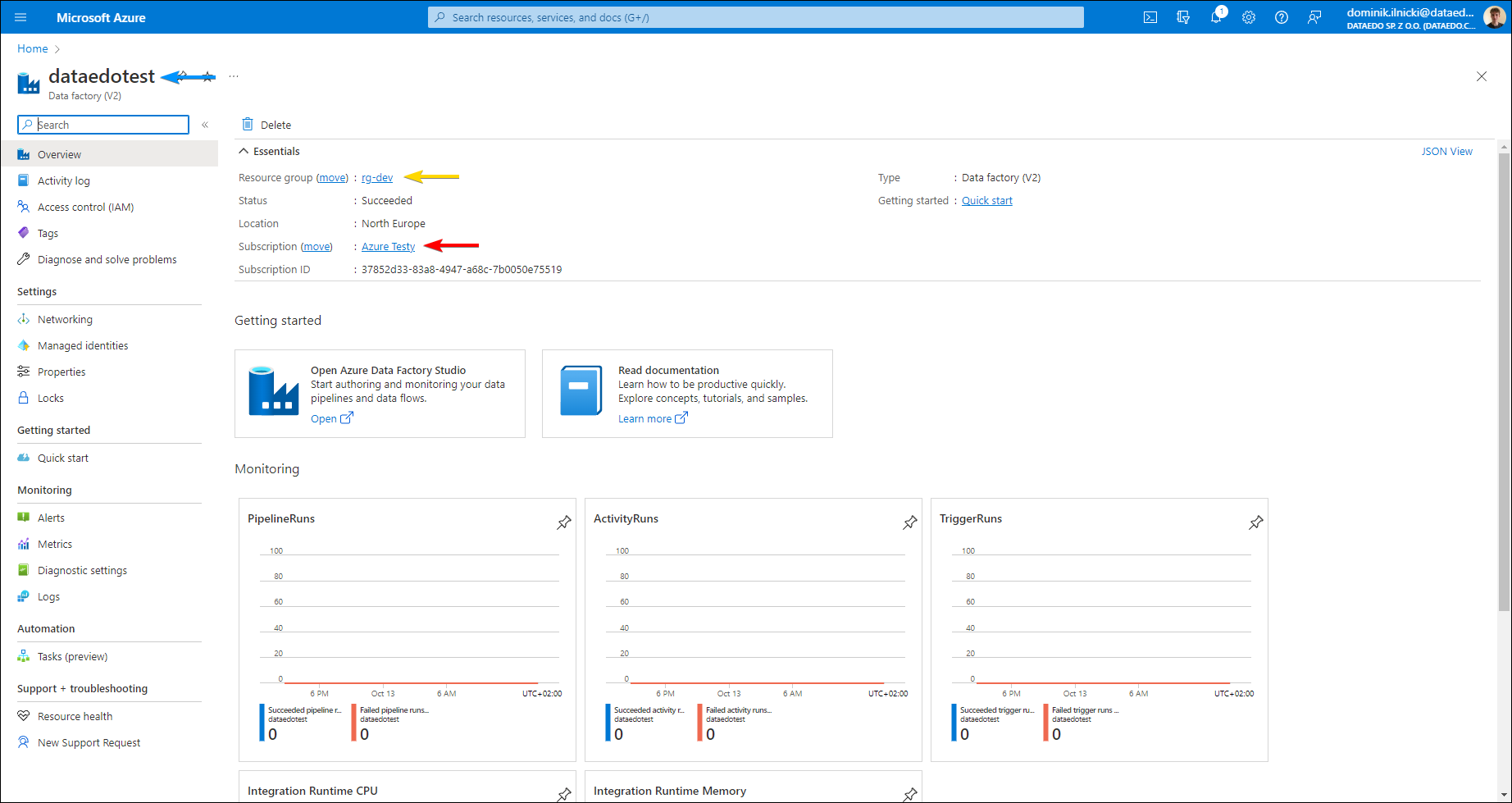

- Subscription - Azure subscription assigned to your factory.

- Resource group - Resource group that contains your factory.

- Data factory name - Name of the factory you want to extract metadata from.

- Analyze Pipeline Runs - Select this option if you want to get lineage from the runtime values of pipeline parameters.

- Last Days - When Analyze Pipeline Runs is selected, specifies the number of recent days from which pipeline runs are analyzed.

- Recent Runs - When Analyze Pipeline Runs is selected, specifies the number of recent runs of each activity, within the selected days, to analyze.

To find this information, go to portal.azure.com, search for your data factory name, and open the resource.
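The interaction between the Last Days and Recent Runs settings can be sketched as a two-step filter: first keep only runs from the chosen time window, then keep the newest few runs per activity. This is one reading of the option descriptions above, not a confirmed description of the connector's logic; the `ActivityRun` type, the `select_runs` function, and the sample data are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from typing import Dict, List, NamedTuple


class ActivityRun(NamedTuple):
    activity: str
    started: datetime


def select_runs(
    runs: List[ActivityRun], last_days: int, recent_runs: int, now: datetime
) -> Dict[str, List[ActivityRun]]:
    """Keep, per activity, the newest `recent_runs` runs from the last `last_days` days."""
    cutoff = now - timedelta(days=last_days)
    by_activity: Dict[str, List[ActivityRun]] = defaultdict(list)
    for run in runs:
        if run.started >= cutoff:  # step 1: time window
            by_activity[run.activity].append(run)
    return {
        activity: sorted(items, key=lambda r: r.started, reverse=True)[:recent_runs]
        for activity, items in by_activity.items()  # step 2: newest N per activity
    }


def day(n: int) -> datetime:
    """Helper for the sample data: midnight UTC on 2024-01-<n>."""
    return datetime(2024, 1, n, tzinfo=timezone.utc)


runs = [
    ActivityRun("CopyFile", day(9)),
    ActivityRun("CopyFile", day(7)),
    ActivityRun("CopyFile", day(8)),
    ActivityRun("CopyFile", day(1)),    # older than the window
    ActivityRun("LogSuccess", day(2)),  # older than the window
]

selected = select_runs(runs, last_days=7, recent_runs=2, now=day(10))
for activity, items in selected.items():
    print(activity, [r.started.date().isoformat() for r in items])
```

With these sample values, a larger Last Days setting widens the window, while a larger Recent Runs setting keeps more runs per activity within that window.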

Connecting using custom Azure Application Registration

To set up a custom Application Registration, click Advanced settings next to the Sign In button.

- Azure App Client Id - Available on the Overview tab of your Application Registration.

- Authority and Cloud instance - Depend on your Azure configuration.

- Audience - Depends on the value of the Supported account types setting on the Overview tab of your Application Registration.

The Application Registration needs the following permissions:

- user_impersonation under Azure Service Management

- User.Read under Microsoft Graph

Importing objects

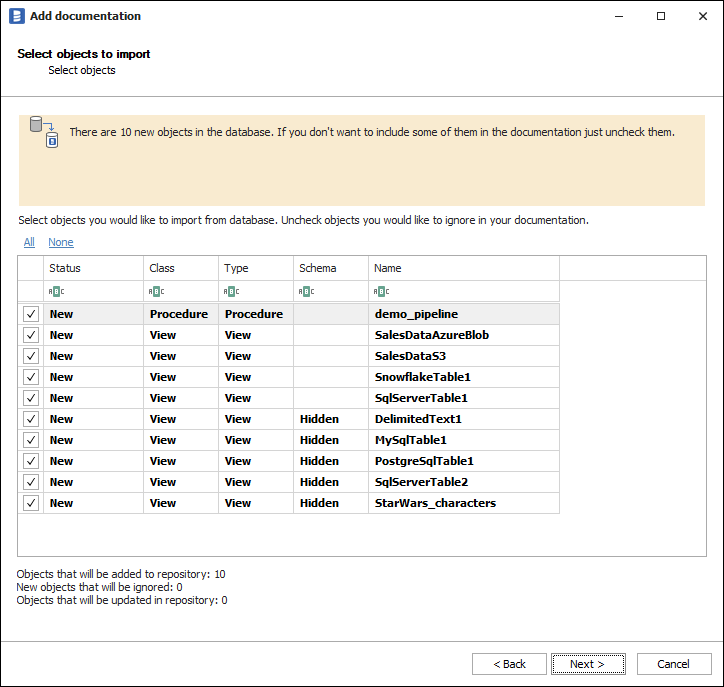

Once the connection is successful, Dataedo reads the metadata and shows a list of the objects it found. You can choose which objects to import and use the advanced filter to narrow down the list.

Confirm the list of objects to import by clicking Next.

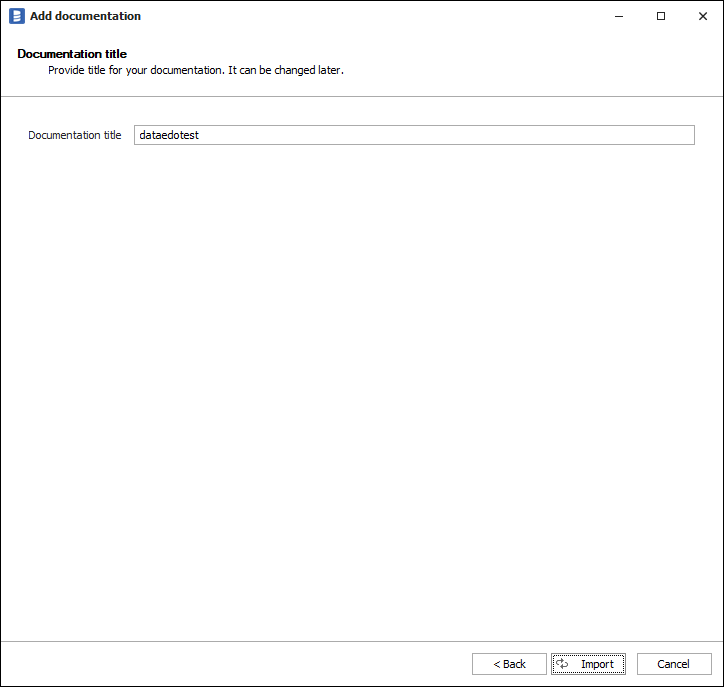

The next screen lets you change the default name of the documentation under which your schema will be visible in the Dataedo repository.

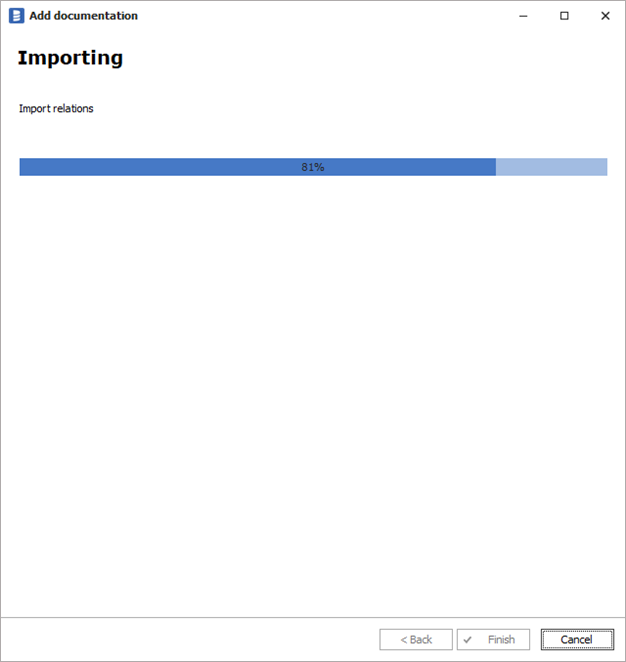

Click Import to start the import.

When the import is done, close the import window by clicking Finish.

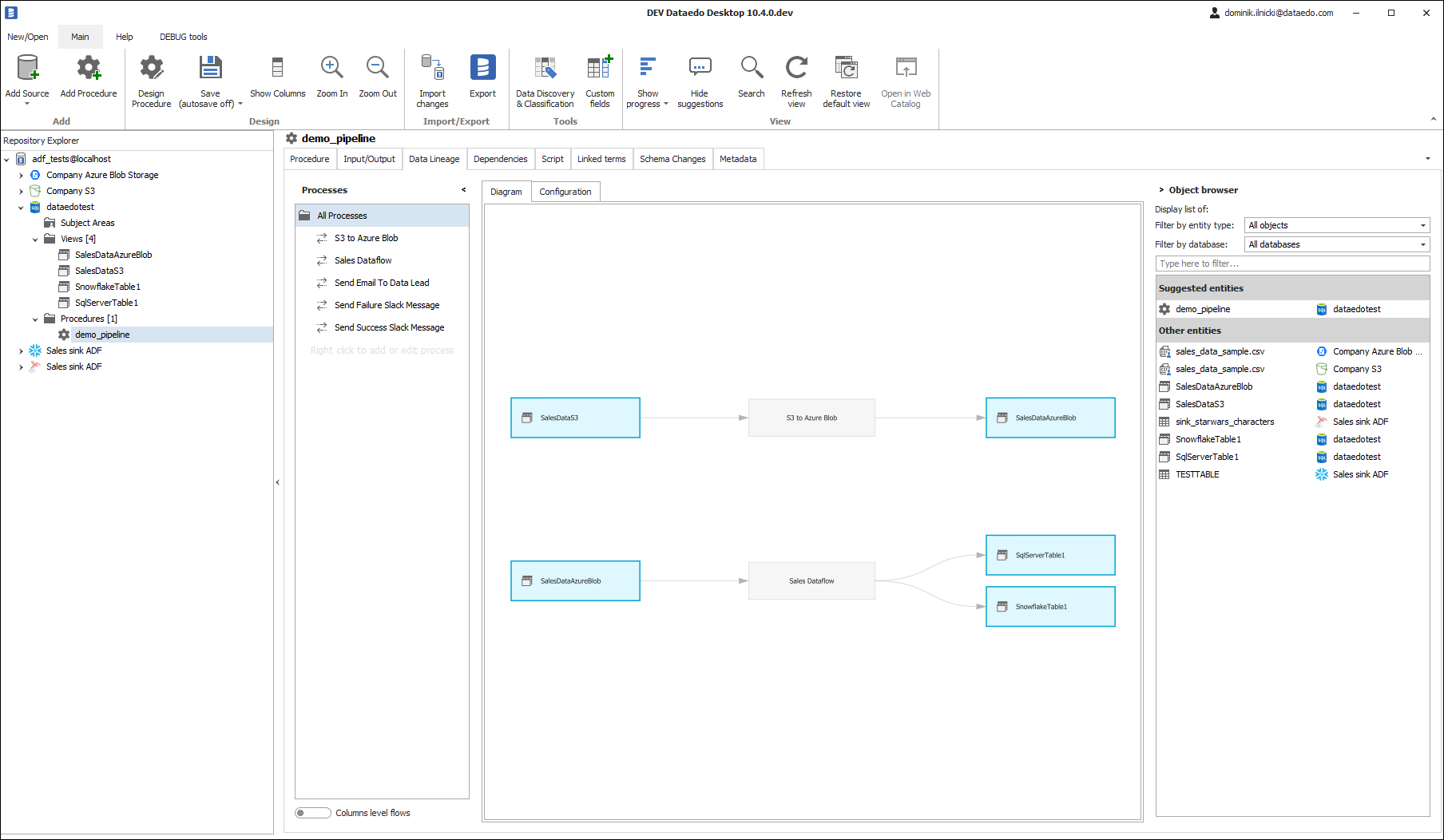

Outcome

Your Azure Data Factory objects have been imported into new documentation in the repository.

Dominik Ilnicki
