How to connect

Pre-requisites:

To scan Azure Databricks Unity Catalog, Dataedo connects to the Databricks workspace API and authenticates with a Personal Access Token (PAT). You need a Databricks workspace that is Unity Catalog enabled and attached to the metastore you want to scan. Dataedo needs the following information to connect to the Databricks instance:

NOTE: You can find all of these in your Databricks workspace.

Workspace URL - once you have opened your Databricks workspace, copy the URL from the address bar of your web browser and paste it into Dataedo.

Token - a personal access token generated in the profile settings of your Databricks workspace. See "How to generate the Personal Access Token?" below.

Catalog - you can either type the catalog name manually or choose it from the list later.
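A URL copied from the address bar often carries a path, a query string, or a fragment in addition to the workspace host. The sketch below shows which part identifies the workspace. The helper name `normalize_workspace_url` and the sample host are hypothetical, and whether Dataedo accepts the full address as pasted is not covered here.

```python
from urllib.parse import urlsplit


def normalize_workspace_url(raw: str) -> str:
    """Reduce a URL copied from the browser to 'https://<workspace host>'."""
    raw = raw.strip()
    # Tolerate a host pasted without a scheme.
    if "://" not in raw:
        raw = "https://" + raw
    parts = urlsplit(raw)
    if not parts.hostname:
        raise ValueError(f"no host found in {raw!r}")
    # Drop the path, query string and fragment; keep only the host.
    return f"https://{parts.hostname}"


if __name__ == "__main__":
    # Hypothetical workspace address, for illustration only.
    pasted = "https://adb-1234567890123456.7.azuredatabricks.net/?o=1234567890123456#workspace/home"
    print(normalize_workspace_url(pasted))
```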

Create Connection

- From the list of connections, select "Databricks"

- Enter connection details

- If you don't remember the catalog name, you can select it from the list of available catalogs using the [...] button in Dataedo

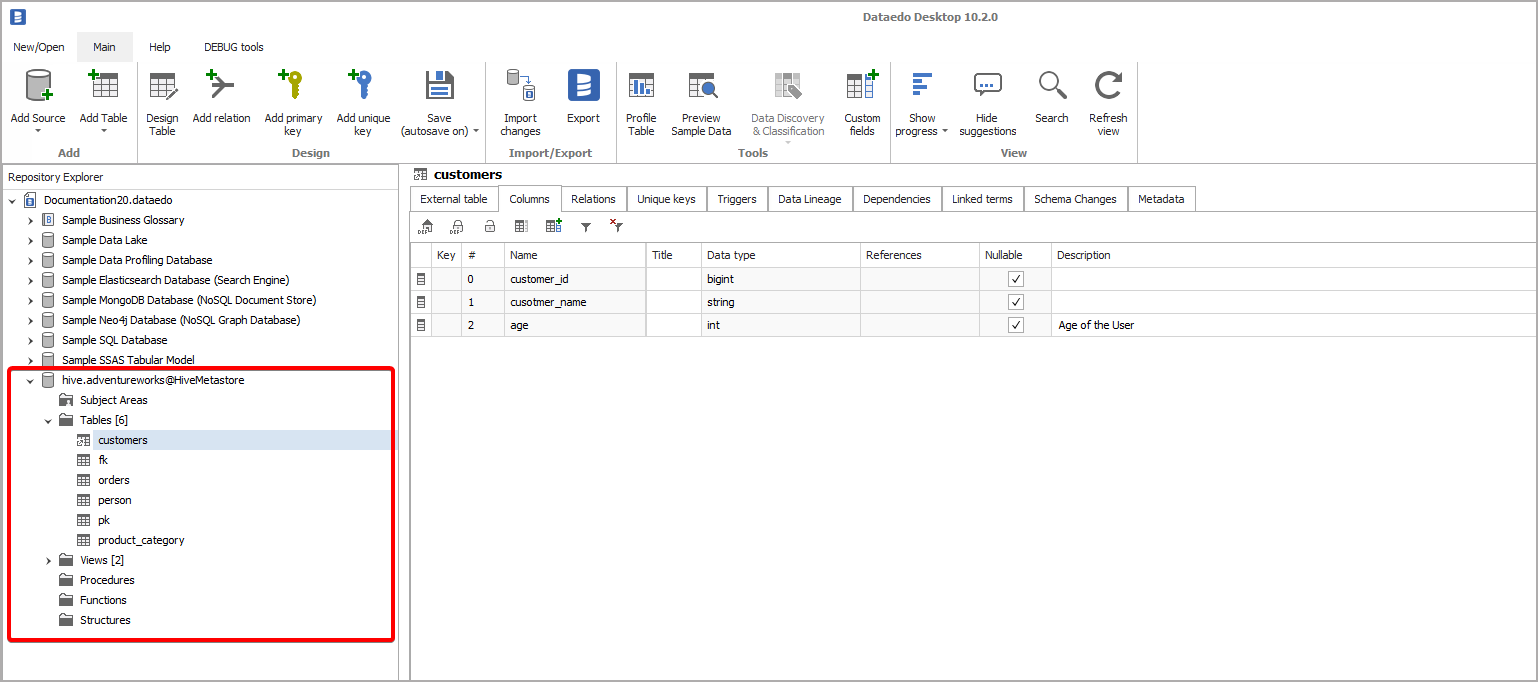
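To check the workspace URL and token outside Dataedo, you can list the catalogs visible to the token through the Databricks Unity Catalog REST API. The following is a minimal sketch, assuming the `GET /api/2.1/unity-catalog/catalogs` endpoint and bearer-token authentication. The function names are hypothetical, pagination is ignored, and this is not necessarily the call Dataedo makes internally.

```python
import json
import urllib.request


def build_catalogs_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build the HTTP request that lists Unity Catalog catalogs."""
    url = workspace_url.rstrip("/") + "/api/2.1/unity-catalog/catalogs"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def catalog_names(response_body: str) -> list:
    """Extract catalog names from the JSON response body."""
    payload = json.loads(response_body)
    return [catalog["name"] for catalog in payload.get("catalogs", [])]


def list_catalogs(workspace_url: str, token: str) -> list:
    """Call the workspace API; raises urllib.error.HTTPError on a bad token."""
    request = build_catalogs_request(workspace_url, token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return catalog_names(response.read().decode("utf-8"))
```

Calling `list_catalogs(workspace_url, token)` with your own values should return the same catalog names that Dataedo offers in its list. An HTTP 401 or 403 error usually points to a problem with the token or its privileges.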

Importing metadata

- Once the connection succeeds, Dataedo reads the objects and shows a list of the objects found. You can choose which objects to import.

- You can also use the advanced filter to narrow down the list of objects.

Outcome

- Metadata for your Unity Catalog has been imported into new documentation in the repository.

- Lineage between the imported Unity Catalog objects has been documented. For more details, check here.

Watchouts

- For all the objects that you want to bring into Dataedo, the user needs to have at least SELECT privilege on tables/views, USE CATALOG on the object’s catalog, and USE SCHEMA on the object’s schema.

- To scan all the objects in a Unity Catalog metastore, connect as a user with the metastore admin role. Learn more from Manage privileges in Unity Catalog and Unity Catalog privileges and securable objects.

- For classification, the user also needs the SELECT privilege on the tables/views to retrieve sample data.

- If your Azure Databricks workspace doesn’t allow access from public network, or if your Microsoft Purview account doesn’t enable access from all networks, you can use the Managed Virtual Network Integration Runtime for scan. You can set up a managed private endpoint for Azure Databricks as needed to establish private connectivity.
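The minimum privileges listed above (USE CATALOG, USE SCHEMA, and SELECT) can be sketched as Databricks SQL `GRANT` statements. The helper below only generates the statement text for a list of tables. The principal and object names are hypothetical, views are not covered, and the exact statement form is an assumption, so check it against the Databricks `GRANT` documentation before running the statements in a SQL editor.

```python
def quote(identifier: str) -> str:
    """Wrap an identifier in backticks, escaping embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def grant_statements(principal: str, tables) -> list:
    """Return GRANT statements for (catalog, schema, table) tuples.

    Each catalog and schema is granted only once, even if several
    tables share it.
    """
    statements = []
    seen = set()
    who = quote(principal)
    for catalog, schema, table in tables:
        candidates = (
            f"GRANT USE CATALOG ON CATALOG {quote(catalog)} TO {who}",
            f"GRANT USE SCHEMA ON SCHEMA {quote(catalog)}.{quote(schema)} TO {who}",
            f"GRANT SELECT ON TABLE {quote(catalog)}.{quote(schema)}.{quote(table)} TO {who}",
        )
        for statement in candidates:
            if statement not in seen:
                seen.add(statement)
                statements.append(statement)
    return statements


if __name__ == "__main__":
    # Hypothetical principal and tables, for illustration only.
    for line in grant_statements(
        "dataedo-scanner@example.com",
        [("main", "sales", "orders"), ("main", "sales", "customers")],
    ):
        print(line + ";")
```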

How to generate the Personal Access Token?

- Use the latest manual from Databricks: Databricks personal access token authentication

After opening the page, if you use a cloud provider other than AWS, you can switch to the respective manual.

Depending on your implementation scenario, you will need to use one of the following:

- We recommend using a Service Principal to avoid losing the connection if a personal account is removed or the token expires. To set it up, follow this guide: Databricks personal access tokens for service principals

- For smaller non-production implementations such as a POC, you can use a personal account. To set it up, follow these steps: Databricks personal access tokens for workspace users
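Because an expired token breaks the connection, it can help to check expiry dates ahead of time. The sketch below assumes token metadata shaped like the `token_infos` array returned by the Databricks Token API, with `expiry_time` in epoch milliseconds and `-1` meaning no expiry. The function name and the 14-day window are hypothetical choices, so verify the field names against the current Databricks API reference.

```python
from datetime import datetime, timedelta, timezone


def tokens_expiring_soon(token_infos, now, within_days=14):
    """Return (comment, expiry) pairs for tokens expiring within the window.

    Tokens that have already expired are included as well.
    """
    cutoff = now + timedelta(days=within_days)
    expiring = []
    for info in token_infos:
        expiry_ms = info.get("expiry_time", -1)
        # A negative or missing value is treated as "never expires".
        if expiry_ms is None or expiry_ms < 0:
            continue
        expiry = datetime.fromtimestamp(expiry_ms / 1000, tz=timezone.utc)
        if expiry <= cutoff:
            expiring.append((info.get("comment", ""), expiry))
    return expiring


if __name__ == "__main__":
    # Hypothetical token metadata, for illustration only.
    now = datetime.now(timezone.utc)
    in_a_week = int((now + timedelta(days=7)).timestamp() * 1000)
    sample = [
        {"comment": "dataedo-scan", "expiry_time": in_a_week},
        {"comment": "no-expiry", "expiry_time": -1},
    ]
    for comment, expiry in tokens_expiring_soon(sample, now):
        print(f"{comment} expires on {expiry:%Y-%m-%d}")
```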