Amazon S3 is an object storage service provided by Amazon Web Services (AWS). It can store files and structures in any format. Dataedo provides a native connector that can document files stored in S3 in the following formats:

- JSON,

- CSV,

- Apache Avro,

- Apache Parquet,

- Apache ORC,

- Delta Lake,

- Microsoft Excel,

- XML.

Prerequisites

IAM User

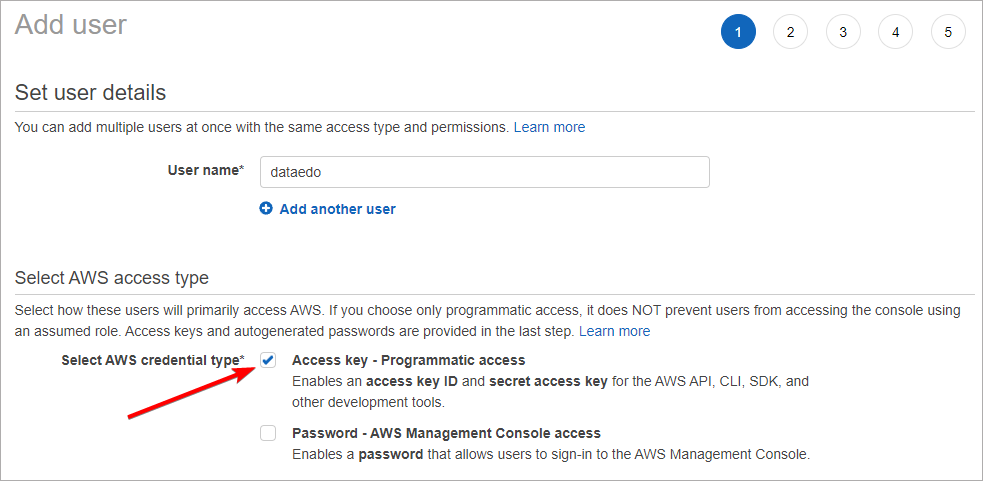

To document objects stored in S3 with Dataedo, you need an IAM user with S3 read access, which Dataedo will use to connect to the bucket. To create this user:

- Open the IAM service in the AWS Console,

- Open the Users tab,

- Click the Add Users button,

- Set the user name,

- In Select AWS Credential Type, check Access key – Programmatic access,

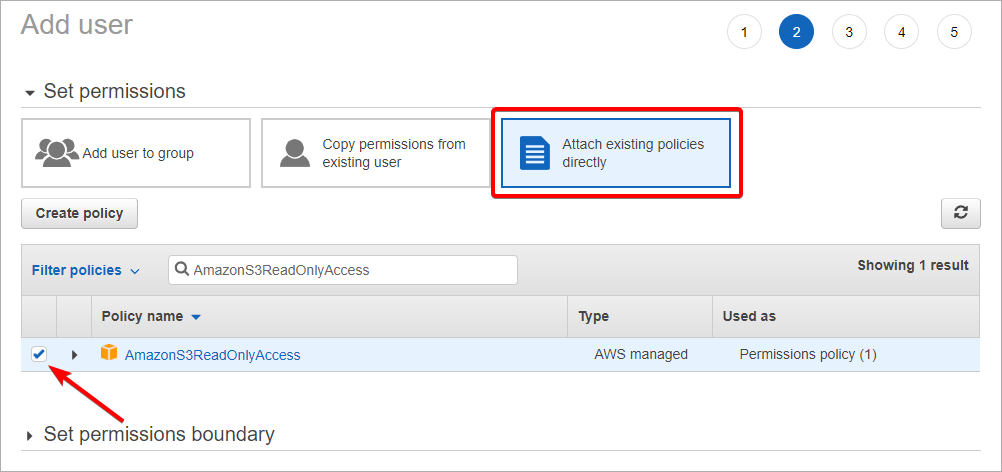

- In the Permissions section:

  - select Attach existing policies directly,

  - search for AmazonS3ReadOnlyAccess and check the policy,

- (Optional) Set tags,

- Review the options and, if everything is correct, click Create User,

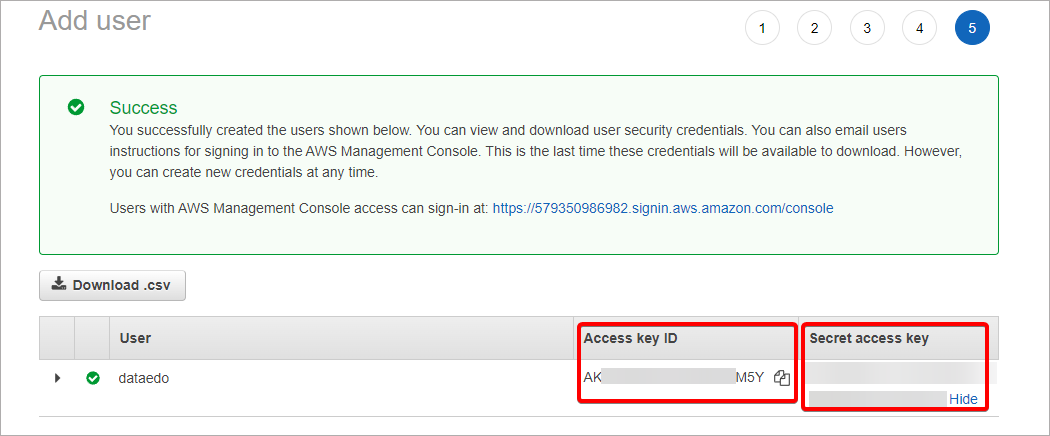

- After creating the user, save the Access Key and the Secret Key. You will need them later to authenticate to S3 when connecting with Dataedo.
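The console steps above can also be scripted. The following is a minimal sketch, assuming the boto3 library and AWS credentials that are allowed to manage IAM. The function name `create_read_only_user` and the user name `dataedo-reader` are hypothetical, and the console procedure remains the documented route.

```python
# ARN of the AWS managed policy selected in the Permissions step.
S3_READ_ONLY_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"


def create_read_only_user(iam, user_name):
    """Create an IAM user with S3 read-only access and return its keys.

    `iam` is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    # Create the user (console: Add Users, set user name).
    iam.create_user(UserName=user_name)

    # Attach the managed policy (console: Attach existing policies directly).
    iam.attach_user_policy(UserName=user_name, PolicyArn=S3_READ_ONLY_POLICY_ARN)

    # Generate programmatic credentials (console: Access key - Programmatic access).
    key = iam.create_access_key(UserName=user_name)["AccessKey"]

    # The secret key is returned only once, so store it securely right away.
    return key["AccessKeyId"], key["SecretAccessKey"]
```

With boto3 installed, this could be called as `create_read_only_user(boto3.client("iam"), "dataedo-reader")`. The two returned values correspond to the Access Key and Secret Key that Dataedo asks for later.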

Amazon Resource Name - ARN

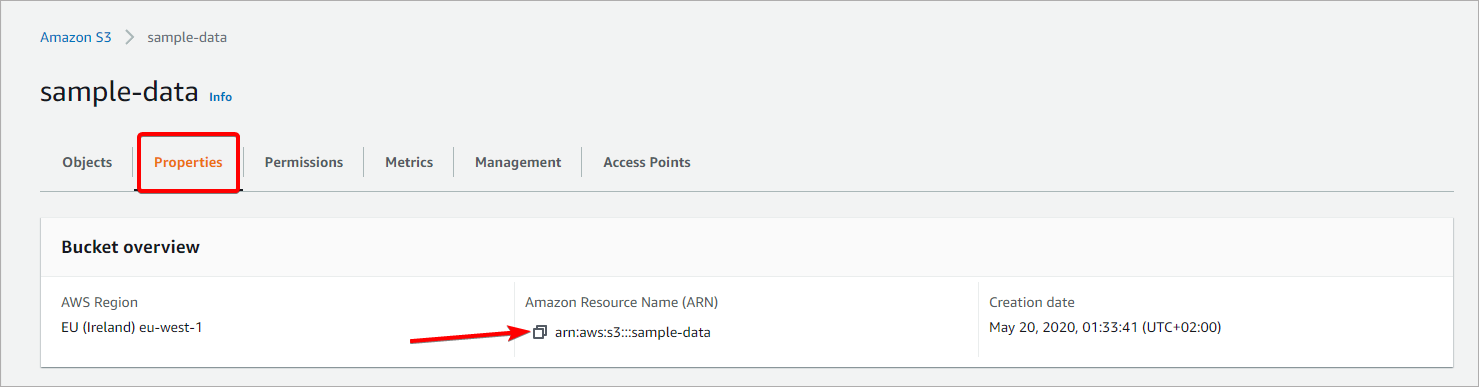

An Amazon Resource Name (ARN) is a unique identifier of an AWS resource. Dataedo uses it to connect to the selected S3 bucket. To find the ARN:

- Open the S3 service in the AWS Console,

- Open the bucket that contains the file(s) you want to document,

- Open the Properties tab,

- Copy the ARN value.
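Before pasting the copied value into Dataedo, it can help to check that it really is a bucket-level ARN. The sketch below assumes the standard `aws` partition, where S3 bucket ARNs have the form `arn:aws:s3:::bucket-name`. The helper name `bucket_from_arn` is hypothetical and not part of Dataedo.

```python
S3_ARN_PREFIX = "arn:aws:s3:::"


def bucket_from_arn(arn):
    """Return the bucket name from an S3 bucket ARN, or raise ValueError."""
    arn = arn.strip()
    if not arn.startswith(S3_ARN_PREFIX):
        raise ValueError(f"Not an S3 ARN: {arn!r}")

    resource = arn[len(S3_ARN_PREFIX):]
    # A bucket ARN carries no object key, so it must not contain a slash.
    if not resource or "/" in resource:
        raise ValueError(f"Not a bucket-level S3 ARN: {arn!r}")
    return resource
```

For example, `bucket_from_arn("arn:aws:s3:::my-example-bucket")` returns `"my-example-bucket"`, while an object ARN such as `arn:aws:s3:::my-example-bucket/data.csv` is rejected.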

Connecting Dataedo to Amazon S3

Dataedo provides two ways to document files in an S3 bucket: you can either document an object stored in S3 as a structure in existing documentation, or add a new connection to the S3 bucket as new documentation.

Document an object stored in S3 as structure in existing documentation

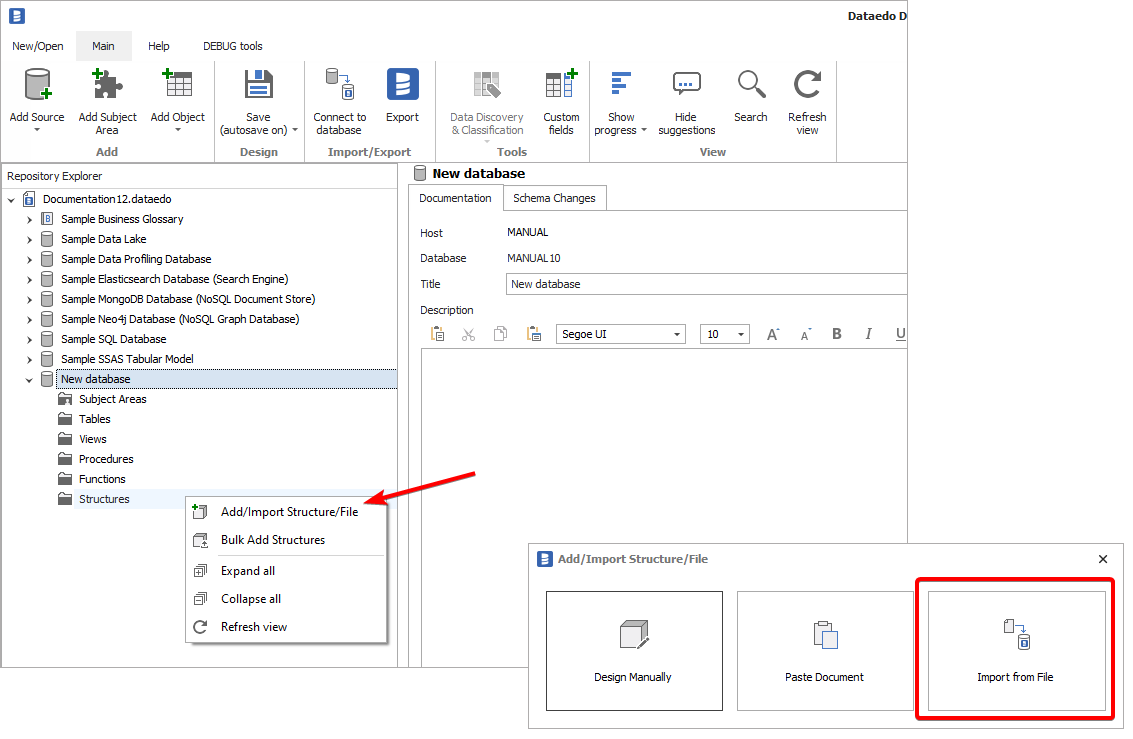

Right-click Structures and select Add/Import File/Structure. In the window that opens, select Import from file.

Select the format of the file to import. If you select more than one file in the following steps, this format will be used as the default, although you can still change the format for each file individually.

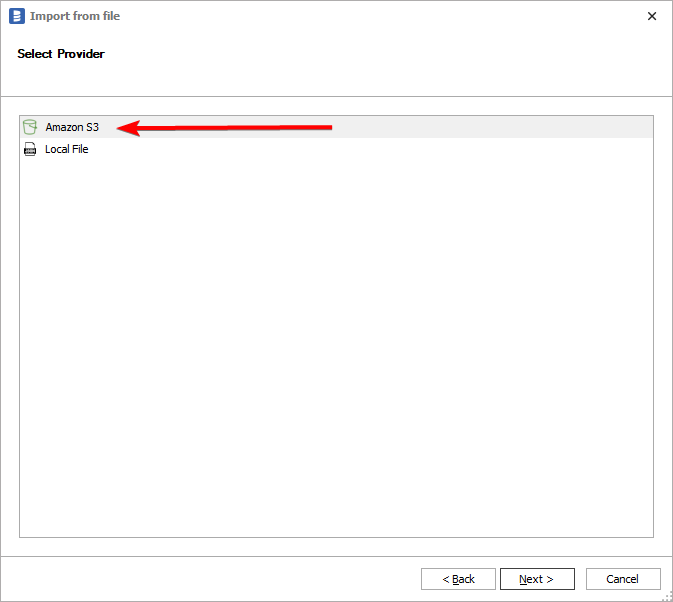

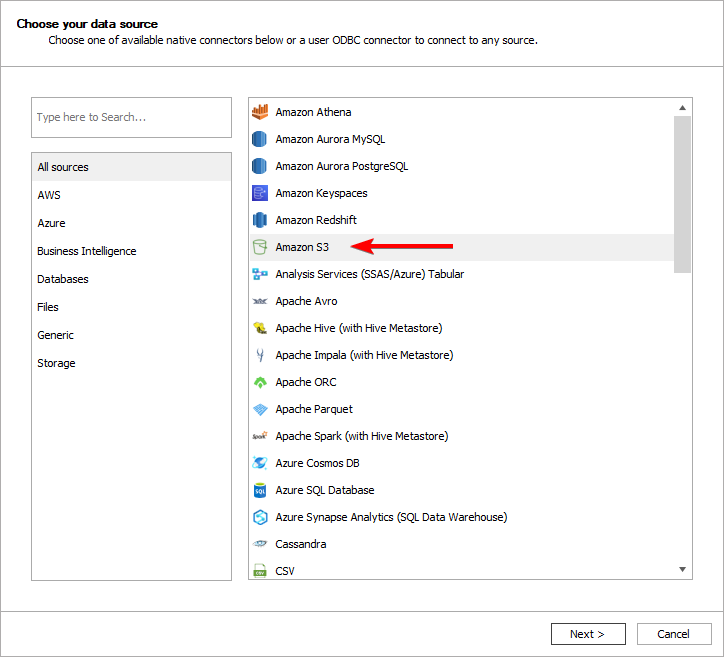

Select Amazon S3 as the provider:

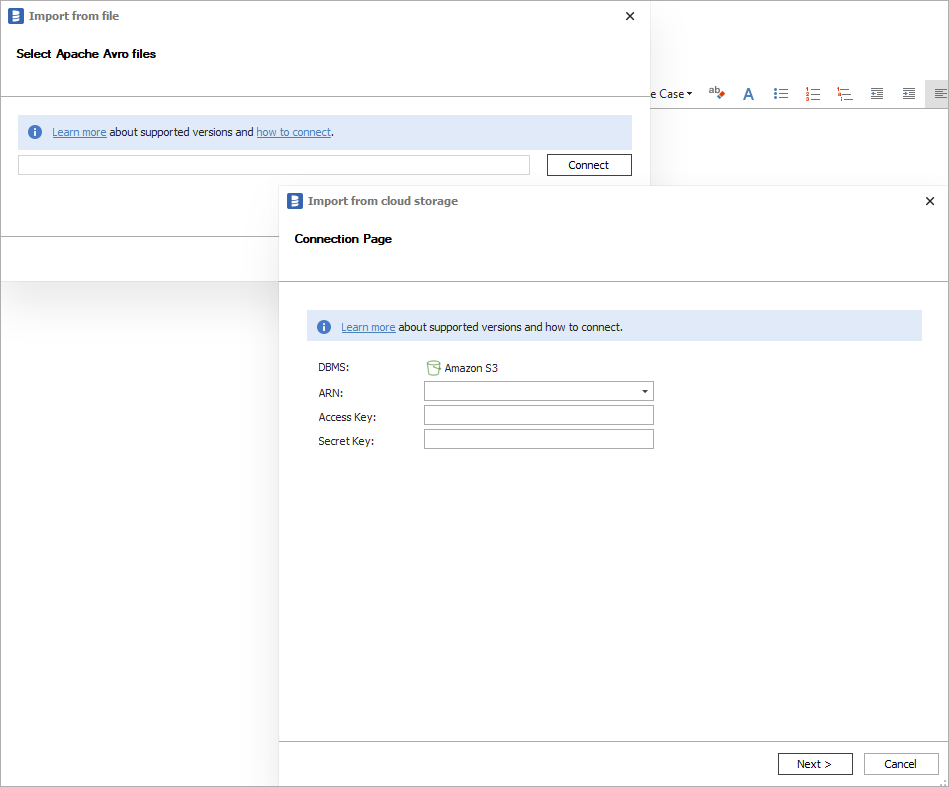

In the Select file step, click the Connect button and provide the connection details for Amazon S3:

- ARN - the Amazon Resource Name, which uniquely identifies the S3 bucket,

- Access Key - the key assigned to the IAM user that Dataedo will use to connect to the S3 bucket,

- Secret Key - the secret key for that IAM user, which serves as its password.

How to obtain these connection details is described in the Prerequisites section. Click Next.
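If the connection fails, it may be worth checking the three values outside Dataedo first. The following is a minimal sketch, assuming the boto3 library. The helper name `list_sample_keys` is hypothetical, and the bucket name is the last part of the ARN.

```python
def list_sample_keys(s3, bucket_name, limit=5):
    """Return up to `limit` object keys from the bucket.

    `s3` is expected to be a boto3 S3 client created with the IAM user's
    Access Key and Secret Key.
    """
    response = s3.list_objects_v2(Bucket=bucket_name, MaxKeys=limit)
    # "Contents" is absent from the response when no objects are returned.
    return [obj["Key"] for obj in response.get("Contents", [])]
```

With boto3 installed, the client could be created as `boto3.client("s3", aws_access_key_id=..., aws_secret_access_key=...)`, filling in the saved keys. If the call returns a list of keys, the user has read access to the bucket. If the keys or permissions are wrong, boto3 raises a `ClientError` instead.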

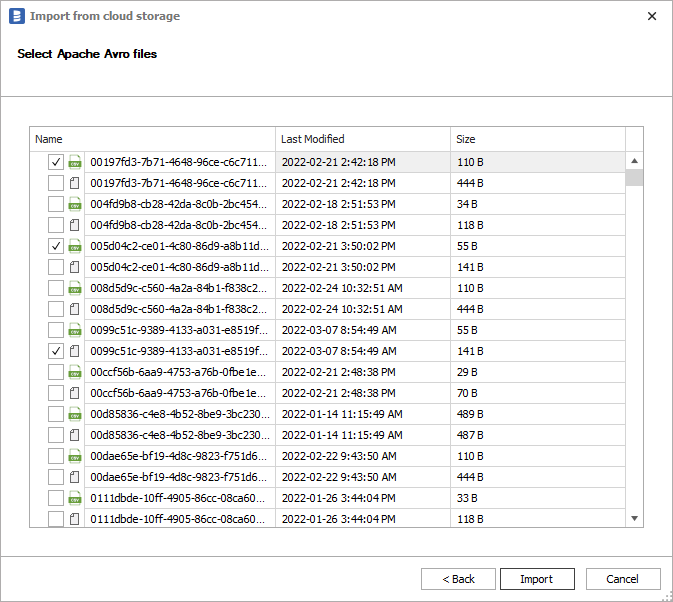

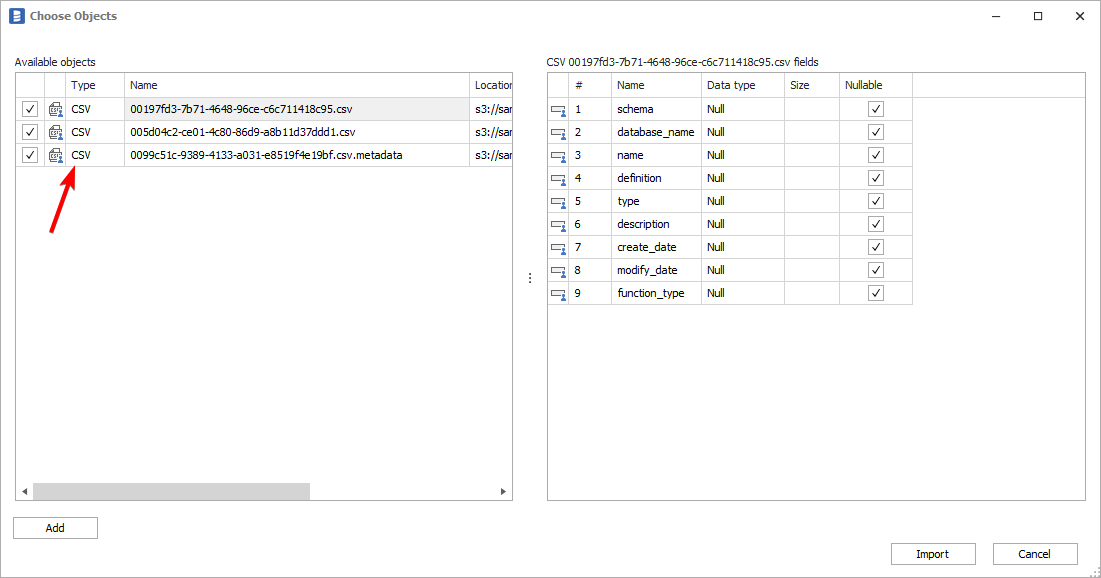

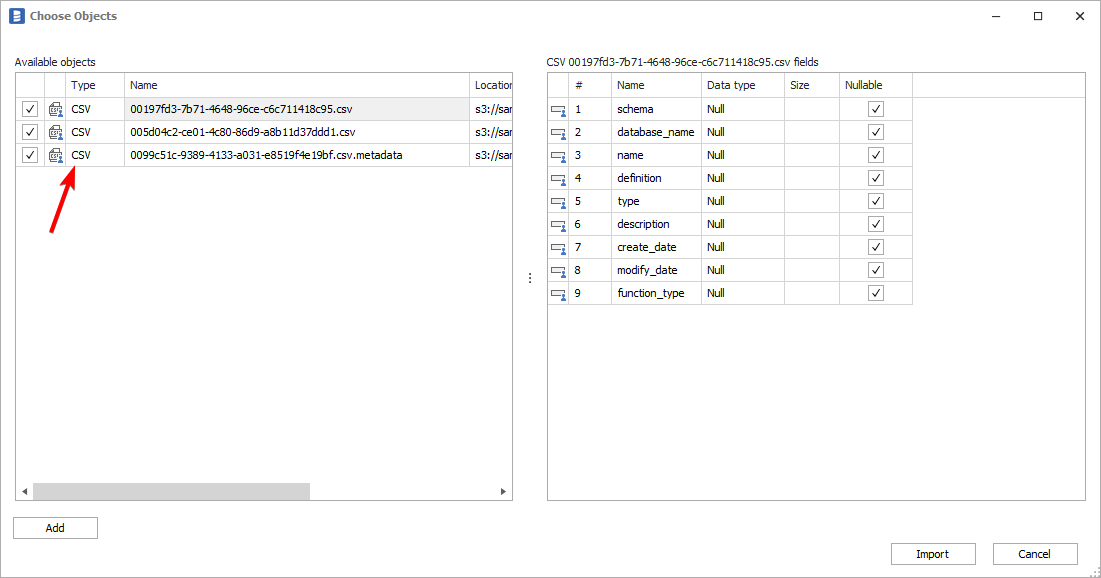

In the next step, select one or more files to import.

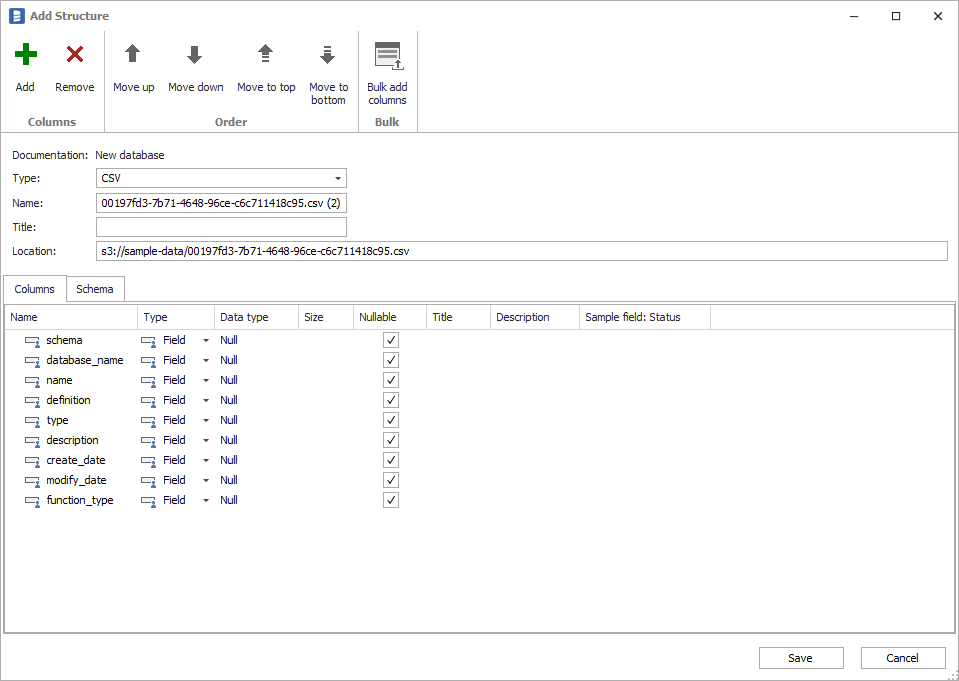

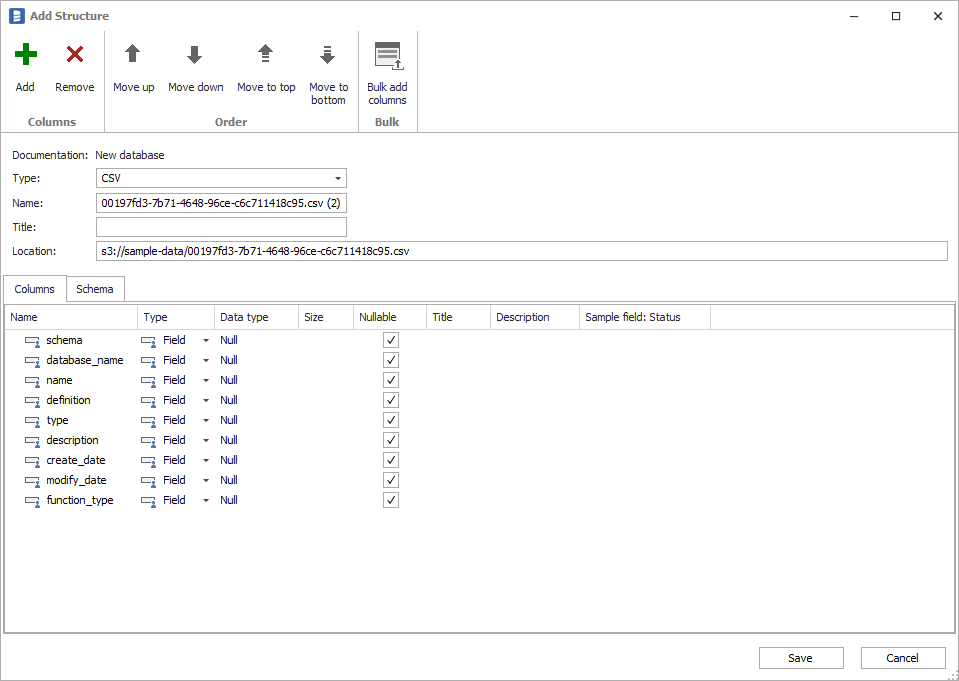

If you selected only one file, Dataedo will try to read it and, if it succeeds, will open a window with the schema and fields, where you can provide details for the structure.

For multiple files, Dataedo will try to detect the format of each file. If detection fails, you will see an error and will have to select the file type manually. You can also change the format of a file if the detected format is wrong.
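How Dataedo detects formats internally is not described here. Purely as an illustration of why detection can fail, the sketch below guesses a format from the object key's file extension. The mapping and the function name `guess_format` are assumptions, not Dataedo's logic. Delta Lake is left out because a Delta table is a directory of files rather than a single file.

```python
from pathlib import PurePosixPath

# Hypothetical extension-to-format mapping for the supported file formats.
FORMAT_BY_EXTENSION = {
    ".json": "JSON",
    ".csv": "CSV",
    ".avro": "Apache Avro",
    ".parquet": "Apache Parquet",
    ".orc": "Apache ORC",
    ".xlsx": "Microsoft Excel",
    ".xls": "Microsoft Excel",
    ".xml": "XML",
}


def guess_format(object_key):
    """Return a format name guessed from the key's extension, or None."""
    # S3 keys use forward slashes, so treat them as POSIX-style paths.
    extension = PurePosixPath(object_key).suffix.lower()
    return FORMAT_BY_EXTENSION.get(extension)
```

A key such as `exports/orders.CSV` maps to `"CSV"`, whereas a key without an extension, such as `logs/part-00000`, yields `None`. That second case is the kind of file where the format would have to be chosen manually.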

Add new connection to S3 bucket

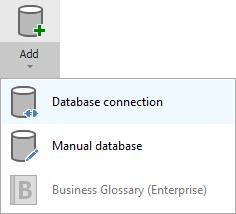

To connect to S3 and create new documentation, click Add documentation and choose Database connection.

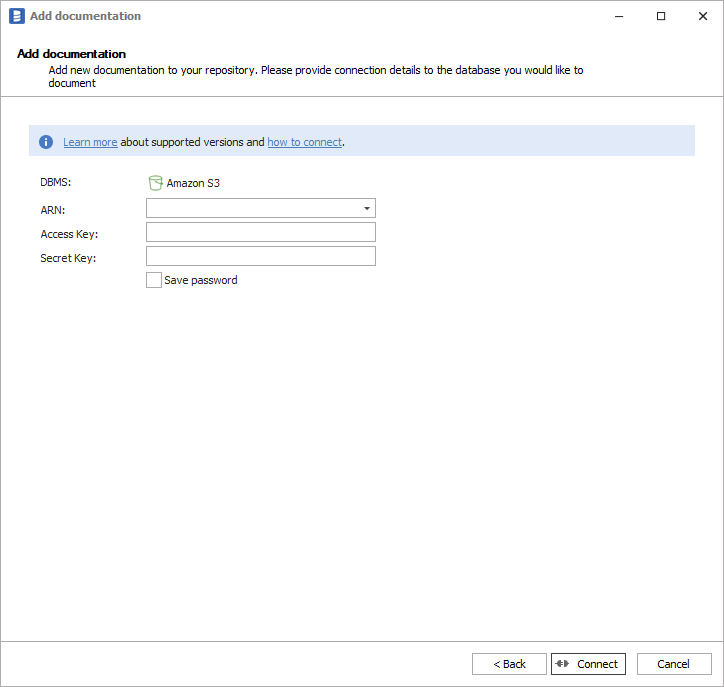

In the Add documentation window, choose Amazon S3.

Provide the connection details for Amazon S3:

- ARN - the Amazon Resource Name, which uniquely identifies the S3 bucket,

- Access Key - the key assigned to the IAM user that Dataedo will use to connect to the S3 bucket,

- Secret Key - the secret key for that IAM user, which serves as its password.

How to obtain these connection details is described in the Prerequisites section. Click Next.

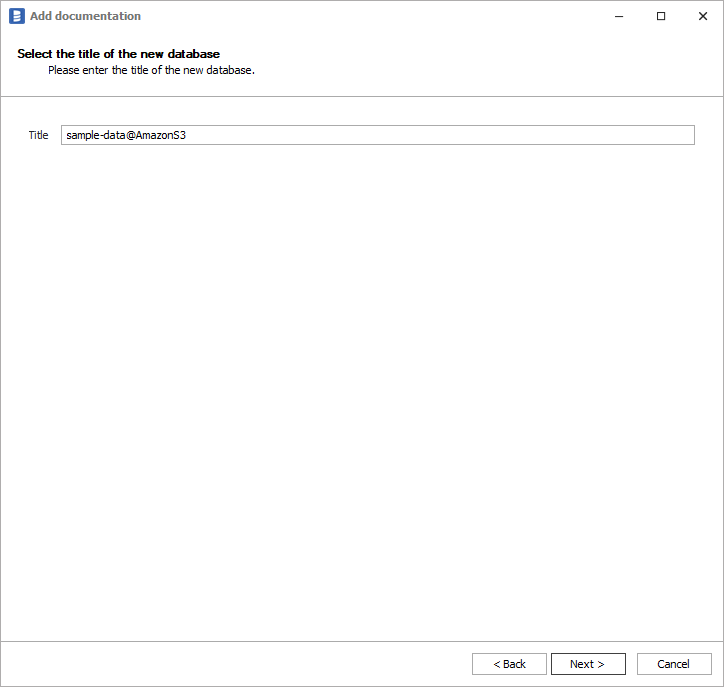

The next screen allows you to change the name under which the documentation will be visible in the Dataedo repository.

Select the format of the file to import. If you select more than one file in the following steps, this format will be used as the default, although you can still change the format for each file individually.

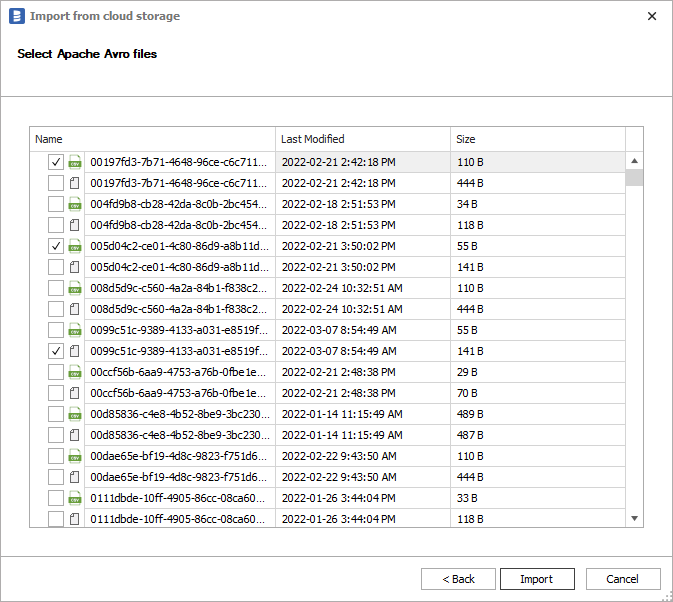

In the next step, select one or more files to import.

If you selected only one file, Dataedo will try to read it and, if it succeeds, will open a window with the schema and fields, where you can provide details for the structure.

For multiple files, Dataedo will try to detect the format of each file. If detection fails, you will see an error and will have to select the file type manually. You can also change the format of a file if the detected format is wrong.

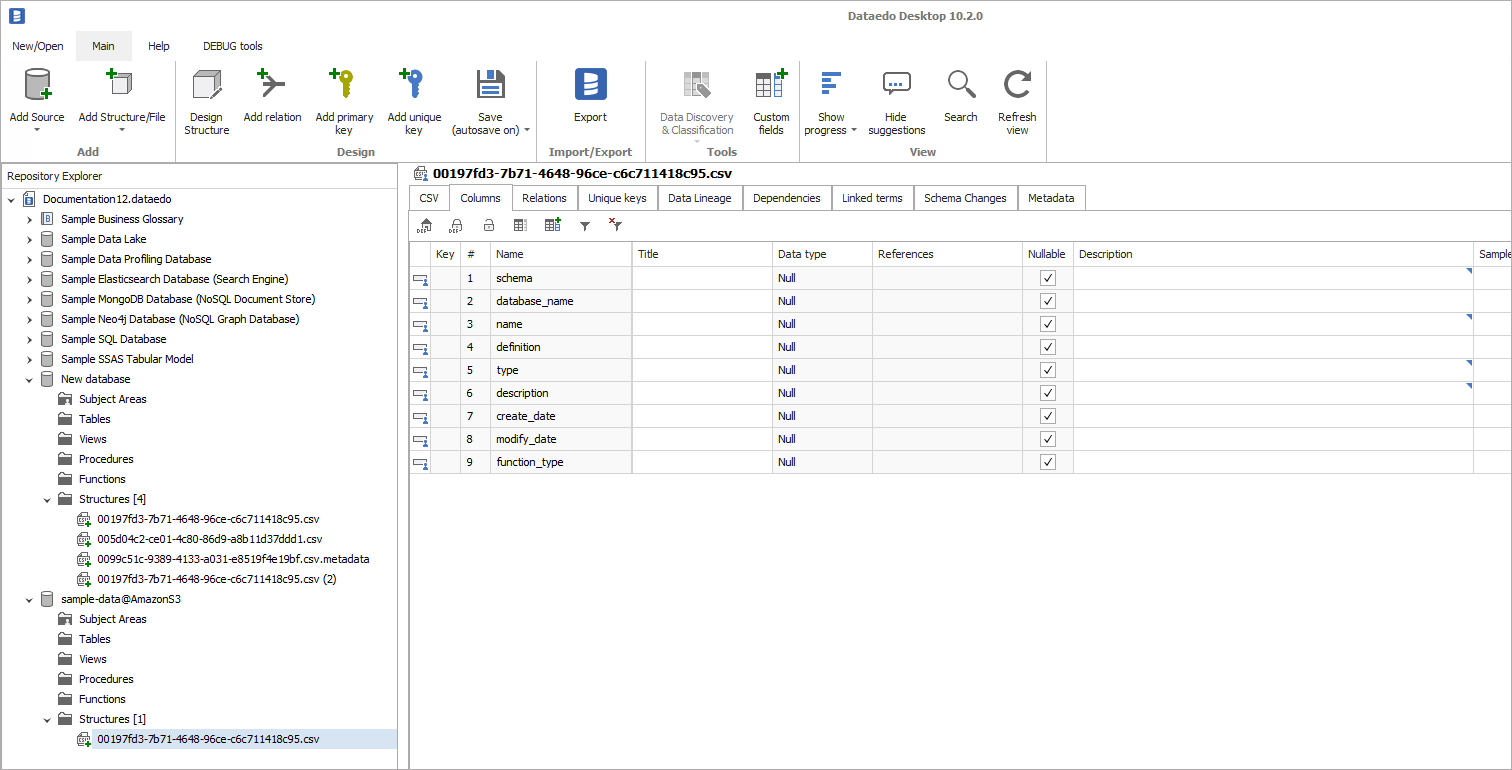

Outcome

Your S3 objects have been imported to the repository.

Data profiling

Dataedo does not support profiling objects stored in Amazon S3.

Data lineage

Dataedo does not support data lineage in Amazon S3.