dbt is a tool that helps data teams transform data in their warehouses simply by writing SELECT statements; it enables analysts to work more like software engineers. dbt Cloud is a hosted service that helps data analysts and engineers productionize dbt deployments. It comes with turnkey support for job scheduling, CI/CD, documentation hosting, monitoring, alerting, and an integrated development environment (IDE).

Supported metadata and data lineage

Imported metadata

| | Imported | Editable |
|---|---|---|
| Tables, Views, Materialized Views, Incremental, Ephemeral | ✅ | ✅ |
| Table comments | ✅ | ✅ |
| Columns | ✅ | ✅ |
| Data types | ✅ | ✅ |
| Nullability (from not_null test) | ✅ | |
| Foreign keys (from relationships test) | ✅ | ✅ |
| Unique keys (from unique test) | ✅ | ✅ |
| Column comments | ✅ | ✅ |
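The nullability, foreign key, and unique key rows depend on dbt generic tests. The following is a minimal sketch of how such tests could be grouped into column properties. It assumes a parsed manifest.json from a recent dbt version in which test nodes carry `test_metadata.name`, `column_name`, and `attached_node` (the tested model). It only illustrates the idea and is not Dataedo's importer; the `LABELS` values and the function name are hypothetical.

```python
from collections import defaultdict

# Generic dbt tests and the column property each one implies (labels are illustrative).
LABELS = {
    "not_null": "NOT NULL",
    "unique": "unique key",
    "relationships": "foreign key",
}


def column_properties(manifest: dict) -> dict:
    """Map (model unique_id, column name) to the properties implied by its tests.

    Assumes test nodes expose test_metadata.name, column_name and attached_node;
    test nodes missing any of these are skipped.
    """
    properties = defaultdict(set)
    for node in manifest.get("nodes", {}).values():
        if node.get("resource_type") != "test":
            continue
        test_name = (node.get("test_metadata") or {}).get("name")
        model_id = node.get("attached_node")
        column = node.get("column_name")
        if test_name in LABELS and model_id and column:
            properties[(model_id, column)].add(LABELS[test_name])
    # Sort the labels so the result is deterministic.
    return {key: sorted(labels) for key, labels in properties.items()}
```

To try it, load the file with `json.load` and pass the resulting dictionary to `column_properties`.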

All objects from dbt are displayed as views in Dataedo; the subtype indicates the actual materialization.

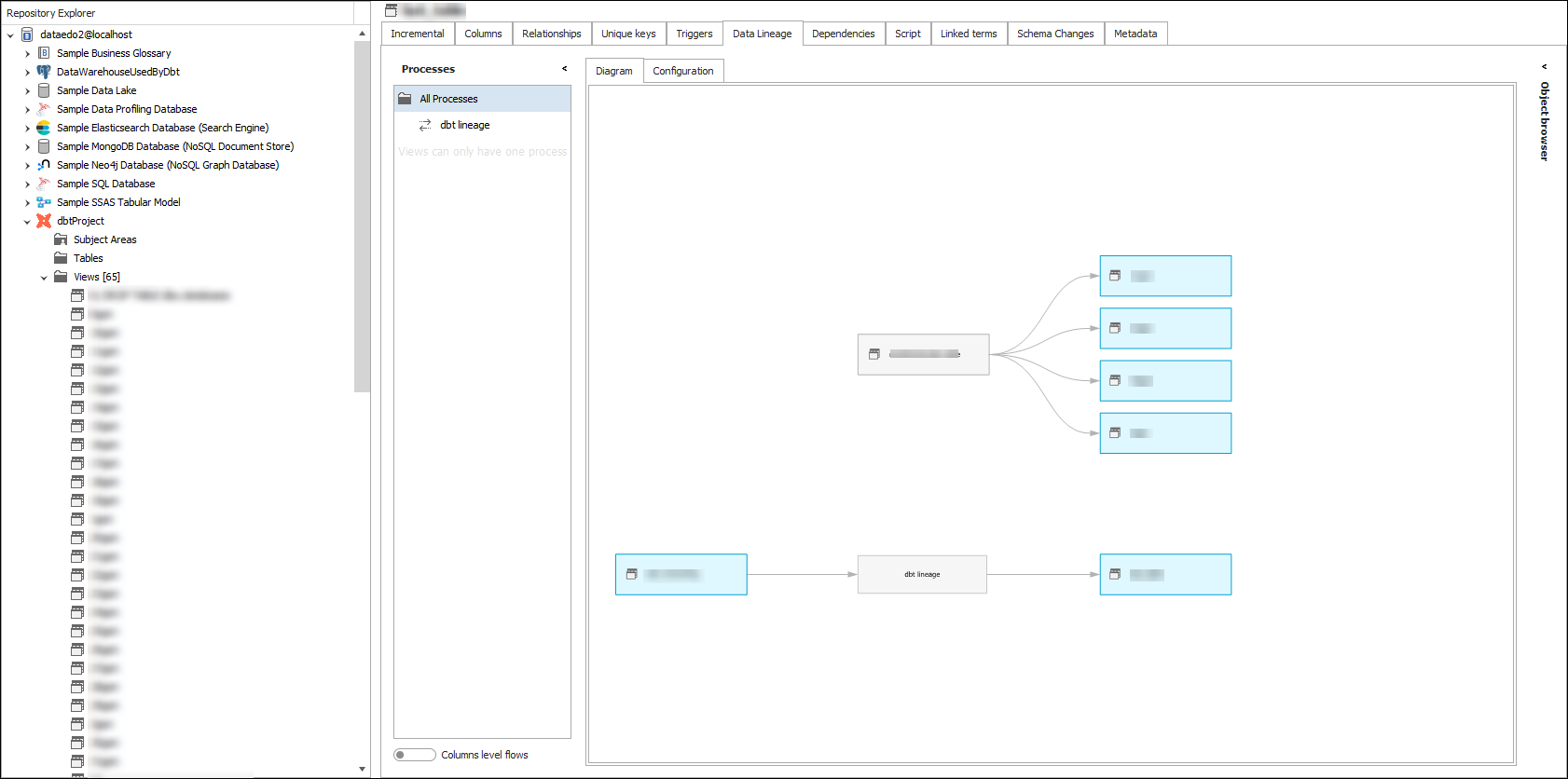
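Because every dbt object appears as a view, the subtype is what tells them apart. The following small sketch, which assumes a parsed manifest.json, reads the materialization from each model's `config.materialized` field and falls back to dbt's default, `view`. How Dataedo labels the resulting subtypes is not shown here, and the function name is hypothetical.

```python
# dbt's default materialization when a model does not configure one.
DEFAULT_MATERIALIZATION = "view"


def model_materializations(manifest: dict) -> dict:
    """Return {model unique_id: materialization} read from each model's config."""
    return {
        unique_id: node.get("config", {}).get("materialized", DEFAULT_MATERIALIZATION)
        for unique_id, node in manifest.get("nodes", {}).items()
        if node.get("resource_type") == "model"
    }
```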

Data lineage

| Source | Method | Status |
|---|---|---|
| dbt object - dbt object | From manifest.json | ✅ |
| database object - dbt object | From manifest.json | ✅ |

Dataedo always creates automatic data lineage between dbt objects. Additionally, it creates lineage between a dbt object and its related database object, but only if you connect the data warehouse used in this dbt project.

Lineage is derived from the references declared in dbt code with `{{ source(source_name, table_name) }}` or `{{ ref(package_name, model_name) }}`. As a result, lineage is imported into Dataedo only when it is also visible in the documentation generated by dbt.
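The `source()` and `ref()` references end up in manifest.json. Each model lists the unique IDs of the models and sources it depends on under `depends_on.nodes`, and each source records the physical `database`, `schema`, and `identifier` it points to. The following sketch assumes those fields and derives both kinds of lineage from a parsed manifest. It is an illustration, not Dataedo's implementation, and the function names are hypothetical.

```python
def lineage_edges(manifest: dict) -> list:
    """Return sorted (parent unique_id, child unique_id) pairs between dbt objects."""
    edges = []
    for child_id, node in manifest.get("nodes", {}).items():
        # Keep models and snapshots only; tests also have depends_on but are not lineage.
        if node.get("resource_type") not in {"model", "snapshot"}:
            continue
        for parent_id in node.get("depends_on", {}).get("nodes", []):
            edges.append((parent_id, child_id))
    return sorted(edges)


def source_tables(manifest: dict) -> dict:
    """Map each source unique_id to the database object it reads from."""
    tables = {}
    for unique_id, source in manifest.get("sources", {}).items():
        # Some adapters leave database empty, so skip missing parts.
        parts = (source.get("database"), source.get("schema"), source.get("identifier"))
        tables[unique_id] = ".".join(p for p in parts if p)
    return tables
```

An edge whose parent is a `source.` ID can then be linked to the physical table from `source_tables`. That table exists in Dataedo only when the warehouse is documented there, which matches the condition described above.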

Prerequisites

- dbt Cloud Service Token with at least read-only permissions.

- Run ID of a run that produced the manifest.json file and, for best results, the catalog.json file as well.
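The service token and run ID are enough to download a run's artifacts from the dbt Cloud Administrative API (v2). This can be a quick way to confirm that a run actually produced manifest.json and catalog.json before you import it. The following sketch uses only the Python standard library. The host `cloud.getdbt.com` is an assumption, because accounts in other regions or on single-tenant deployments use a different host. You also need your numeric account ID, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: North America multi-tenant host; adjust for your dbt Cloud region.
DBT_CLOUD_HOST = "https://cloud.getdbt.com"


def artifact_request(account_id: int, run_id: int, token: str,
                     artifact: str = "manifest.json") -> urllib.request.Request:
    """Build a GET request for one artifact of a dbt Cloud run."""
    url = (f"{DBT_CLOUD_HOST}/api/v2/accounts/{account_id}"
           f"/runs/{run_id}/artifacts/{artifact}")
    return urllib.request.Request(
        url, headers={"Authorization": f"Token {token}", "Accept": "application/json"}
    )


def fetch_artifact(account_id: int, run_id: int, token: str,
                   artifact: str = "manifest.json") -> dict:
    """Download and parse the artifact; raises urllib.error.HTTPError on 4xx/5xx."""
    request = artifact_request(account_id, run_id, token, artifact)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

If `fetch_artifact(account_id, run_id, token, "catalog.json")` fails with an HTTP error, the run most likely did not generate the catalog. In that case, a run of `dbt docs generate` is needed.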

Preparations

Creating a service token

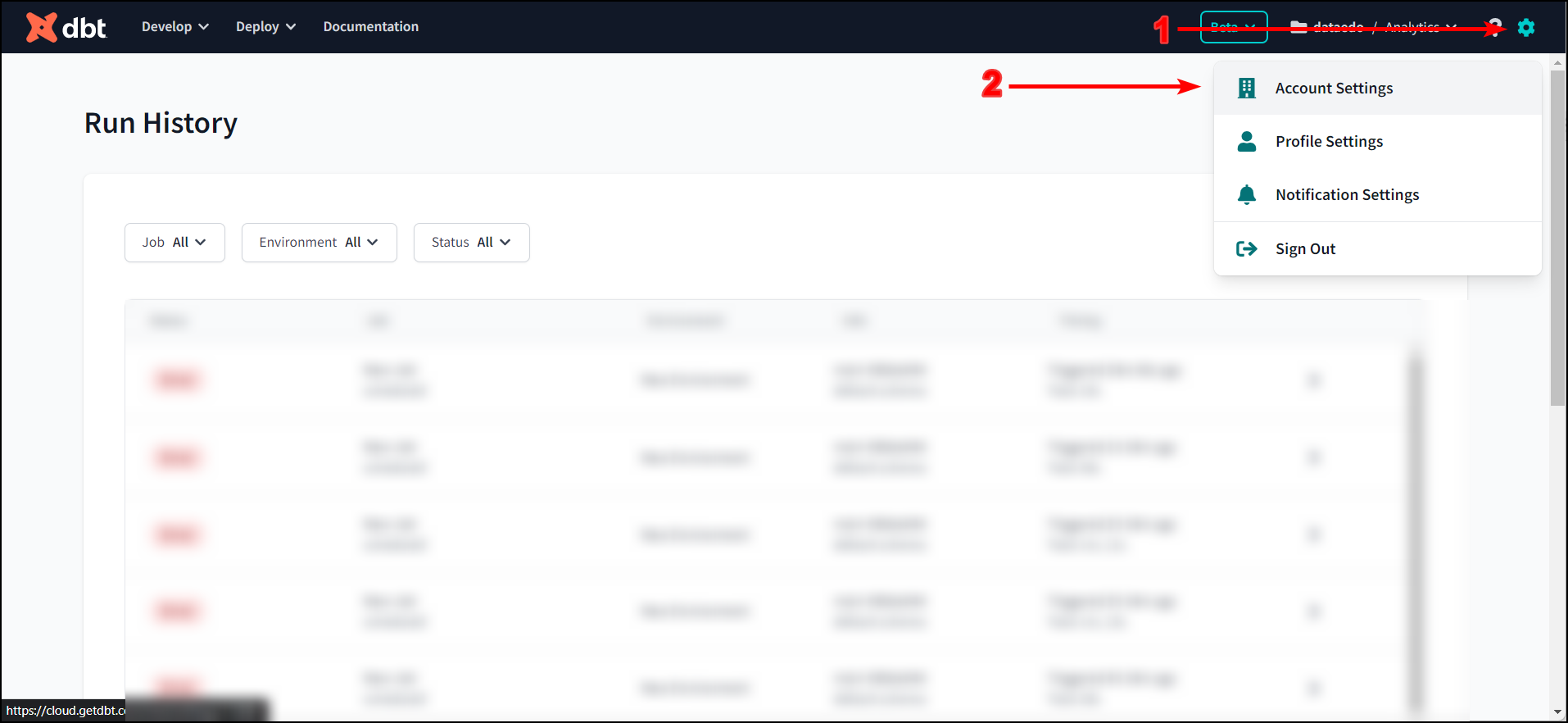

In the upper right corner, click the gear icon, then select Account Settings.

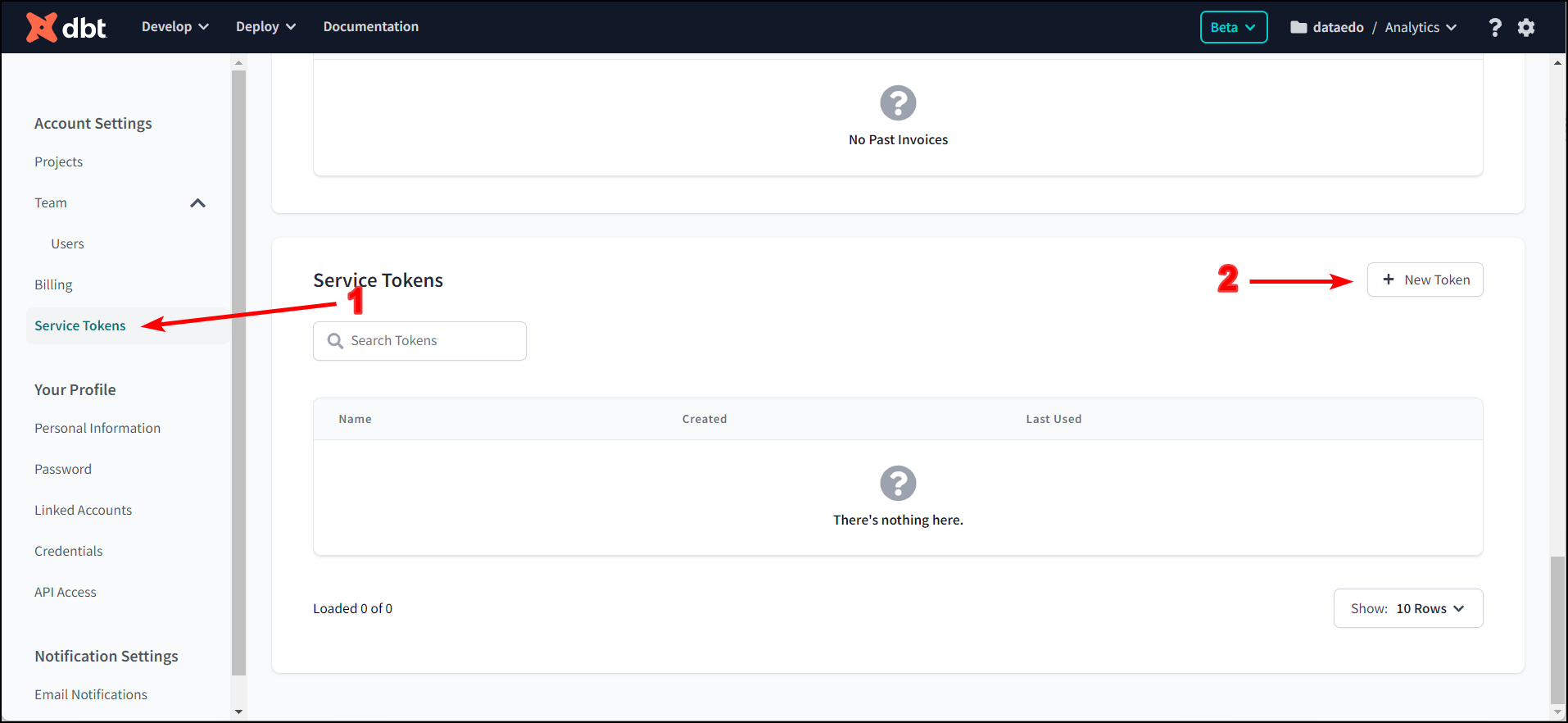

In the left panel, select Service Token, then choose + New Token.

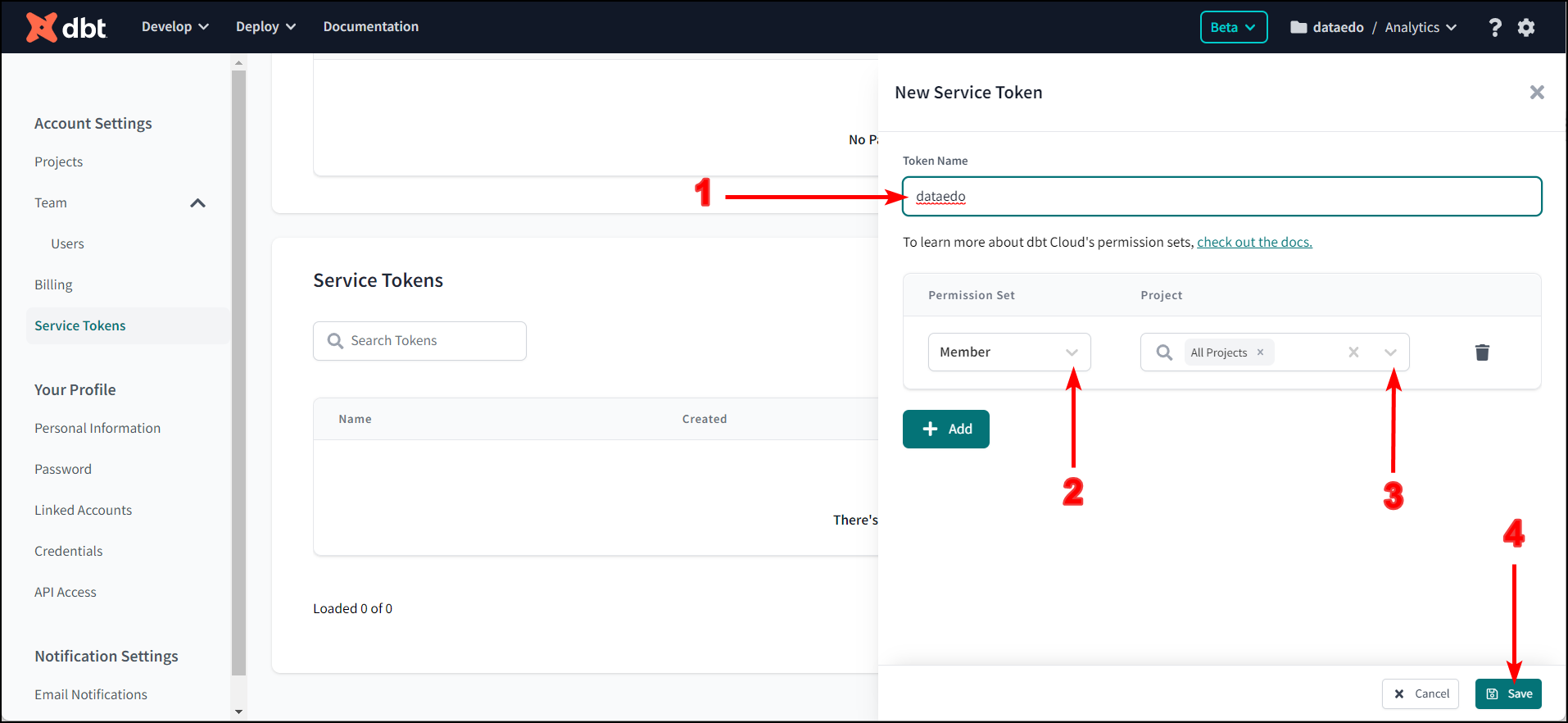

Name the token, add member permissions, select the projects you want to import, and click Save.

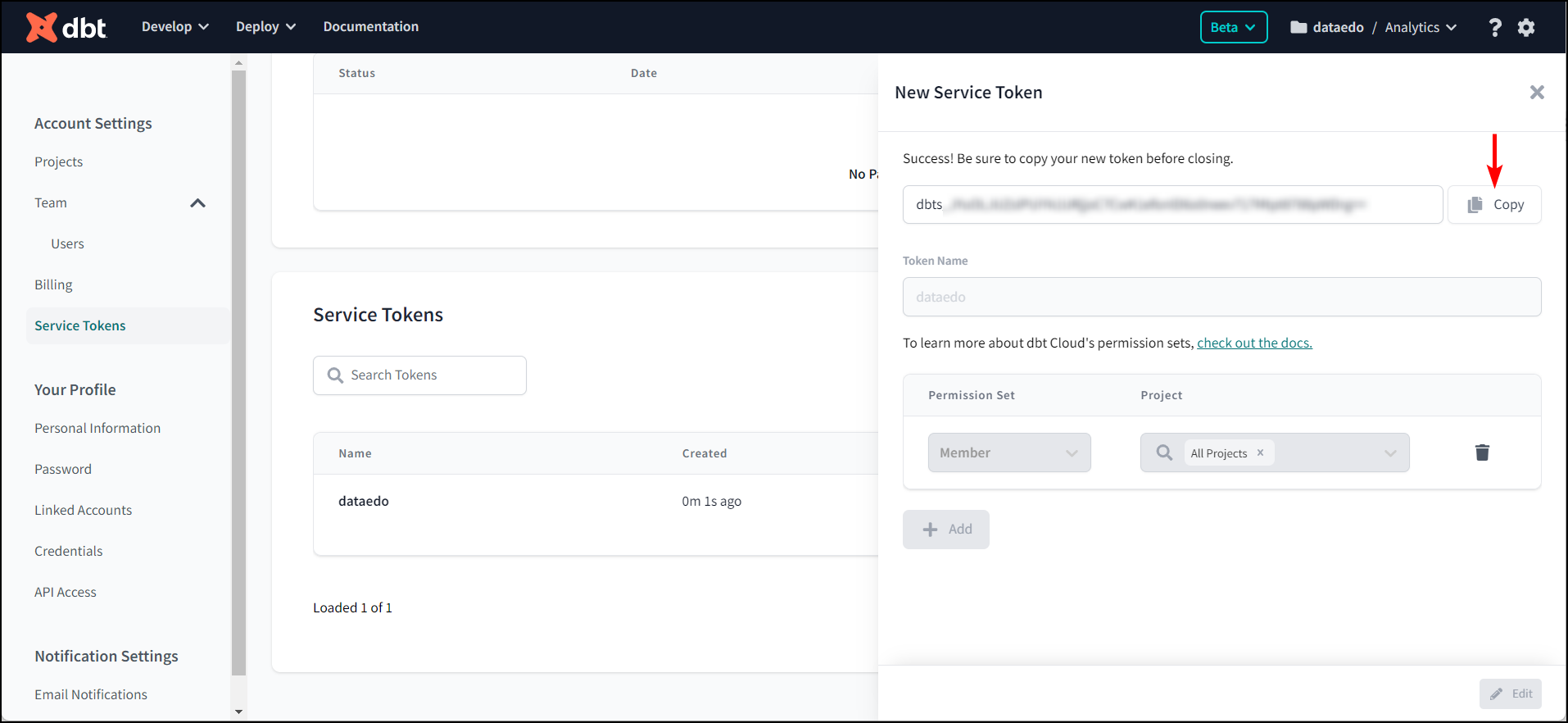

Copy the token and save it in a safe place. Once you close this window, you will no longer be able to see the token's value in dbt Cloud.

Create a proper run and read its run ID

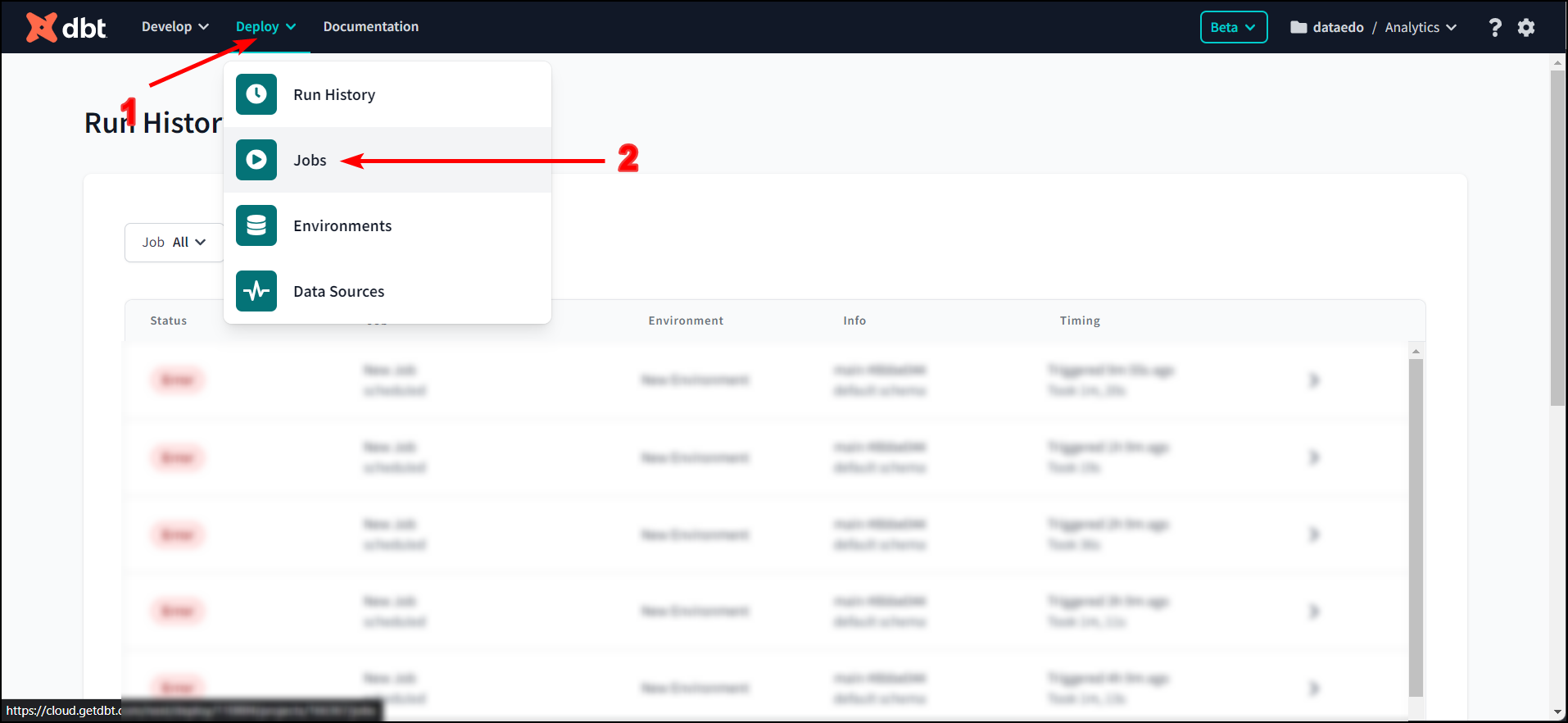

Select Deploy, then Jobs.

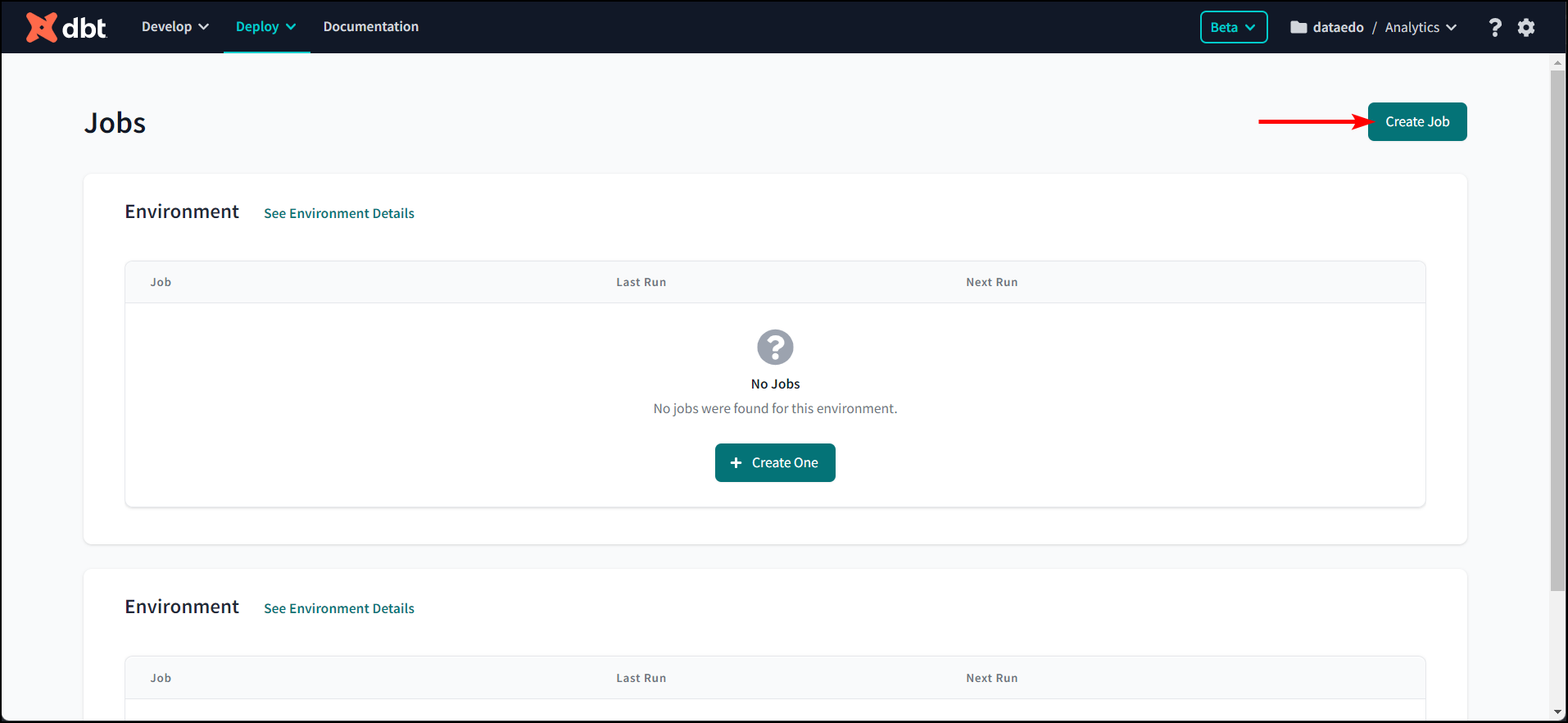

In the upper right corner, click on Create Job.

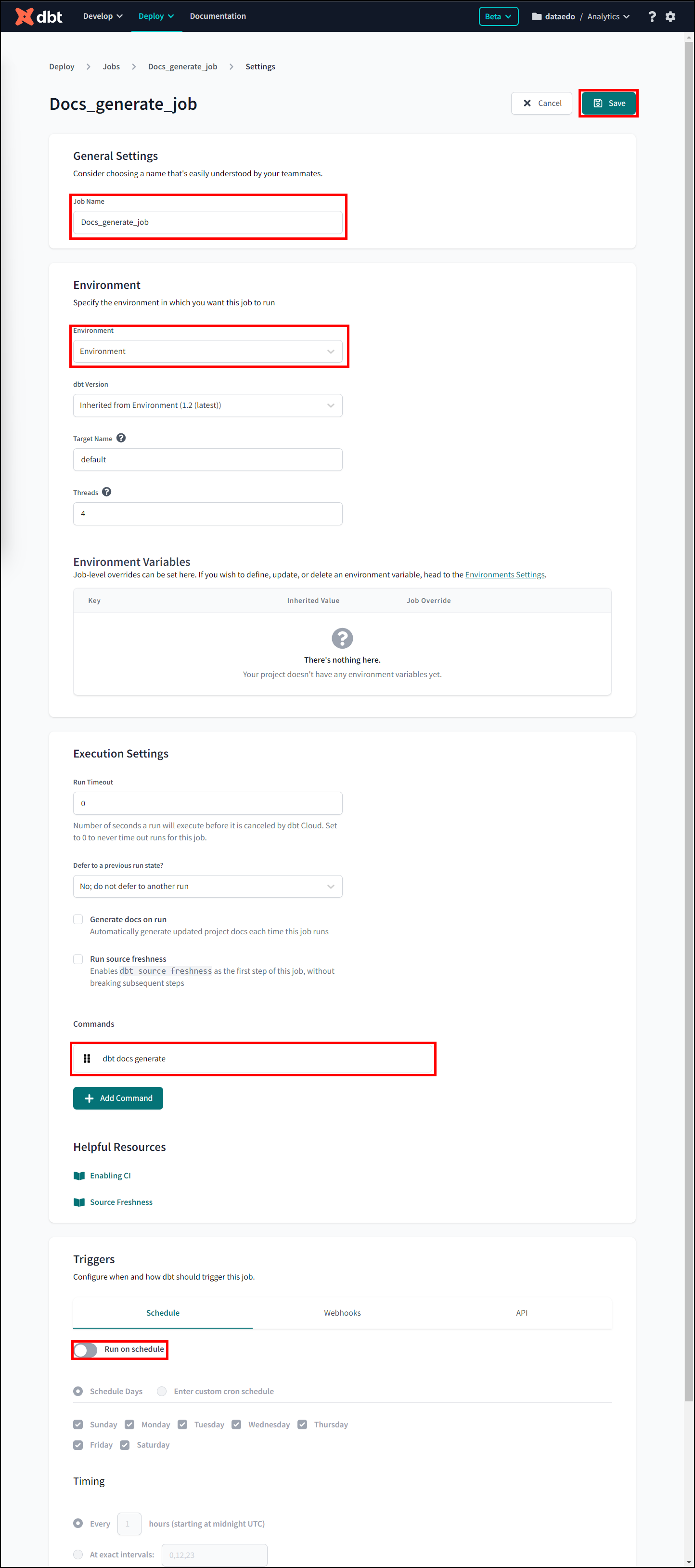

Name the job and specify the environment. In the command field, enter `dbt docs generate`. You can uncheck Run on schedule. Then click Save.
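`dbt docs generate` writes catalog.json, which holds the column data types that dbt reads from the warehouse. manifest.json only records types declared in YAML. The following minimal sketch lists column types per object. It assumes the catalog layout in which each entry under `nodes` or `sources` has a `columns` object whose values carry `name`, `type`, and `index`. The function name is hypothetical.

```python
def column_types(catalog: dict) -> dict:
    """Return {unique_id: [(column name, data type), ...]} in column order."""
    result = {}
    for section in ("nodes", "sources"):
        for unique_id, entry in catalog.get(section, {}).items():
            # Columns are keyed by name; "index" gives their position in the table.
            columns = sorted(entry.get("columns", {}).values(),
                             key=lambda column: column.get("index", 0))
            result[unique_id] = [(c["name"], c["type"]) for c in columns]
    return result
```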

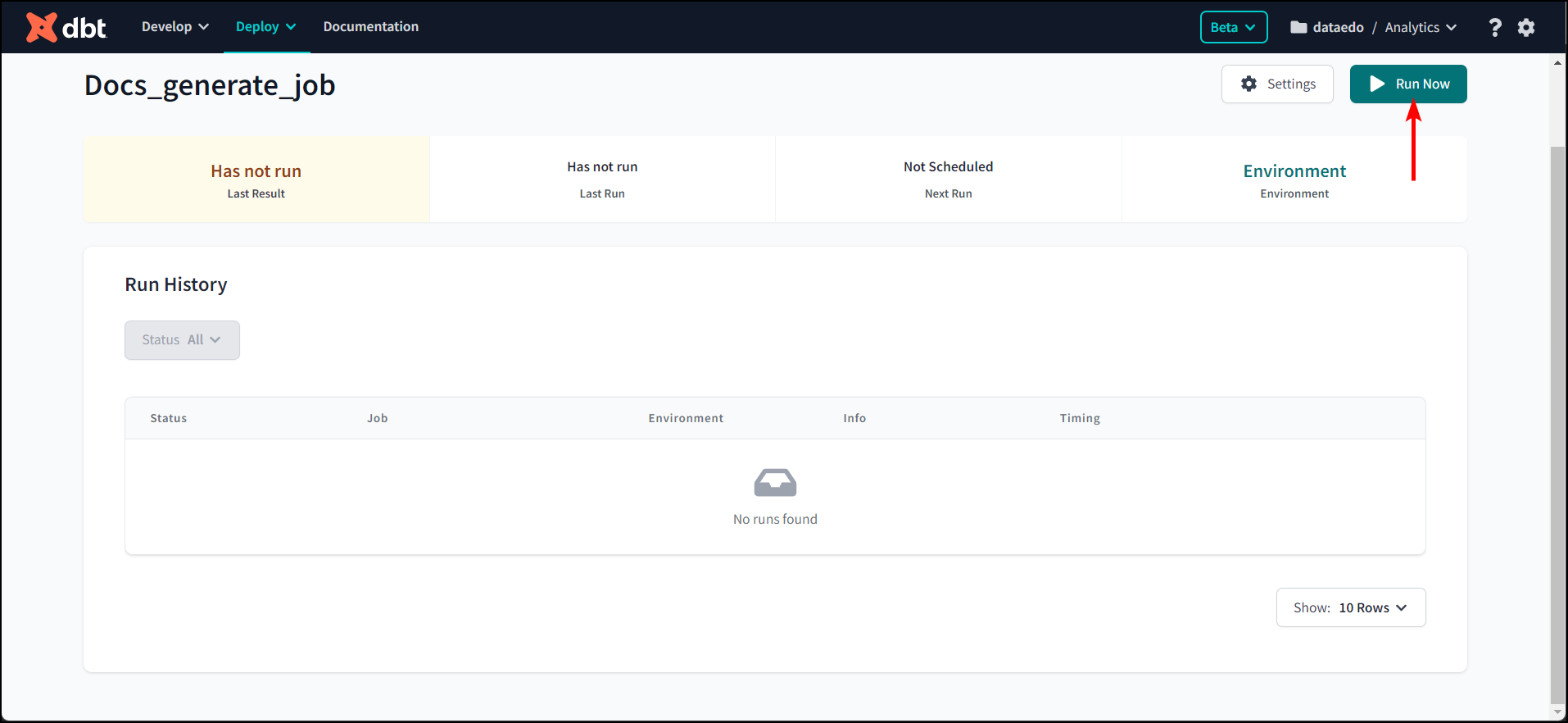

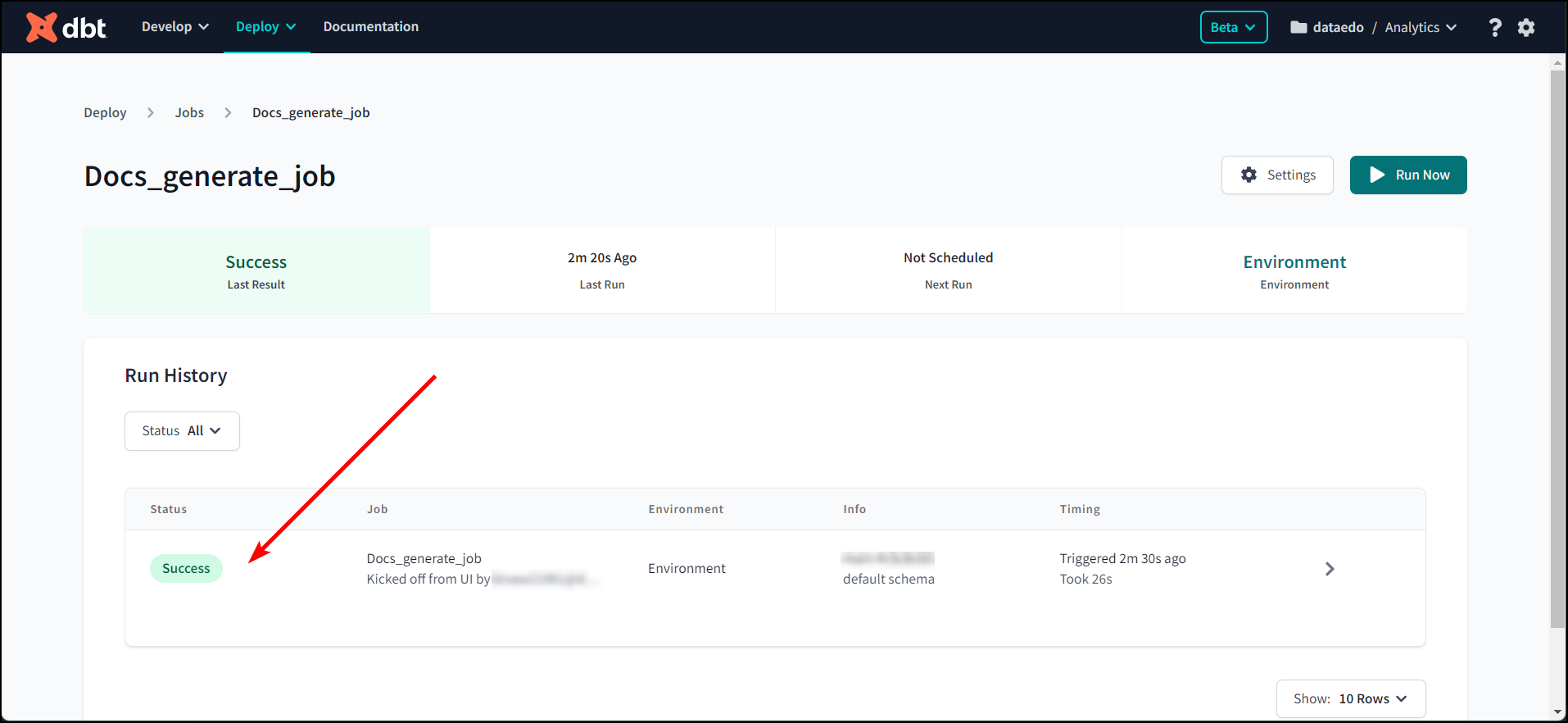

In the upper right corner, click on Run now.

When the run finishes, click it.

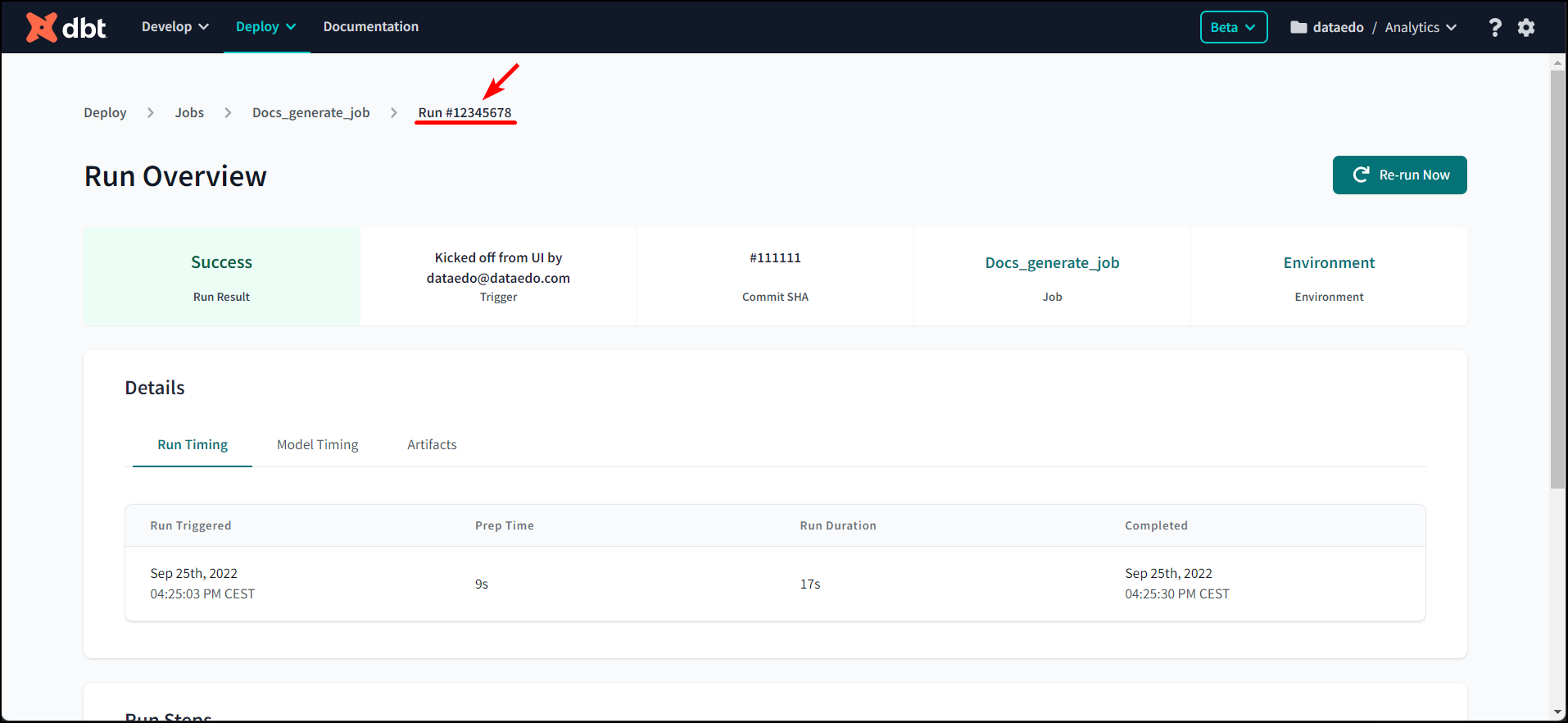

In the field marked with an arrow, you can read the Run ID; here it is 12345678 (without the # sign). Save it, because you will need it for the import.
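If you prefer to script the Run now step, the dbt Cloud Administrative API (v2) has a trigger endpoint whose JSON response contains the new run's ID under `data.id`. The following sketch assumes the same host as an interactive login (`cloud.getdbt.com`, which differs by region). It also assumes that you know your account ID and the job ID, which typically appears in the job's URL. The `cause` text is arbitrary, and the function names are hypothetical. The returned ID is usable for the import only after the run has finished.

```python
import json
import urllib.request

# Assumption: North America multi-tenant host; adjust for your dbt Cloud region.
DBT_CLOUD_HOST = "https://cloud.getdbt.com"


def trigger_job_request(account_id: int, job_id: int, token: str,
                        cause: str = "Run for Dataedo import") -> urllib.request.Request:
    """Build the POST request that starts a new run of an existing job."""
    url = f"{DBT_CLOUD_HOST}/api/v2/accounts/{account_id}/jobs/{job_id}/run/"
    body = json.dumps({"cause": cause}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
    )


def run_id_from_response(payload: dict) -> int:
    """Extract the new run's ID from the parsed trigger response."""
    return payload["data"]["id"]


def trigger_job(account_id: int, job_id: int, token: str) -> int:
    """Start the job and return its run ID (the run is still in progress)."""
    with urllib.request.urlopen(trigger_job_request(account_id, job_id, token)) as response:
        return run_id_from_response(json.load(response))
```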

Connect Dataedo to dbt project

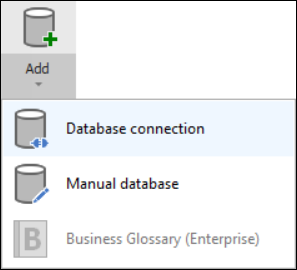

To connect to the dbt project, create new documentation by clicking Add and choosing Database connection.

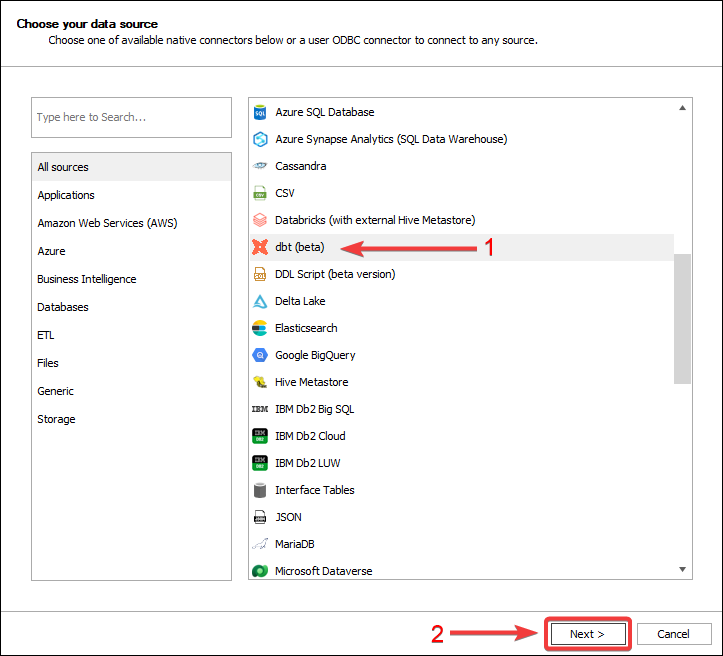

Choose dbt (beta) from the list and click Next >:

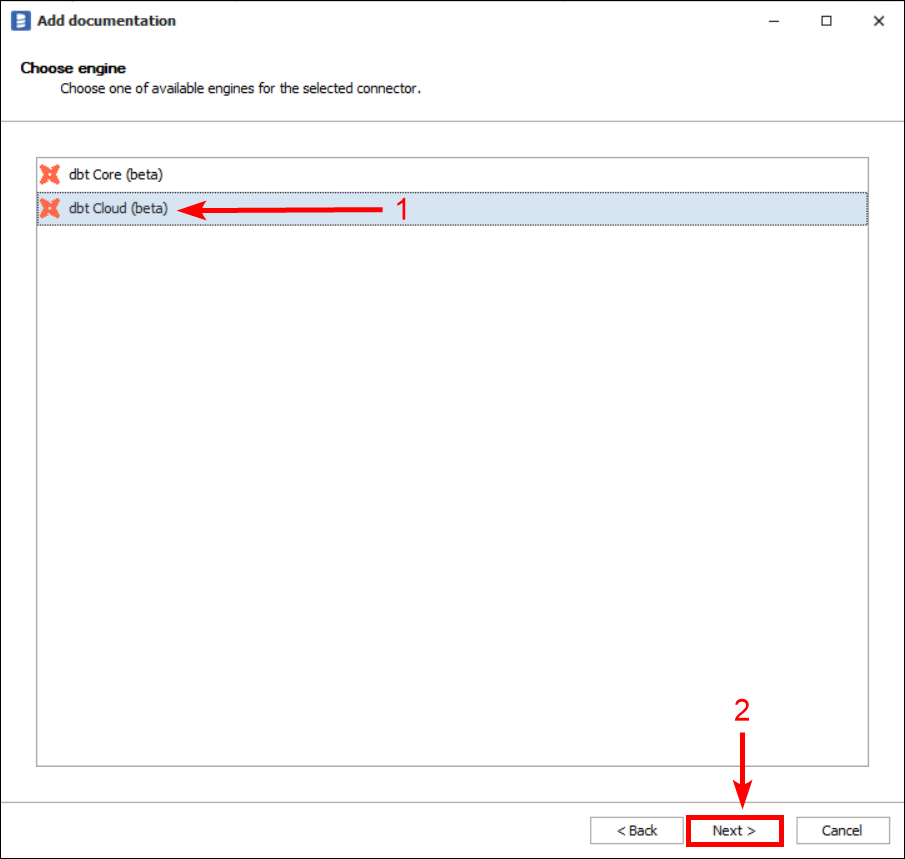

Select dbt Cloud (beta) and click Next >:

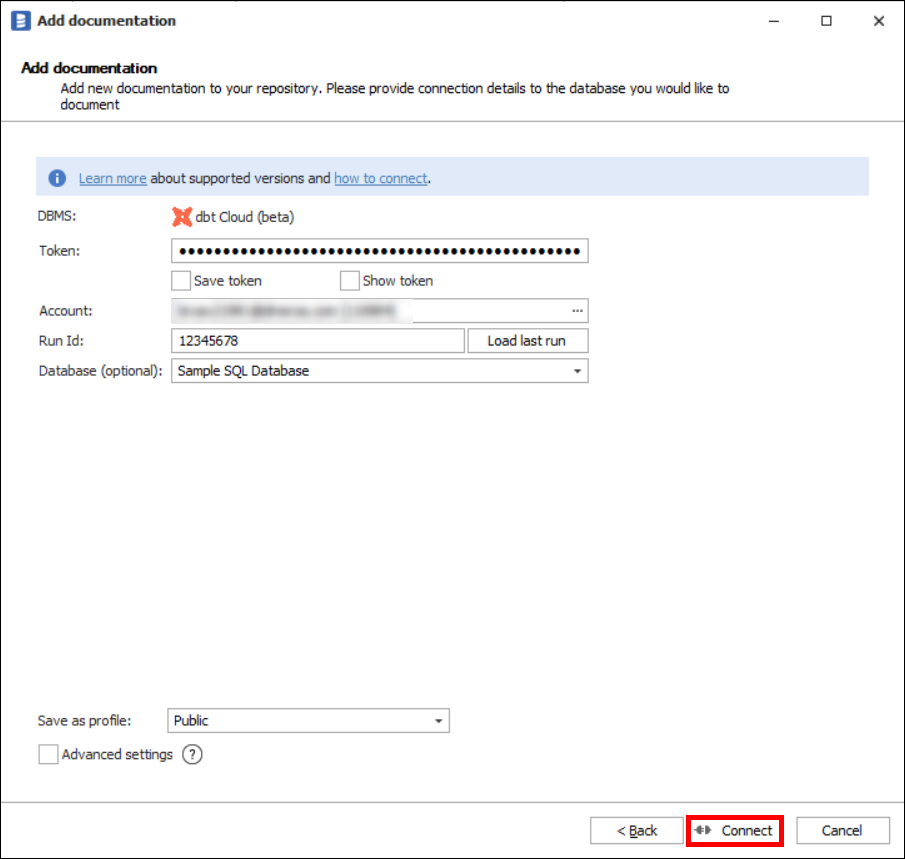

Paste the service token, click the three dots next to Account, and choose your account. Paste the Run ID. For best results, also select the data warehouse in which dbt runs in the Database field; the warehouse must already be documented in Dataedo. Click Connect.

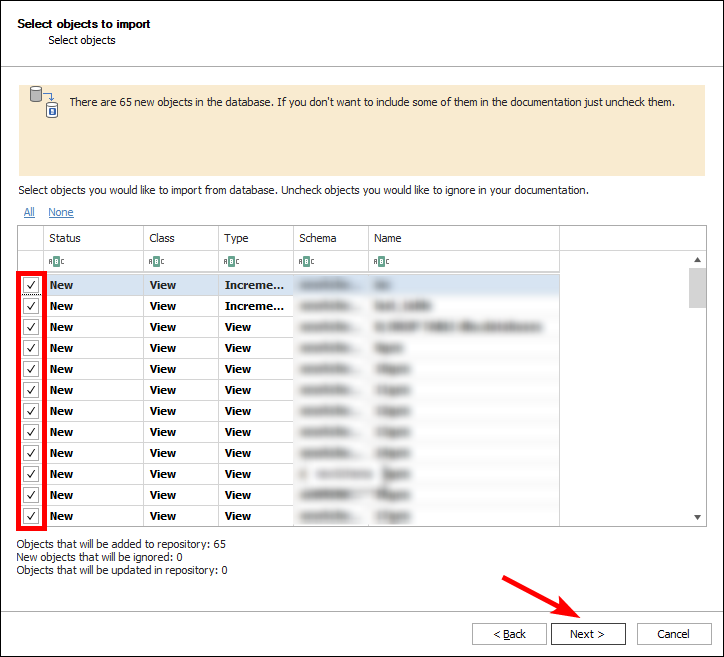

In the next window, you will be asked which objects you want to import into Dataedo. If you want to exclude objects in bulk, use the advanced import filter. After selecting the objects, click Next >.

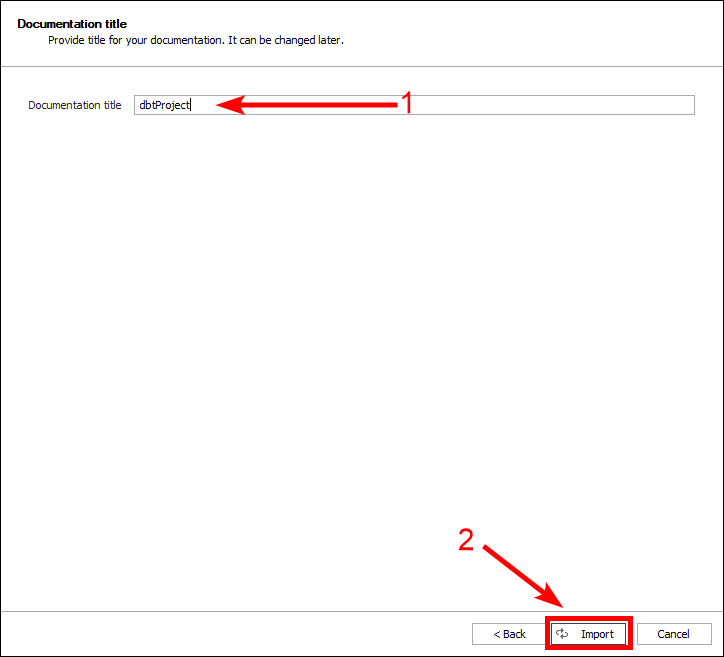

Add a document title and click Import.

Outcome

The dbt project has been imported into new documentation, and automatic data lineage has been created.

Hubert Książek