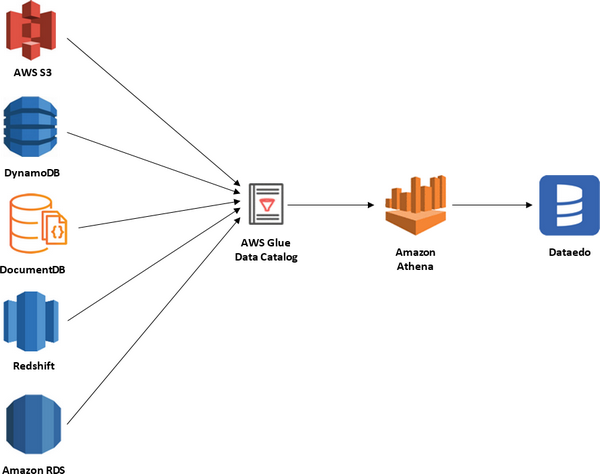

Dataedo supports connector for Amazon Athena, a query engine that allows querying various data sources on Amazon Web Services. It allows access to AWS Glue Data Catalog that can catalog files in S3, tables DynamoDB, DocumentDB, RDS databases and Redshift.

Supported schema elements and metadata

Dataedo reads following metadata from DynamoDB databases:

- Tables

- Attributes

- Name

- Primitive types

- Complex types

Data profiling

Datedo does not support data profiling in DynamoDB with Athena.

Configure AWS Services

Get DynamoDB AWS Region

All services configured later need to be created in the same region as DynamoDB. To get the region of AWS DynamoDB, log in to AWS Console, find the DynamoDB service, and open it. Then in the main window of the DynamoDB service, you will find a region by expanding the list of regions in the upper right corner or by just looking at the URL address – the first part of it is a region.

Create S3 buckets

To connect Amazon Athena to a data source using the Lambda function you need two S3 buckets, or one bucket serving both following purposes:

- S3 Bucket for Athena query results,

- S3 Bucket for Lambda function spill – to store the data that exceeds Lambda function response size limits.

You can use existing buckets instead of creating new ones.

Important: Buckets need to be in the same region as an Amazon DynamoDB database.

Following is a brief instruction on how to create an S3 bucket (see more at AWS documentation):

- Search for S3 service.

- Click the Create bucket button.

- Set the Bucket name and AWS region (same as DynamoDB region!).

- Other options can be left as default.

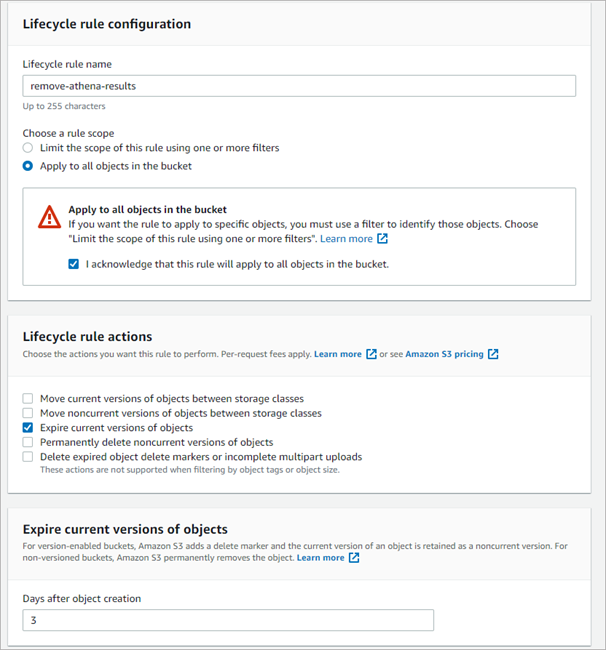

(Optional) Once the bucket is created, set the Lifecycle configuration. You can do this by:

- Clicking the name of the bucket,

- Opening Management tab,

- Clicking Create lifecycle rule,

- Configuring Lifecycle rule. We use the following configuration, which expires object after 3 days:

Set up AWS Athena

You do not need to explicitly activate Athena as by default it is enabled. Although, if you have never used it in a selected region, you need to select an S3 bucket for storing query results.

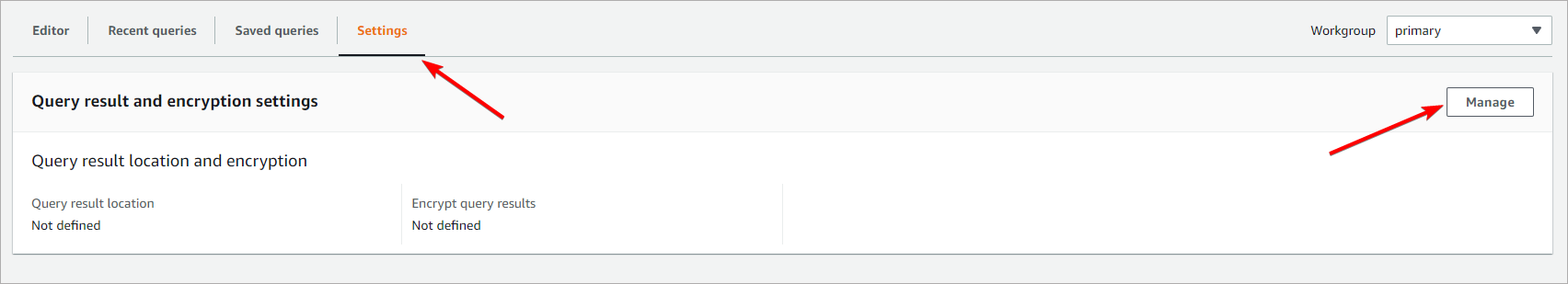

To configure this service for the first time, find Athena Service in an AWS console. If it is the first launch in a region, you will see an Athena home screen. Click the Explore the query editor button, open settings tab and click the Manage button.

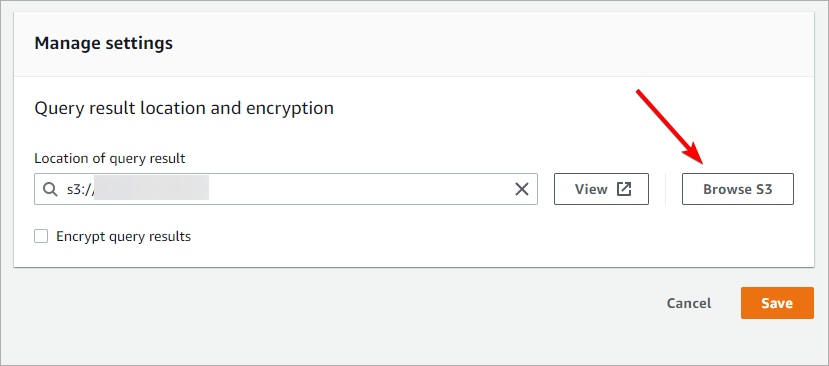

Open a list of available buckets by clicking Browse S3 and select bucket in which query results will be saved. IMPORTANT: If you cannot find a bucket, make sure it was created in the same region as currently selected Save settings.

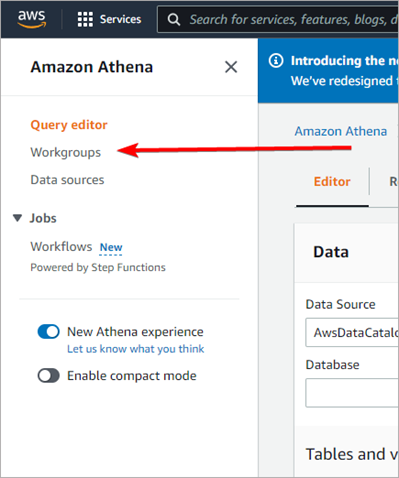

Now you need to configure a custom Workgroup to connect Athena to DynamoDB. Open the Workgroups tab and click the Create workgroup button.

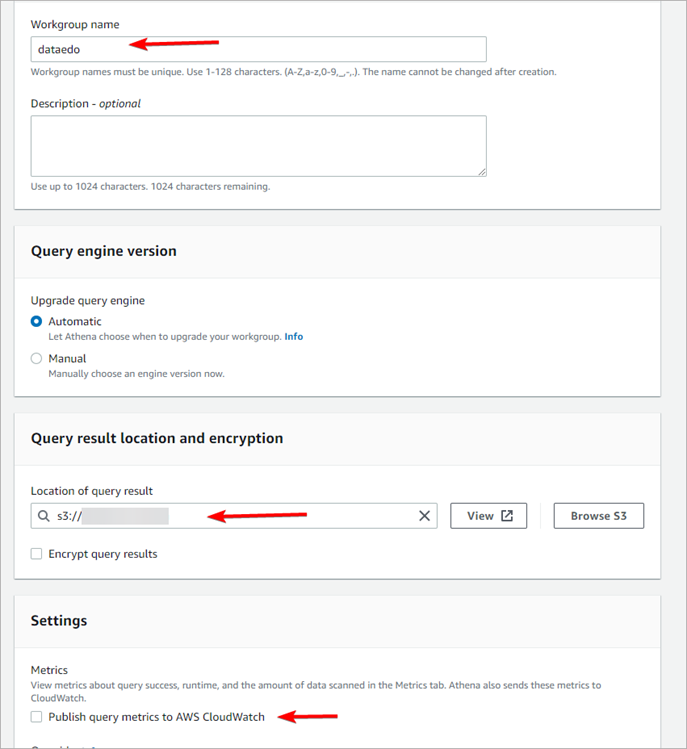

Set the name for the workgroup and select the S3 bucket (can be the very same bucket as previously selected for Athena). Additionally, you can uncheck Publish query metrics to AWS CloudWatch.

Connect Athena to DynamoDB

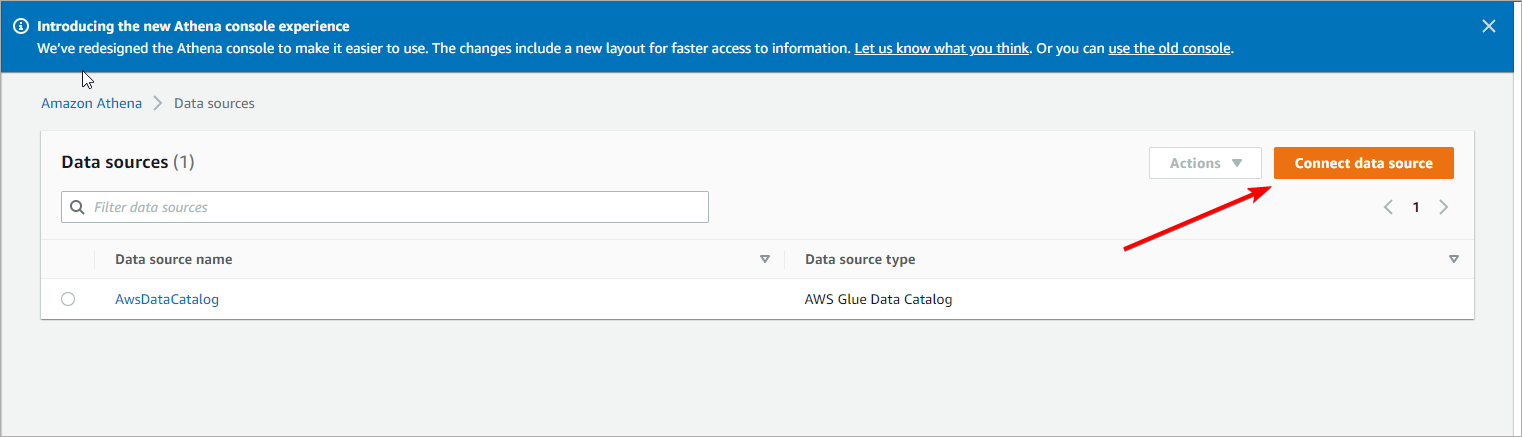

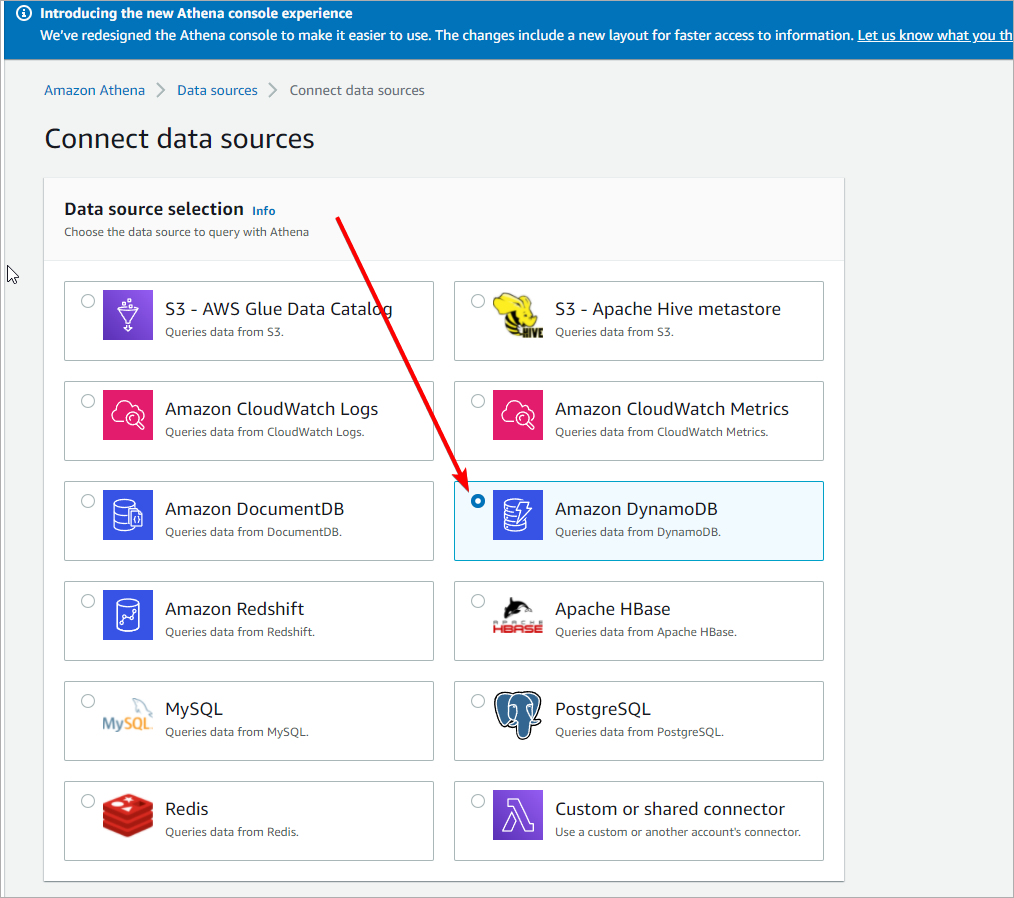

Open the Data sources tab, and click Connect Data source button.

On the list of data sources, select Amazon DynamoDB and set the Data source name.

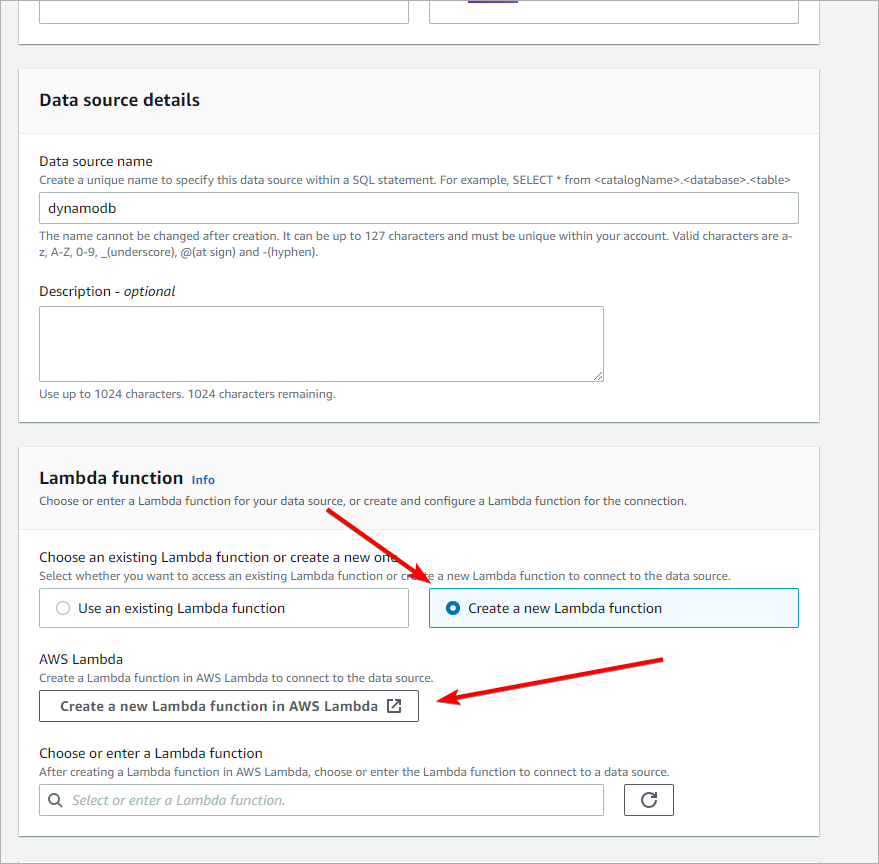

Then in the Lambda Function section click a Create a new Lambda Function button.

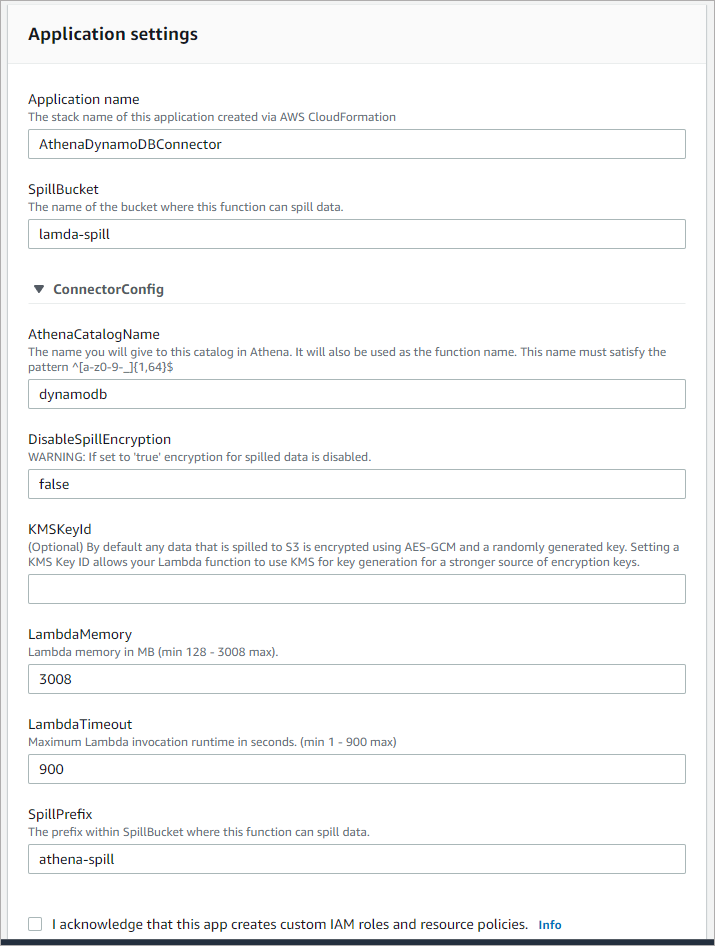

Button redirects you to Create Lambda Function page with a template for DynamoDB. You need to fill the SpillBucket field, which is a bucket we created in the first step. Value for this field is just a bucket name, not full address nor ARN. So, if the bucket name is spill-lambda-example, you need to provide this very name in SpillBucket field.

In the AthenaCatalogName field, set a name for the function, which also serves as a name of the catalog in Athena.

It will take a few minutes to create a lambda function. Once it is created go back to Add Datasource page, and select the function you just created in the Choose Lambda function field.

Create an IAM user

Dataedo connects to AWS Athena with an IAM user, which is a default authentication method for programmatic access. Account for Dataedo will require the following permissions:

- AWSQuicksightAthenaAccess – to read metadata with Athena

- AmazonS3FullAccess – to save query results in an S3 bucket

- AWSLambdaRole – to run the Lambda function

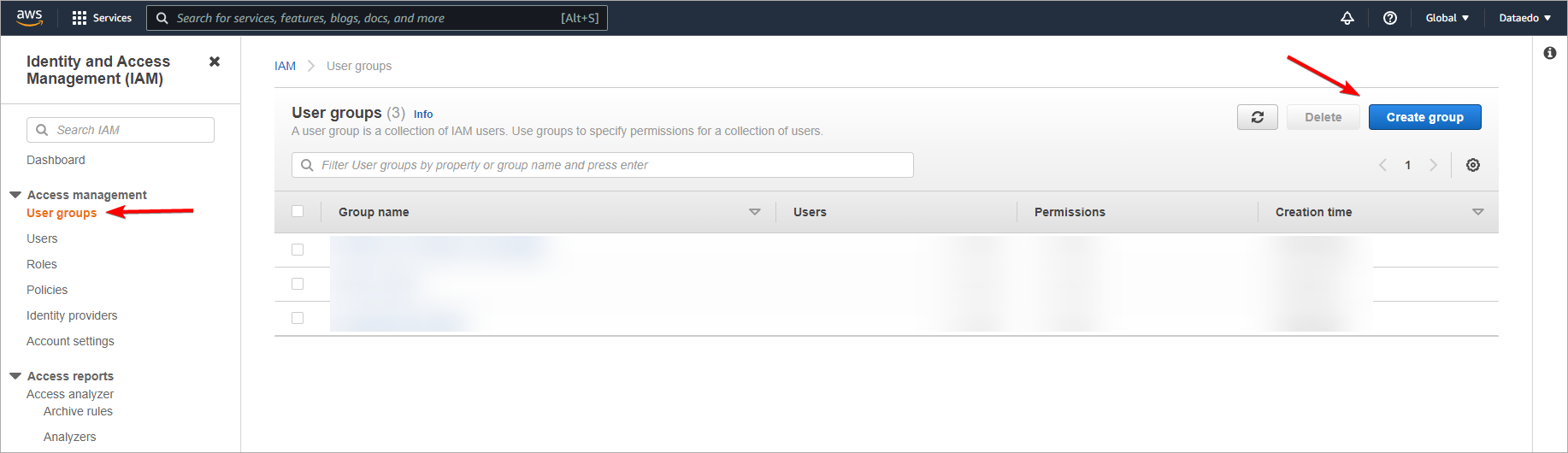

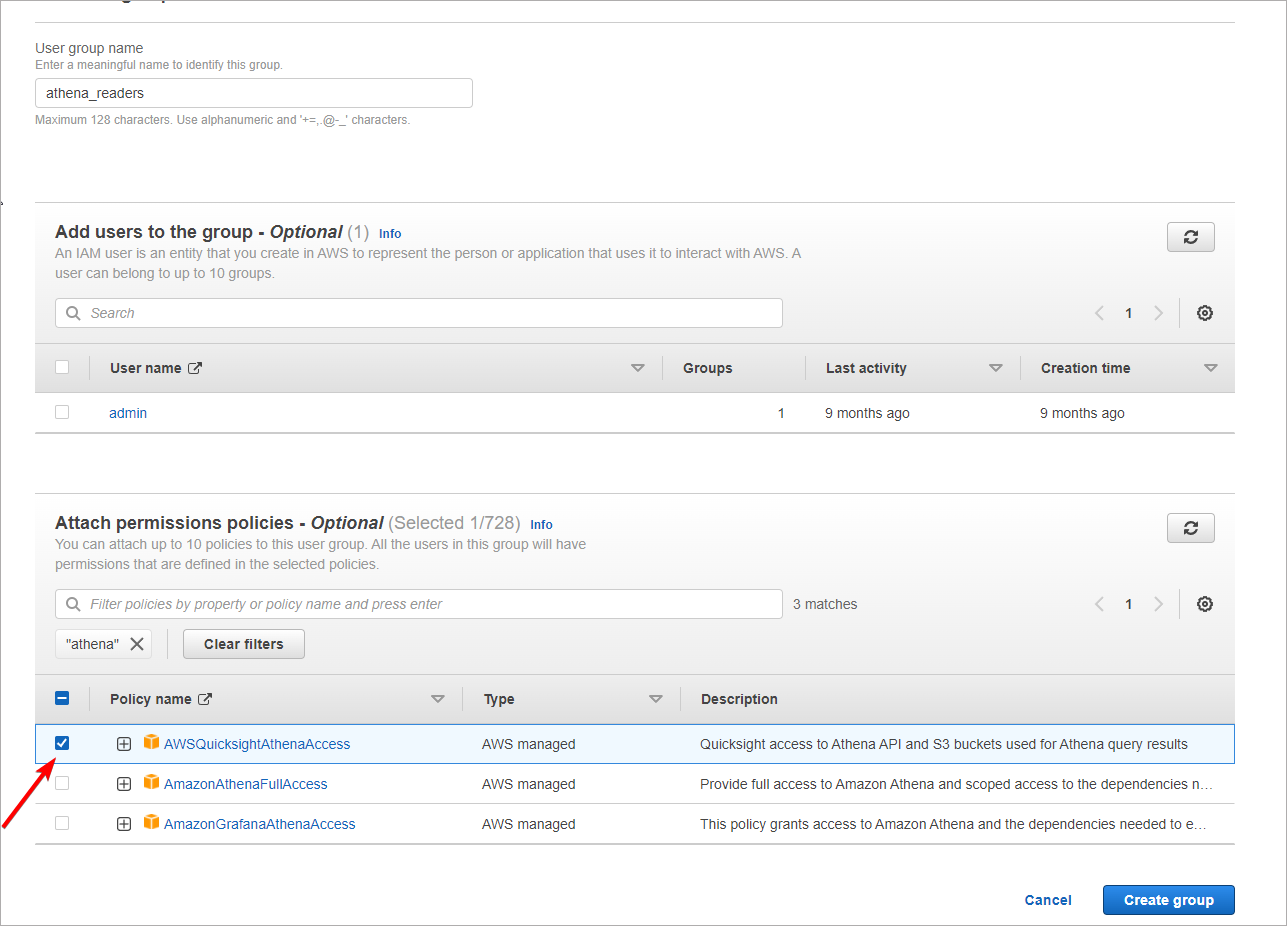

First, create an IAM group with the required permission. Find IAM Service in AWS console, open the User Groups tab, and click Create Group button.

Give your group a distinctive name, and add the aforementioned permissions in Attach permissions policies section.

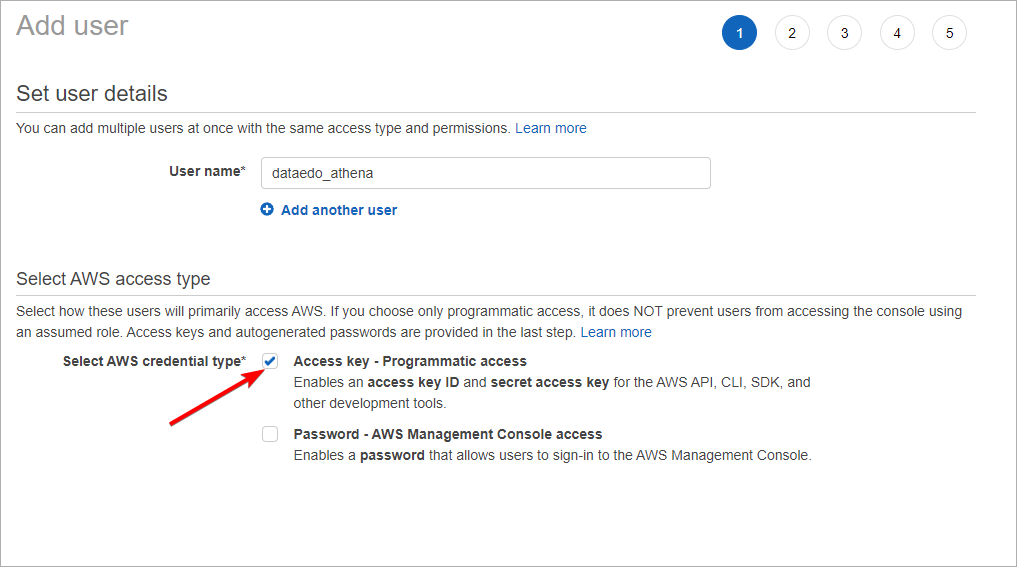

Go back to IAM service main window and open the Users tab and click the Add user button. Give a user a name and select Access Key – Programmatic access in the Select AWS access type section.

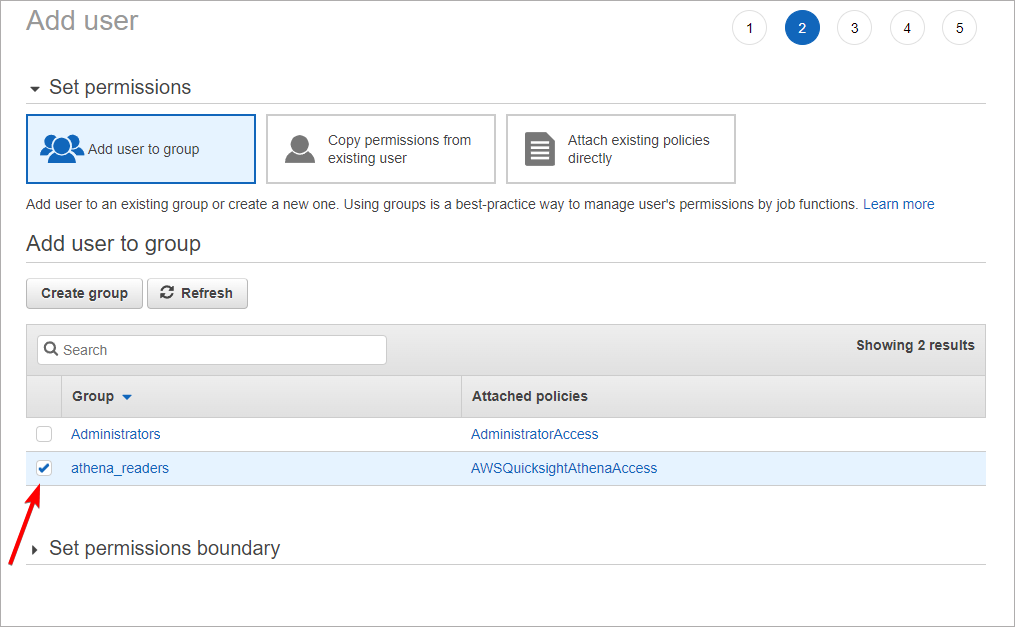

Go next and add the user to the group created in the previous step. Other options can be left default.

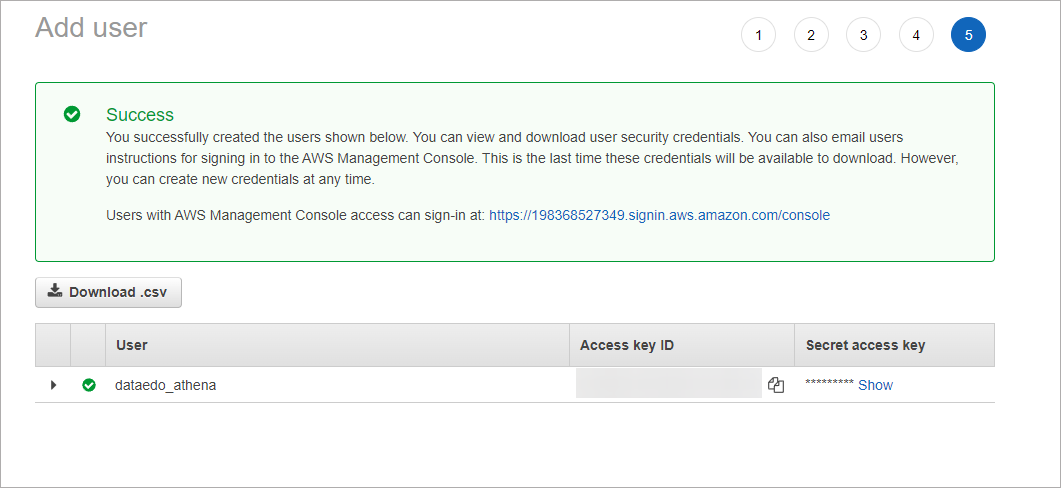

In the last step, AWS will provide you with an Access key ID and Secret access key. These are credentials to your IAM account which you will later use to connect to Athena with Dataedo. Store them safely (we recommend saving these values in an encrypted password manager file).

Connect Dataedo to Amazon DynamoDB with AWS Athena

Add new connection

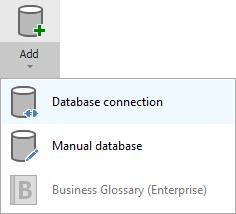

To connect to Amazon DynamoDB with Athena create new documentation by clicking Add documentation and choosing Database connection.

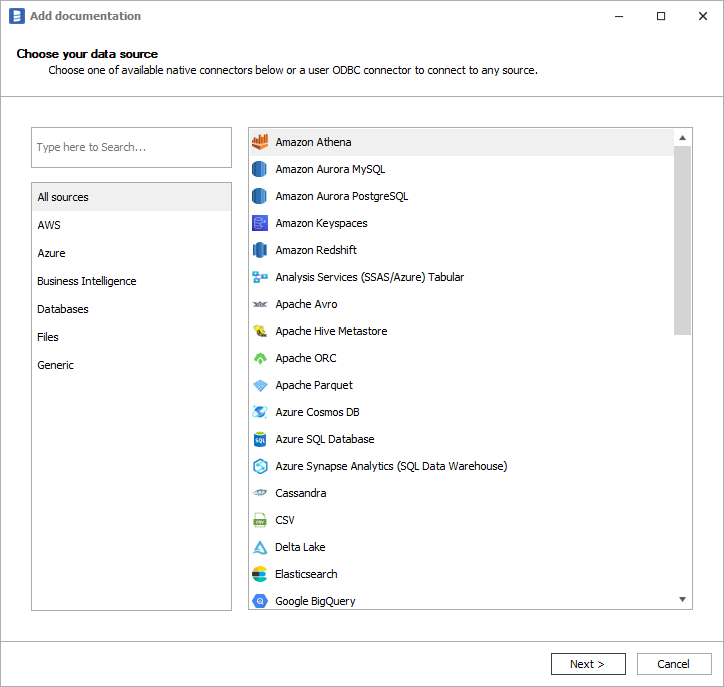

On the Add documentation window choose Amazon Athena:

Connection details

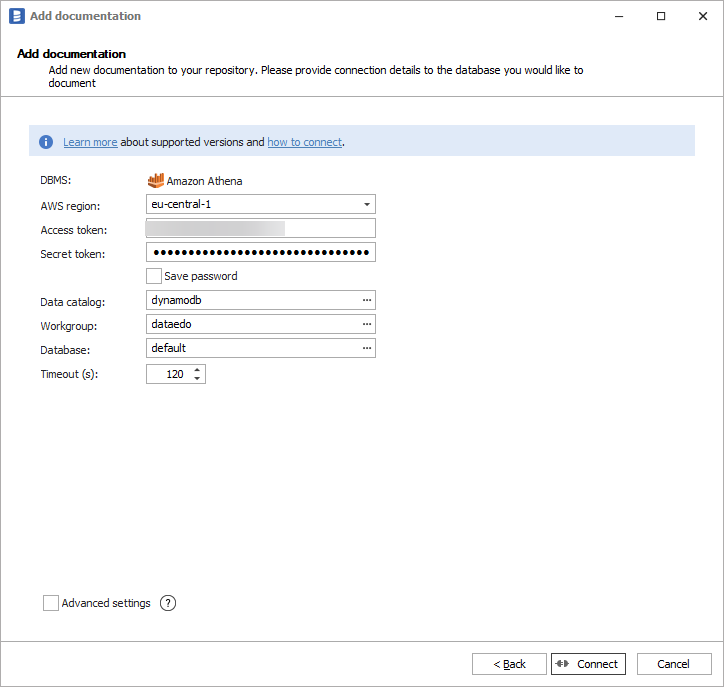

Provide connection details:

- AWS Region - AWS region in which Athena and DynamoDB reside,

- Access Token - IAM user access key ID,

- Secret Token - IAM user secret key,

- Data Catalog - name of lambda function created in Connect Athena to DynamoDB section of this article,

- Workgroup - Athena workgroup

- Database - Athena database.

Importing Metadata

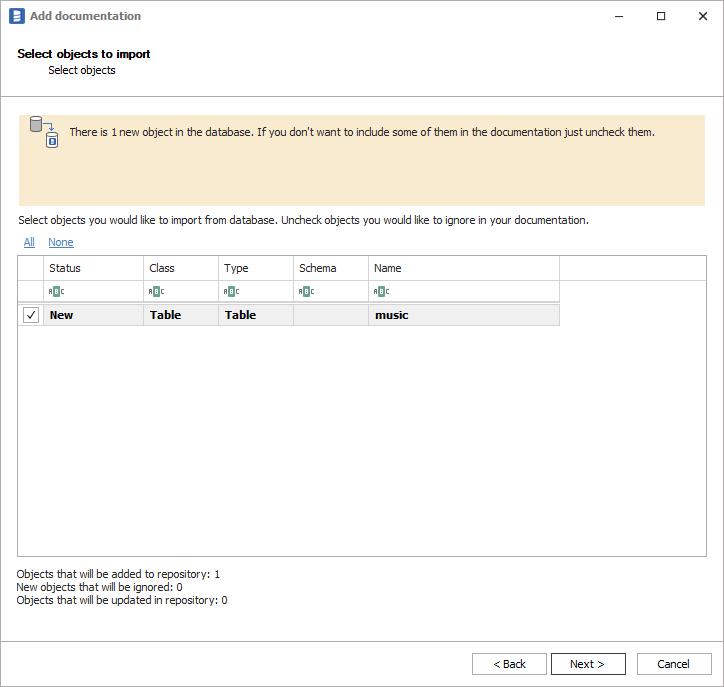

When connection was successful Dataedo will read objects and show a list of objects found. You can choose which objects to import. You can also use advanced filter to narrow down list of objects.

Confirm list of objects to import by clicking Next.

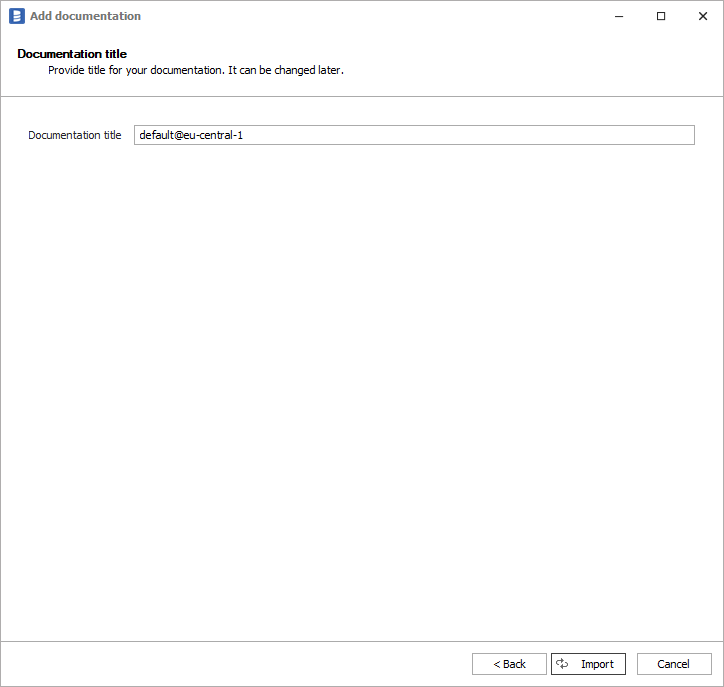

Next screen allow you to change default name of the documentation under which it will be visible in Dataedo repository.

Click Import to start the import.

When done close import window with Finish button.

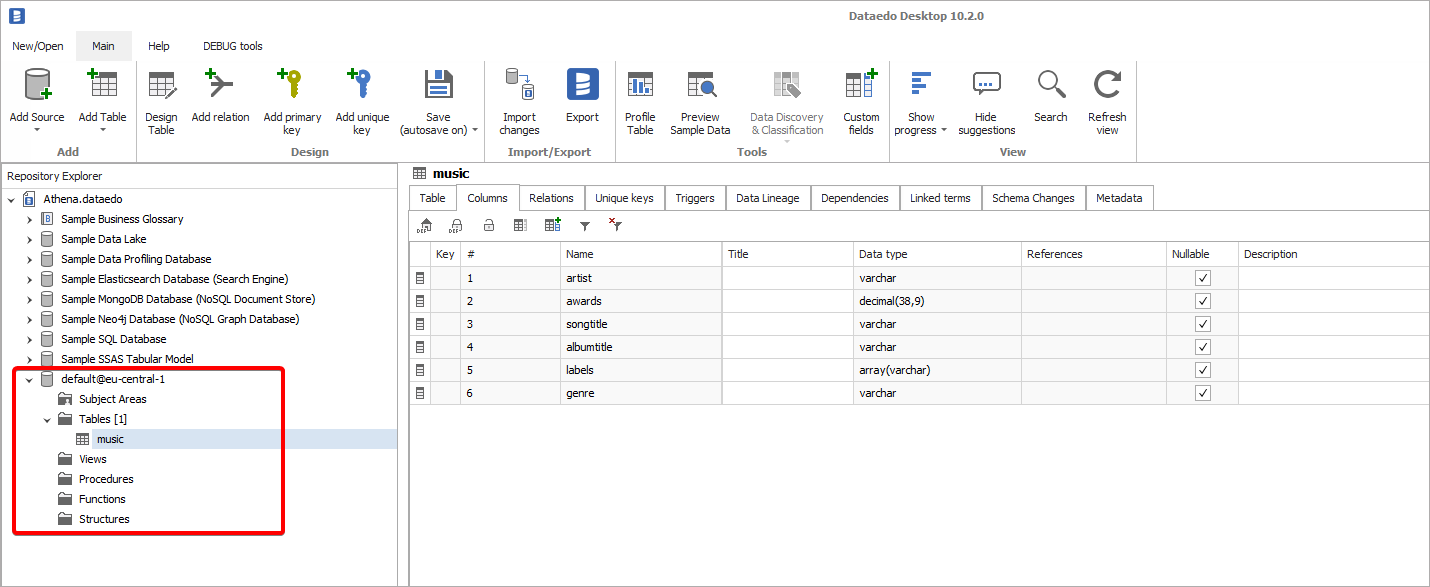

Outcome

Your DynamoDB database schema has been imported to new documentation in the repository.