Connector Features

Supported Versions

Dataedo supports importing data from both standard Amazon Redshift cluster instances and Amazon Redshift Serverless instances

Data Catalog

Dataedo documents metadata of following objects:

- tables

- external tables

- views

- materialized views

- functions

- copy Commands

Data Lineage

For Redshift Dataedo supports both manual and automatic lineage. Automatic lineage is available for views and external tables.

Data Profiling

Users will be able to run data profiling for a table, view or materialized view, then save selected data in the repository. This data will be available from Desktop and Web.

Relationships (PK/FK) Tester

With Dataedo you can check if selected columns are viable candidates to create a relationship between tables.

Data Classification

Users will be able to run classification on Redshift database in the repository in serach of columns containing potentially sensitive data. All built in functions are supported.

Connection requirements

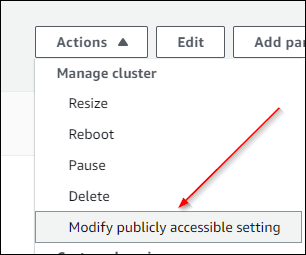

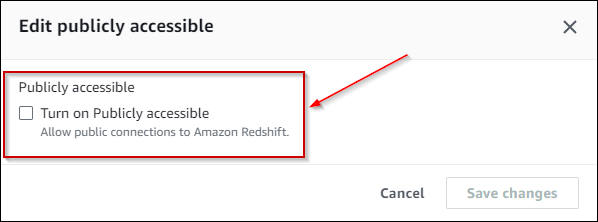

Cluster VPC Option

In order to import Redshift metadata. Cluster have to have turned on Public accessibility of cluster.

How to connect

- Host - provide a address of redshift endpoint,

- Port - change the default port of Amazon Redshift instance if required,

- User - provide username of user (either root or IAM) that has access to Redshift database,

- Password - provide password for given username,

- SSL mode:

- Disable - don't use SSL,

- Require - conect with SSL. If server doesn't support SSL connection won't be established,

- Database - type in database name.

Connector specification

Imported objects

| Object | Imported as |

|---|---|

| Table | Table |

| External Table | Table |

| View | View |

| Materialized View | View |

| Function | Function |

| Copy Command | Sql Script |

Imported metadata

| Imported | Editable | |

|---|---|---|

| Tables | ✅ | ✅ |

| Columns | ✅ | ✅ |

| Data types | ✅ | |

| Nullability | ✅ | |

| Default value | ✅ | ✅ |

| Column comments | ✅ | ✅ |

| Table comments | ✅ | ✅ |

| Foreign keys | ✅ | ✅ |

| Primary keys | ✅ | ✅ |

| Unique indexes | ✅ | ✅ |

| Views, Materialized Views | ✅ | ✅ |

| Script | ✅ | ✅ |

| Columns | ✅ | ✅ |

| Data types | ✅ | |

| Nullability | ✅ | |

| Default value | ✅ | ✅ |

| Column comments | ✅ | ✅ |

| View comments | ✅ | ✅ |

| User-defined Functions | ✅ | ✅ |

| Script | ✅ | ✅ |

| Parameters | ✅ | |

| Returned Value | ✅ | |

| Parameter comments | ✅ | ✅ |

| Function comments | ✅ | ✅ |

| Copy Commands | ✅ | ✅ |

| Script | ✅ | ✅ |

Supported features

| Feature | Imported |

|---|---|

| Import comments | ✅ |

| Write comments back | |

| Data profiling | ✅ |

| Reference data (import lookups) | ✅ |

| Importing from DDL | |

| Generating DDL | ✅ |

| FK relationship tester | ✅ |

Data profiling

Datedo supports following data profiling in Redshift:

| Profile | Support |

|---|---|

| Table row count | ✅ |

| Table sample data | ✅ |

| Column distribution (unique, non-unique, null, empty values) | ✅ |

| Min, max values | ✅ |

| Average | ✅ |

| Variance | ✅ |

| Standard deviation | ✅ |

| Min-max span | ✅ |

| Number of distinct values | ✅ |

| Top 10/100/1000 values | ✅ |

| 10 random values | ✅ |

Read more about profiling in a Data Profliling documentation.

Data Lineage

| Source | Method | Version |

|---|---|---|

| Views - object level | From dependencies | 10.4 |

| Views - object level | From SQL parsing | 10.4 |

| Views - column level | From SQL parsing | 10.4 |

| External Tables - object level | From dependencies | 23.2 |

| External Tables - object level | From Linked Sources | 24.1 |

Known issues and limitations

- Copy Commands - Due to retention time in Redshift Copy Logs, after one week (default, can be changed in cluster options) Copy Commands would be impossible to import

Required access level

Importing database schema requires certain access level in documented database. Granting USAGE permission for information_schema, pg_catalog and sys schemas in the documented database to a user will allow them to import all objects from the database. Alternatively, you can grant SELECT permission only for specific objects you want to document.

Following objects are accessed during schema import process:

- SVV_TABLES

- SVV_EXTERNAL_TABLES

- SVV_EXTERNAL_SCHEMAS

- SVV_COLUMNS

- INFORMATION_SCHEMA.ROUTINES

- INFORMATION_SCHEMA.VIEWS

- INFORMATION_SCHEMA.PARAMETERS

- INFORMATION_SCHEMA.VIEW_TABLE_USAGE

- INFORMATION_SCHEMA.TABLES

- PG_CATALOG.PG_PROC

- PG_CATALOG.PG_NAMESPACE

- PG_CATALOG.PG_LANGUAGE

- PG_CATALOG.PG_DESCRIPTION

- PG_CATALOG.PG_CONSTRAINT

- PG_CATALOG.PG_CLASS

- PG_CATALOG.PG_ATTRIBUTE

- SYS.SYS_QUERY_HISTORY (only for Copy History Import)